This post is co-authored with Ben Garfinkel. It is cross-posted from the CEA blog. A PDF version is also available.

Summary: Some strategic decisions available to the effective altruism movement may be difficult to reverse. One example is making the movement’s brand explicitly political. Another is growing large. Under high uncertainty, there is often reason to avoid or delay such hard-to-reverse decisions.

Table of contents

Introduction

What is reversibility?

How to choose?

Fundamental considerations

Secondary considerations

Where do all the social movements go?

Introduction

The importance of option value is widely appreciated within the effective altruism movement. In an uncertain world, keeping multiple paths open can be very valuable.

One aspect of option value is reversibility. When we consider a change from the status quo to a new state of affairs, we risk losing option value if the decision is difficult to reverse. If we have a white cloth, we can dye it black at any time. However, once we have dyed it black, it would take much more work to make it white again.

The effective altruism movement faces many similar strategic situations, in which it is easier to leave the status quo than to return to it. For instance:(1)

- The effective altruism movement is currently not strongly associated with any political party, or with any of the mainstream political ideologies. In that sense, the effective altruism brand is relatively apolitical. This could easily change, e.g., if the movement allied itself with a certain political party. However, once that step has been taken, it might be hard to go back to an apolitical brand.

- The effective altruism movement is currently quite small. The movement may try to grow big, but if it does, it will arguably be hard to reverse that decision. Shrinking the movement in a way which does not cause serious damage is presumably very difficult.

- The effective altruism movement has invested in acquiring a reputation for integrity, rigour, friendliness, and other kinds of prosocial behaviour. It may, however, decide that such a reputation is too costly to uphold, and that some level of dishonesty, lack of rigour, or unfriendliness is acceptable. Since it is easier to destroy a good reputation than to build one, it is plausibly hard to reverse such a decision.(2)

What is reversibility?

To understand the notion of reversibility, let us first look at the notion of option value. It can be defined as follows.

Option value: The option value associated with a possible choice is the expected value of having this choice available.

To use a standard example from the literature, it can be in your self-interest to support a tax for the maintenance of Sequoia National Park even if you are not sure that you will ever visit. The fact that you might some day choose to visit the park, and can expect the visit to be worthwhile if you do, gives this possible choice option value.(3)

The concept of option value is frequently applied within the effective altruism movement. For example, the Open Philanthropy Project has argued that working on many different causes gives it the option value of being able to focus on any of these causes in the future.

Now suppose that one is considering leaving some state A (e.g., being an apolitical movement) in order to enter some state B (e.g., being a political movement). While one is in state A, one derives option value from the possible choice to enter state B. One loses this option value, of course, by actually entering B. But one may also gain option value from the possible choice to re-enter A.

The right decision in this case depends in part on how much option value one would gain. A good heuristic here is to ask how reversible the decision to leave state A for state B would be.

We define:

Reversibility: The reversibility of a decision to leave state A for state B is the reciprocal (i.e. inverse) of the direct cost of returning to A.(4)
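Written as a formula (the notation is ours, not the authors'), with $c(B \to A)$ standing for the direct cost of returning from state B to state A, the definition reads:

```latex
R(A \to B) = \frac{1}{c(B \to A)}, \qquad
c(B \to A) = \infty \;\Longrightarrow\; R(A \to B) = 0
```

The definition implies that a decision which can be undone at no direct cost has unbounded reversibility, while one that cannot be undone at all, whose direct cost is infinite as endnote 4 notes, has reversibility zero.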

To better understand the significance of reversibility, let us consider a concrete case.

Return to the example of becoming a political movement, and suppose that this decision has a low direct cost but very low reversibility. Then many future opportunities that will be available to political movements (such as the opportunity to partner with an influential activist group) are in practice available to an apolitical movement too, since it can become political cheaply whenever such an opportunity arises. In contrast, future opportunities that will be available to apolitical movements (such as the opportunity to attract a wide range of recruits) will not in practice be available to a political movement, since the direct cost of becoming apolitical again will be too high.

Note that reversibility is not the only determinant of option value. It is, however, a particularly significant one, and one that is easy to conceptualize and to make quick judgements about.

In this article, we focus on decision situations where the decision to exit the status quo is easier to make than to reverse. This choice of focus is not arbitrary. In many situations where we might consider two different strategies, one much easier to switch out of than the other, the question of which strategy to follow will only be a live one if we are currently following the strategy that is easy to switch out of. Due to this selection effect, we will less often be considering situations in which it is easier to re-enter the status quo than to exit it.(5)

How to choose?

Let us now have a closer look at how to evaluate a potential decision by a social movement to switch strategies (e.g., to become explicitly political). We will first look at five fundamental considerations, before turning to eight secondary considerations.(6)

Fundamental considerations

Core expected value. We define a strategy’s core expected value to be its expected value given that one does not switch out of it. A large core expected value can obviously trump concerns having to do with reversibility. For instance, if a large movement has much greater core expected value than a small movement, then it may be worth growing large even if the decision is not reversible.

Reversibility. Lower reversibility normally counts against a decision to adopt a new strategy (though see uncertainty about core expected value), since this implies that we will not be able to derive much option value from the possibility of switching back.

Direct cost. As also discussed in endnote 4, we define the direct cost of a decision to switch strategies as the cost of the decision itself, rather than the opportunity cost of no longer following the initial strategy. For example, the direct cost of switching from a small movement to a large movement might include time and money spent on outreach and the loss of any members who are alienated by the growing process (but not the new size of the movement itself). A large direct cost would of course count against a decision.

Uncertainty about core expected value. The reason reversibility can be so useful is that the core expected value of many decisions is very uncertain. For instance, it is highly uncertain how valuable it would be for the effective altruism movement to grow large. If the movement could reverse that strategy once it had embarked on it, uncertainty would be less of a problem.

Conversely, if we are certain of how valuable our strategies are, reversibility does not matter. But neither does it matter if we believe that there is no way for us to learn more about how valuable they are. Under such radical uncertainty, having the option to reverse your decision is of no avail.

However, the most common scenario is that of more moderate uncertainty. For instance, if we embark on a certain strategy, we tend to improve our estimates of its core expected value. If we learn that it is lower than we thought, having the option to reverse that strategy can be crucial.
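To make the role of moderate uncertainty concrete, here is a minimal two-period toy model in Python. It is a sketch under stated assumptions, not the authors' model: the class `Scenario`, the functions, and every number are hypothetical. The status quo A is worth 1 per period; the new strategy B is worth 2 per period with probability 0.4 and 0 otherwise, so it is worse than A in expectation; B's true value is only revealed after one period spent in it; and there is no discounting.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical toy scenario; every number used below is made up."""
    v_a: float        # known per-period value of staying in the status quo A
    v_b_high: float   # per-period value of the new strategy B if it turns out well
    v_b_low: float    # per-period value of B if it turns out badly
    p_high: float     # prior probability that B turns out well
    horizon: int      # number of periods considered (no discounting)
    cost_in: float    # direct cost of switching A -> B
    cost_back: float  # direct cost of switching B -> A (math.inf = irreversible)


def value_stay(s: Scenario) -> float:
    """Core expected value of never leaving A."""
    return s.horizon * s.v_a


def outcomes_enter_b(s: Scenario) -> dict[str, float]:
    """Value of entering B now, for each possible true value of B.

    We spend one period in B, learn its true value, and then either stay in B
    or pay cost_back to return to A for the remaining periods.
    """
    rest = s.horizon - 1
    outcomes = {}
    for label, v_b in (("high", s.v_b_high), ("low", s.v_b_low)):
        stay_in_b = rest * v_b
        go_back = -s.cost_back + rest * s.v_a  # -inf when cost_back is inf
        outcomes[label] = -s.cost_in + v_b + max(stay_in_b, go_back)
    return outcomes


def expected(outcomes: dict[str, float], p_high: float) -> float:
    """Probability-weighted average over the two possible states of B."""
    return p_high * outcomes["high"] + (1 - p_high) * outcomes["low"]


if __name__ == "__main__":
    base = dict(v_a=1.0, v_b_high=2.0, v_b_low=0.0, p_high=0.4,
                horizon=10, cost_in=0.5)
    print(f"stay in A: {value_stay(Scenario(cost_back=1.0, **base)):.2f}")
    for name, cost_back in (("reversible", 1.0), ("irreversible", math.inf)):
        s = Scenario(cost_back=cost_back, **base)
        out = outcomes_enter_b(s)
        print(f"enter B, {name}: {expected(out, s.p_high):.2f} "
              f"(worst case {min(out.values()):.2f})")
```

With these made-up numbers, staying in A is worth 10.00. Entering B is worth only 7.50 in expectation if the move is irreversible, but 12.30 if it can be undone at a direct cost of 1, because the option to return cuts off the bad branch once we learn which state we are in. Reversibility also raises the worst case from -0.50 to 7.50.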

Uncertainty can also diminish prior to the launch of a strategy, either automatically or as a result of research and testing (see researching and testing strategies). If we expect uncertainty to be reduced in the future, this can be a strong reason to delay a relatively irreversible decision to embark on a new strategy.

Uncertainty about reversibility and direct cost. In addition to being uncertain about expected value, we are also often uncertain about reversibility and direct cost. For instance, it seems very hard to assess how reversible a decision to abandon norms of integrity actually is. This may be a reason against prematurely leaping onto paths that we suspect may be highly irreversible. We may want to wait until we have a more resilient estimate of reversibility and direct cost, e.g., thanks to research or testing (cf. researching and testing strategies).

Secondary considerations

Risk aversion. Risk aversion is normally a reason not to make a hard-to-reverse decision. Since reversibility gives you the option to switch course if your strategy underperforms, it normally reduces the risk of a truly bad outcome. Note, though, that the standard view within the effective altruism movement seems to be that altruists should not be risk-averse.

Focus on long time horizons. If the effective altruism movement remains active for decades, and significant opportunities to do good continue to exist, then it will probably be faced with a large array of opportunities, some of which will only be available to the movement if it is pursuing a particular strategy (such as having a reputation for integrity). Longer time horizons also lead to greater uncertainty about which opportunities may eventually arise or become valuable (cf. uncertainty about core expected value). This means that it may be crucial to keep our options open, by avoiding hard-to-reverse decisions. On the other hand, if the effective altruism movement will be short-lived, or if the best opportunities to do good are fleeting, then option value considerations may not be very significant. Option value can be thought of as a kind of capacity, which the movement may or may not take advantage of in the future.

Cause-neutrality and option value. One of the key features of effective altruism is cause-neutrality: the notion that we should not prejudge what cause to invest in, but rather compare all causes impartially.(7) If the movement finds new and more valuable causes in the future, it can pursue them. This gives the movement much greater option value compared to cause-partial groups, which are set on pursuing certain causes. It is not implausible to believe that most of the effective altruism movement’s expected value derives from this option value. However, some hard-to-reverse decisions may make it significantly harder to pursue some causes. For instance, turning political may make it harder to work on promoting bipartisan civility. This means that making hard-to-reverse decisions on key questions may deprive the effective altruism movement of a significant proportion of the value that cause-neutrality gives it.

Correlation between irreversibility and uncertainty. First, we saw that the question of what strategy to pursue on a certain issue often ceases to be a live one if we take a hard-to-reverse decision. Second, we are normally more certain of the expected value, direct costs, and reversibility of strategies that we have already pursued. Together, these two premises entail a negative correlation between reversibility and uncertainty: if a decision to pursue a certain strategy is hard to reverse, we typically have not pursued it previously, which normally means that we are uncertain about its expected value, direct costs, and reversibility.(8) If so, that can strengthen the case against hard-to-reverse decisions to adopt new strategies (cf. uncertainty about core expected value and uncertainty about reversibility and direct cost).

Side effects on other decisions. Deviations from the status quo on one issue are likely to affect the core expected value, reversibility, and direct cost of other decisions in ways which are hard to predict. For instance, growing the effective altruism movement may affect the core expected value, reversibility, and direct cost of the possible decision to become explicitly political in unpredictable ways. That may be a reason not to make several hard-to-reverse decisions at once.

Researching and testing strategies. Often, it is possible to learn more about the core expected value, reversibility, and direct cost of a decision to adopt a new strategy prior to embarking on it. This can be done through research, or through testing the strategy on a small scale. Whether to research and test a specific strategy depends on costs and expected information value. The information value is, in turn, dependent on estimated reversibility. Everything else being equal, a low level of reversibility is a reason to invest more resources in researching and testing a strategy prior to pursuing it.
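The claim that lower reversibility raises the value of research can be checked in a small toy setting. The sketch below is ours, not the authors': `Scenario`, `act_now`, `research_first`, `value_of_information`, and all numbers are hypothetical. It assumes a study that costs nothing and reveals the true value of the new strategy B perfectly, so the computed value of information is an upper bound on what research or small-scale testing would be worth paying for. The status quo A is worth 1 per period, and B is worth 2 per period with probability 0.4 and 0 otherwise.

```python
from __future__ import annotations

import math
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical toy scenario; every number used below is made up."""
    v_a: float        # known per-period value of the status quo A
    v_b_high: float   # per-period value of strategy B if it turns out well
    v_b_low: float    # per-period value of B if it turns out badly
    p_high: float     # prior probability that B turns out well
    horizon: int      # number of periods considered (no discounting)
    cost_in: float    # direct cost of switching A -> B
    cost_back: float  # direct cost of switching B -> A (math.inf = irreversible)

    def branches(self) -> tuple[tuple[float, float], ...]:
        """(probability, per-period value of B) for each possible state."""
        return ((self.p_high, self.v_b_high), (1 - self.p_high, self.v_b_low))


def act_now(s: Scenario) -> float:
    """Best expected value when deciding without research: stay in A for good,
    or enter B, learn its value after one period, and switch back if that is
    possible and worthwhile."""
    rest = s.horizon - 1
    enter = 0.0
    for p, v_b in s.branches():
        go_back = -s.cost_back + rest * s.v_a  # -inf when irreversible
        enter += p * (-s.cost_in + v_b + max(rest * v_b, go_back))
    return max(s.horizon * s.v_a, enter)


def research_first(s: Scenario) -> float:
    """Expected value when a free, perfectly informative study reveals B's
    value before deciding, so B is entered only when it beats A."""
    return sum(p * max(s.horizon * s.v_a, -s.cost_in + s.horizon * v_b)
               for p, v_b in s.branches())


def value_of_information(s: Scenario) -> float:
    """Most it would be worth paying for the study."""
    return research_first(s) - act_now(s)


if __name__ == "__main__":
    base = dict(v_a=1.0, v_b_high=2.0, v_b_low=0.0, p_high=0.4,
                horizon=10, cost_in=0.5)
    for cost_back in (0.0, 1.0, math.inf):
        voi = value_of_information(Scenario(cost_back=cost_back, **base))
        print(f"cost of reversing = {cost_back}: value of research = {voi:.2f}")
```

Under these made-up numbers, the value of research rises from 0.90 when switching back is free, to 1.50 when it costs 1, to 3.80 when the move is irreversible: the harder a decision is to undo, the more it is worth learning before making it.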

Overconfidence. Humans tend to be biased towards overconfidence. That may make us underestimate the actual uncertainty about core expected value and reversibility, and therefore the importance of reversibility. In particular, we may underestimate the number of doors that a hard-to-reverse decision closes. We often employ inside-view thinking (cf. Robin Hanson) to model plausible future scenarios in terms of specific causal pathways. When doing so, we often overestimate the extent to which the pathways we have identified exhaust the space of plausible scenarios. We often miss important ways in which the future could pan out, and in some of those, it may be very valuable to be in the state (e.g., being a small movement) that is difficult to re-enter.

The unilateralist’s curse. Many hard-to-reverse decisions are instances of the unilateralist’s curse, a concept described in a paper by Bostrom, Douglas and Sandberg. The “curse” appears in situations where a group’s decision may effectively be determined by a unilateral action from a single member of the group. For instance, if one person decides to tell the object of a surprise party in advance, they have effectively made the decision for the whole group. In particular, the curse predicts that the more members the group has, the more likely it is that the decision will be determined by unilateral action, regardless of whether it is the right one.

All of the examples discussed in the paper by Bostrom et al., such as the case of the spoiled surprise party, are also examples of hard-to-reverse decisions. However, there are also many hard-to-reverse decisions which cannot be undertaken unilaterally.(9) For instance, it is probably hard for a single effective altruist organization to grow the movement by itself, in the face of resistance from other parts of the movement.

Of the three examples discussed above, decisions regarding norms of, e.g., honesty and integrity are probably the most susceptible to the unilateralist’s curse. For such decisions, low reversibility and the unilateralist’s curse have compounding effects. That a small group can unilaterally make a decision which irreversibly harms the whole movement may pose a serious risk.

Bostrom et al. suggest that the unilateralist’s curse can be lifted through deliberation or deference to other actors: what they call the principle of conformity.(10) In short, they argue, members of a group should agree to a code of conduct that makes it unlikely that any individual member will take the unilateral decision against the wishes of the group.

It is hard to say in the abstract which of these considerations are most important, but in our view, what one should look at first is core expected value, reversibility, and direct cost. Some of the secondary considerations are also quite important. These include focus on long time horizons, correlation between irreversibility and uncertainty, and side effects on other decisions. It could be useful to reflect on them, especially because they are less obvious than the fundamental considerations.

Where do all the social movements go?

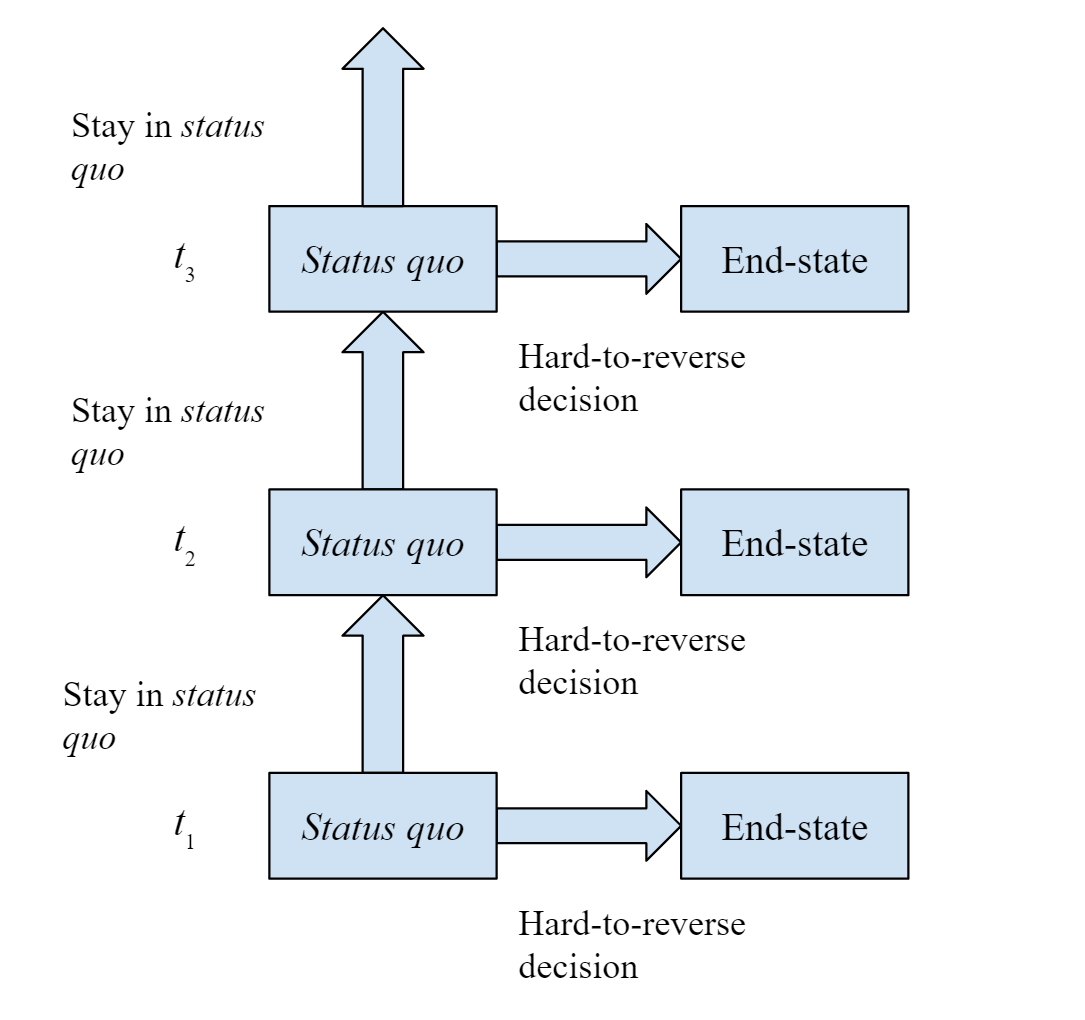

To put the concept of reversibility in perspective, let us note that it could help to explain and predict the trajectory of social movements. Suppose that:

- A social movement has an ongoing or recurring opportunity to make a hard-to-reverse decision.

- Given that the movement survives, the probability that it takes the hard-to-reverse decision at any particular point in time never dips below some (potentially very low) lower bound.

Then:

- If the movement exists for long enough, it will almost certainly take the hard-to-reverse decision eventually.(11)

Figure: A social movement facing a recurrent opportunity to make a hard-to-reverse decision.
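The argument can be made precise with a short calculation. As a sketch, with notation of our own: let $p_t$ be the probability that the movement takes the decision in period $t$, given that it has survived and has not yet taken it, and let $\varepsilon > 0$ be the lower bound assumed above, so that $p_t \ge \varepsilon$ for every $t$. Then

```latex
\Pr(\text{decision not yet taken after } n \text{ periods} \mid \text{survival})
  = \prod_{t=1}^{n} (1 - p_t)
  \le (1 - \varepsilon)^{n}
  \xrightarrow[n \to \infty]{} 0 .
```

For a purely illustrative $\varepsilon = 0.01$ per year, $(0.99)^{100} \approx 0.37$, so a surviving movement would more likely than not have taken the decision within a century.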

This means that if it is very difficult to reverse the decisions to grow large, to go explicitly political, or to give up on norms of honesty and integrity, we may expect most social movements capable of entering these states to end up in them. In particular, we may expect that the effective altruism movement will do so by default.

This means, in turn, that the effective altruism movement should think carefully about whether those end-states are indeed desirable. That depends on a host of considerations. We have addressed some of them here, but individual strategic decisions must be decided on a case-by-case basis. If the movement decides that a particular end-state is undesirable, it should reflect on what can be done to avoid ending up in it, e.g., as a result of unilateral action.

Notes

(1) Note that the primary point of these examples is to illustrate the notion of reversibility. There might be reasonable disagreement on how reversible these strategies are.

(2) Although our main focus here is on strategic choices for the effective altruism movement as a whole, it is worth noting that reversibility is often salient in the decisions made by individuals within the movement as well. For instance, the choice to leave a high-status career for a lower-status one may be difficult to reverse. It can also be very difficult for individuals to shed a reputation for being a bad apple.

(3) See Richard C. Bishop’s Option Value: An Exposition and Extension (1982) for a more thorough discussion of this example and the concept of option value.

(4) This definition can be made more precise. By the “direct cost” of switching states we mean the cost of switching itself, rather than the opportunity cost of no longer being in the initial state. For instance, in the case of Sequoia National Park, the direct cost of moving from a developed to an undeveloped state would include the financial cost of demolishing buildings, replanting trees, importing animals, and so on, but would not include the lost tax revenue from any businesses displaced. Note also that for cases where it is in fact impossible to switch states, the direct cost is infinite.

(5) Much of our analysis is applicable to cases where the more reversible decision is not the status quo as well. This includes situations where we face two new options which differ in terms of reversibility.

(6) The fundamental considerations are the ones that we believe it is most important to take into account when choosing whether to make a hard-to-reverse decision. The secondary considerations are ones that we believe are either less important or useful mainly insofar as they help us to think more clearly about the fundamental considerations. However, this distinction is quite rough.

(7) Here we use the term “cause-neutral” in the sense that one of us, Stefan, calls “cause-impartiality” in his article Understanding cause-neutrality.

(8) It should be said, however, that in some cases we may have firm knowledge of the value of hard-to-reverse strategies from other sources. For instance, deciding to change your career from earning to give to academia may be hard to reverse, but you can still get a fair estimate of its value through looking at other people’s careers.

(9) Conversely, there are some decisions prone to the curse which are not hard to reverse. For instance, suppose that a member of a group can veto a decision to accept a certain offer, and that the offer will remain open indefinitely. That is a unilateralist’s curse situation, and yet the decision is not hard to reverse.

(10) There may be an empirical correlation between a refusal to adopt the principle of conformity, and a tendency to rashly take irreversible decisions. This could be mediated by overconfidence in one’s own present judgements of the best course of action. However, this is merely a conjecture. The question should be studied further.

(11) Though note Keynes’s quip that “in the long run we are all dead”.

While I see some value in detailing commonly-held positions like this post does, and I think this post is well-written, I want to flag my concern that it seems like a great example of a lot of effort going into creating content that nobody really disagrees with. This sort of armchair qualified writing doesn't seem to me like a very cost-effective use of EA resources, and I worry we do a lot of it, partly because it's easy to do and gets a lot of positive social reinforcement, to a much greater degree than empirical bold writing tends to get.

I think that the value of this type of work comes from: (i) making it easier for people entering the community to come up to the frontier of thought on different issues; (ii) building solid foundations for our positions, which makes it easier to take large steps in subsequent work.

Cf. Olah & Carter's recent post on research debt.

For what it's worth, I do agree that's where most of the value comes from, though I think the value is much lower than the value of similar empirical/bold writing, at least for this example.

While enough people are skeptical about rapid growth and no one (I think) wants to sacrifice integrity, the warning to be careful about politicization of EA is a timely and controversial one, because well-known EAs have put a lot of might behind Hillary’s election campaign and the prevention of Brexit, to the point that the lines between private efforts and EA efforts may blur.

I doubly agree here. The title "Hard-to-reverse decisions destroy option value" is hard to disagree with because it is pretty tautological.

Over the last couple of years, I've found it to be a widely held view among researchers interested in the long-run future that the EA movement should on the margin be doing less philosophical analysis. It seems to me that it would be beneficial for more work to be done on the margin on i) writing proposals for concrete projects, ii) reviewing empirical literature, and iii) analyzing technological capabilities and fundamental limitations, and less philosophical analysis.

Philosophical analysis such as in much of EA Concepts and these characterizations of how to think about counterfactuals and optionality are less useful than (i-iii) because they do not very strongly change how we will try to affect the world. Suppose I want to write some EA project proposals. In such cases, I am generally not very interested in citing these generalist philosophical pieces. Rather, I usually want to build from a concrete scientific/empirical understanding of related domains and similar past projects. Moreover, I think "customers" like me who are trying to propose concrete work are usually not asking for these kinds of philosophical analysis and are more interested in (i-iii).

I agree with some versions of this view. For what it's worth I think there may be a selection effect in terms of the people you're talking to, though (perhaps in terms of the organisations they've chosen to work with): I don't think there's anything like consensus about this among the researchers I've talked to.

For an example of this view, see Nick Beckstead's research advice from back in 2014:

I suspect that the distinctions here are actually less bright than "philosophical analysis" and "concrete research". I can think of theoretical work that is consistent with doing what you call (i) - (iii) and does not involve a lot of guesswork. After all, a lot of theoretical work is empirically informed, even if it's not itself intended to gather new data. And a lot of this theoretical work is quite decision relevant. A simple example is effective altruism itself: early work in EA was empirically informed theoretical work. Another example that's close to my heart is value of information work. There are existing problems in how to identify high and low value of information, when to explore vs. exploit, and so on. I suspect that doing empirically informed theoretical work on these questions would be more fruitful than trying to solve them through empirical means only. So my inclination is to take this on a case-by-case basis. We see radical leaps forward sometimes being generated by theoretical work and sometimes being generated by novel empirical discoveries. It seems odd not to draw from two highly successful methods.

What, then, about pure a priori work like mathematics and conceptual work? I think I agree with Owen that this kind of work is important for building solid foundations. But I'd also go further in saying that if you find good, novel foundational work to do, then it can often bear fruit later. E.g. work in economics and game theory is of this sort, and yet I think that a lot of concepts from game theory are very useful for analyzing real world situations. It would have been a shame if this work had been dismissed early on as not decision relevant.

I don't think I'm arguing what you think I'm arguing. To be clear, I wouldn't claim a bright dividing line, nor would I claim that more philosophical work, or pure mathematics, has no use at all. Nor would I claim that we should avoid theory altogether. I agree that there are cases of theoretical work that could be useful. For example, there is AI safety, and there may be some important crossover work to be done in ethics and in understanding human experience and human values. But that doesn't mean we just need to throw up our arms and say that everything needs to be taken on a case-by-case basis, if in fact we have good reasons to say we're overall overinvesting in one kind of research rather than another. The aim has to be to do some overall prioritization.

I agree that thinking about exploration vs. exploitation tradeoffs is both interesting and useful. However, the Gittins index was discovered in 1979. Much of the payoff of this discovery came decades afterward. We have good reasons to have pretty high discount rates, such as i) returns on shaping research communities that are growing at high double-digit percentages, ii) double-digit chances of human-level AI in the next 15 years.

There's very little empirical research going into important concrete issues that many important decisions of EAs will depend on, such as how to stage useful policy interventions for risky emerging technologies (Allan Dafoe, Mathias Mass notwithstanding), how to build better consensus among decision-makers, how to get people to start more good projects, how to recruit better, etc. It's tempting to say that many EAs have wholly forgotten what ambitious business plans and literature reviews on future-facing technologies are even supposed to look like! I would love to write that off as hyperbole, but I haven't seen any recent examples. And it seems critical that theory should be feeding into such a process.

I'd be interested to know if people have counterconsiderations on the level of what should be a higher priority.

There are two different claims here: one is "type x research is not very useful" and the other is "we should be doing more type y research at the margin". In the comment above, you seem to be defending the latter, but your earlier comments support the former. I don't think we necessarily disagree on the latter claim (perhaps on how to divide x from y, and the optimal proportion of x and y, but not on the core claim). But note that the second claim is somewhat tangential to the original post. If type x research is valuable, then even though we might want more type y research at the margin, this isn't a consideration against a particular instance of type x research. Of course, if type x research is (in general or in this instance) not very useful, then this is of direct relevance to a post that is an instance of type x research. It seems important not to conflate these, or to move from a defense of the former to a defense of the latter. Above, you acknowledge that type x research can be valuable, so you don't hold the general claim that type x research isn't useful. I think you do hold the view that either this particular instance of research or this subclass of type x research is not useful. I think that's fine, but I think it's important not to frame this as merely a disagreement about what kinds of research should be done at the margin, since this is not the source of the disagreement.

You're imposing on my argument a structure that it didn't have. My argument is that, prima facie, analysing the concepts of effectiveness is not the most useful work that is presently to be done. If you look at my original post, it's clear that it had a parallel argument structure: i) this post seems mostly not new, and ii) posts of this kind are over-invested. It was well-hedged, and made lots of relative claims ("on the margin", "I am generally not very interested", etc.), so it's really weird to be repeatedly told that I was arguing something else.

The general disagreement about whether philosophical analysis is under-invested is the source of about half of the disagreement. I've talked to Stefan and Ben, and I think that if I were convinced that philosophical analysis was prima facie under-invested at the moment, then I would view analysis of principles of effectiveness a fair bit more favorably. I could imagine that if they became fully convinced that practical work was much more neglected, then they might want to see more project proposals and literature reviews done too.

Thank you for this article. My own concern is that I've personally had little access to guidance on movement building (in the Netherlands) from people more experienced/knowledgeable in this area. I've therefore had to try to understand the risks and benefits of considerations like EA's 'strictness' vs. the number of people it appeals to by myself. I don't think someone with a 'coordinator' role like me should be expected to rigorously compile and evaluate research on movement building by him or herself.

My default position with which I started last year was to get as many concrete EA actions happening immediately as possible (e.g. participants, donations, giving pledges, career changes, etc.) to create the highest multiplier that I could. What intuitively follows from that is to do lots of emotionally appealing marketing (and get on TV if possible). I've encountered other people at other local groups and national organisations who seemed to think this way at least in part (I'm probably exaggerating this paragraph slightly, also to drive home my point).

I'd like to point out that in the last year, EA Flanders, EA France, EA Australia and EA Netherlands (and probably others which I've missed) have launched. Also, the number of local groups still seems to be growing rapidly (I count 313 on EA Hubs, though some are missing and some are inactive). I think it would be a mistake to (implicitly) assume that the entrepreneurial people taking initiative here will come to conclusions that incorporate the currently-known risks and benefits to the future impact of the EA movement by themselves.

If the CEA Research Division is building an expertise in this area, I would suggest it starts giving the option to these individual leaders of grassroots local & national groups (with say member count >20) to do short Skype calls where it can share and discuss its insights on movement building as it's relevant in each local context.

I'd happily connect CEA researchers with other interested national group leaders to test this out (and be the first to sign up myself). Feel free to send me a quick message at remmeltellenis(at}gmail{dot)com (an alternative is to go through CEA Chapters/the Group Organisers call.)


Very insightful post. One note - I think reversibility considerations seem to count against some but not all political causes. Most obviously, they seem to count against taking sides on hot controversial political issues, such as EU membership or support for some specific political party. However, they don't count against low heat political issues. Some important ones include: more evidence-based policy, improving forecasting in government, changing metrics in health resource prioritisation etc.

Insightful post, thanks - researching movement-building strategy always throws up fascinating new concepts for the toolkit.

On a related note - this is a fascinating read (long but worth it): Dominic Cummings, the chief Brexit campaign strategist discusses the campaign, with lots of provocative points about option values, branching futures, unrecognised simplicities of effective action, and non-linearities of political progress. While I may not be a particular fan of his politics, he's a provocative and uncompromising thinker and perhaps has a few similarities to how EAs approach complex strategic questions (he's wary of ideology and grand historical teleologies, and is a fan of Tetlock and of quantifying wherever possible). Plus, he's a serious autodidact: after leaving government he spent two and a half years in a bunker he and his father built for him on their farm in Durham, reading science and history and trying to understand the world.

https://dominiccummings.wordpress.com/2017/01/09/on-the-referendum-21-branching-histories-of-the-2016-referendum-and-the-frogs-before-the-storm-2/