Summary

This is a response to the Windfall Clause proposal from Cullen O’Keefe et al., which aims to make AI firms promise to donate a large fraction of profits if they become extremely profitable. While I appreciate their valiant attempt to produce a policy recommendation that might help, I am worried about the practical effects.

In this article I argue that the Clause would primarily benefit the management of these firms, resulting in an increased concentration of effective wealth/power relative to a counterfactual where traditional corporate governance was used. This could make AI race dynamics worse and increase existential risk from AI.

What is the Windfall Clause?

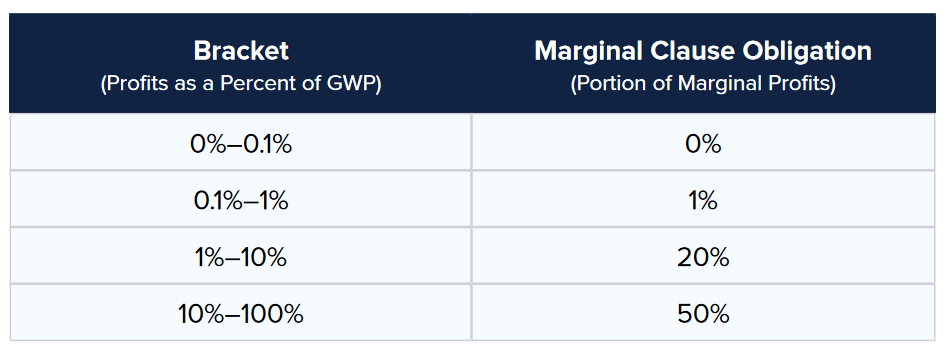

The Clause operates by getting firms now to sign up to donate a large fraction of their profits for the benefit of humanity if those profits become very large. The idea is that, right now, profits are not very large, so this appears a ‘cheap’ commitment in the short term. In the future, if the firm becomes very successful, they are required to donate an increasing fraction.

This is an example structure from the O’Keefe document:

Many other possible structures exist with similar effects. As an extreme example, you could require all profits above a certain level to be donated.
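To make the marginal structure concrete, here is a minimal sketch of how an obligation of this kind could be computed. The bracket thresholds and rates are hypothetical numbers chosen purely for illustration, not figures taken from the O’Keefe document, and the names `BRACKETS` and `windfall_obligation` are made up for this example.

```python
import math

# Hypothetical schedule: (bracket lower bound in $bn of annual profit, marginal rate).
# These values are illustrative assumptions, not the proposal's actual numbers.
BRACKETS = [
    (0.0, 0.00),
    (10.0, 0.01),
    (100.0, 0.20),
    (1000.0, 0.50),
]


def windfall_obligation(profit_bn: float) -> float:
    """Return the donation owed (in $bn) under the hypothetical marginal schedule."""
    owed = 0.0
    for i, (lower, rate) in enumerate(BRACKETS):
        # The top bracket has no upper bound.
        upper = BRACKETS[i + 1][0] if i + 1 < len(BRACKETS) else math.inf
        if profit_bn > lower:
            # Only the slice of profit that falls inside this bracket is charged at its rate.
            owed += (min(profit_bn, upper) - lower) * rate
    return owed
```

Under these assumed brackets, a firm with $2,000bn of profit would owe about $680.9bn, roughly 34% of the total, while a firm earning under $10bn would owe nothing. The 'all profits above a certain level' variant corresponds to a top marginal rate of 100%.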

Typical Corporate Governance

The purpose of a typical corporation is to make profits. Under standard corporate governance, CEOs are given fairly broad latitude to make business decisions. They can determine strategy, decide on new products and pricing, alter their workforces and so on with limited restrictions. If the company fails to make profits, the share price will fall, and it might be subject to a hostile takeover from another firm which thinks it can use the assets more wisely. Additionally, in the meantime the CEO’s compensation is likely to fall due to missed incentive pay.

The board also supplies oversight. They will be consulted on major decisions, and their consent is required for irreversible ones (e.g. a major acquisition or change of strategy). The auditor will report to them so they can keep apprised of the financial state of the company.

The amount of money the CEO can spend without oversight is quite limited. Most of the firm’s revenue probably goes to expenses; of the profits, the board will exercise oversight over decisions around dividends, buybacks and major acquisitions. The CEO will have more discretion over capital expenditures, but even then the board will have a say on the total size and general strategy, and all capex will be expected to follow the north star of future profitability. A founder-CEO might retain some non-trivial economic interest in the profits (say 10% if it was a small founding team and they grew rapidly with limited need for outside capital), which is truly theirs to spend as they wish; a hired CEO would have much less.

How does the clause change this?

In contrast, the clause appears to give a lot more latitude to management of a successful AGI firm.

Some of the typical constraints remain. The firm must still pay its suppliers, and continue to produce goods and services that others value enough to pay for them more than they cost to produce. Operating decisions will remain judged by profitability, and the board will continue to have oversight over major decisions.

However, a huge amount of profit is effectively transferred from third party investors to the CEO or management team. They go from probably a few percent of the profits to spend as they wish to controlling the distribution of perhaps half. Additionally, some of this is likely tax deductible.

It is true that the CEO couldn’t spend the money on personal yachts and the like. However, extremely rich people often have significant political interests, and these would seem to be in-scope for the Clause. If you are a Warren Buffett or Peter Thiel or Mark Zuckerberg or Elon Musk or George Soros, having control of a Windfall Account that can only be spent on ‘philanthropic’ purposes is roughly as good as having the money personally.

The clause says that this money should be spent for the benefit of all humanity. But this is very subjective, and I doubt ‘all humanity’ would be able to bring a class action lawsuit here. In practice, it would likely be up to management to distribute the spoils.

In theory the board might provide some oversight. However, in practice this doesn’t seem like that much of an improvement - it’s not like corporate boards are really designed for this purpose, and many technology startups have relatively tame boards due to supervoting shares and the like.

The Clause proposal discusses some restrictions (p13), but in practice I think they would not work. Some of them are simply absurd, like all countries getting an equal amount of money regardless of population. Others, like fiduciary duties or transparency measures, are more appropriate for avoiding self-dealing and personal enrichment than for ensuring substantive benefits. Finally, the requirement that spending be ‘effective’ seems like it would be meaningless in practice due to subjectivity - or perhaps the authors simply hoped that they or their allies would get to be the ones determining what is effective.

Alas, when evaluating such a policy, we should not assume that we will be the ones given control of the money.

Concentration of Wealth

This seems like it could result in a significant concentration of influence.

A typical public company has a very broad investor base; almost everyone who has invested in an index fund owns a bit of GOOGL and MSFT, for example. Even private firms will typically have venture capital investors, which in turn might have pension funds, insurance funds, etc. among their investors. The clause effectively expropriates a fraction of the profits which would otherwise accrue to this broad group, and transfers it to the CEO.

Given the size of the clause, it seems plausible to me that, conditional on a firm triggering the highest thresholds, this clause could be the largest transfer and effective concentration of wealth in history.

Aggravating Race Dynamics

One of the primary motivations behind the Clause is to reduce race dynamics, by both reducing the rewards to first place (as they will be taxed by the clause) and increasing the rewards to second place (as they will benefit from the windfall). If this were the case, it could be worthwhile even if it resulted in a significant concentration of power; it is much better to have some trillionaires in a world made rich by AI servants than for everyone to be dead.

However, if it is true that the primary effect of the Windfall Clause is to concentrate money/power in the hands of AGI startup CEOs, this argument will likely not work. Rather, from the point of view of the CEOs, the spoils available to winning are now even larger than before, because you will get a massive slush fund for political pet projects, and the losers will not even get the consolation prize of their 401(k) doing so well. To many tech CEOs, “your competitor will be able to spend trillions of dollars on the stuff he cares about while you become irrelevant” is very far from reassuring!

This is even worse when you consider that many tech CEOs are very politically active and often disagree with each other. Depending on your ideology, preventing Soros/Thiel from winning the race potentially seems much more important than beating IBM’s diversified investor base.

So overall my guess is that the Clause encourages a destructive race to AGI and worsens prospects for safety.

How could we fix this?

One approach would be to fix the beneficiaries of the windfall in advance, rather than leaving it to the subsequent discretion of management. If all firms chose the same beneficiaries, the incentive to race would be significantly reduced. This also potentially reduces rent seeking in the future.

Who could this be? There are a variety of options, none of which seem ideal.

- Minority investors without a vote or legal right to profits
  - Special share class?
  - Effectively be a normal company in most ways.
  - Don’t base management compensation on distributions to this class.

- Investors in all AGI firms
  - Potentially reduces race incentives.
  - Probably antitrust violation.

- Buying bitcoin
  - Effectively makes BTC owners economic investors without rights.
  - Anyone can buy in, easy to implement.
  - Wastes a lot of electricity.

- Invest in global stock market, never redeem
  - Effectively a subsidy for global growth.
  - Wealth transfer to investors.

- Put it in a bank account, never use it.
  - Ignoring some issues caused by financial stability regulations, effectively reduces inflation / wealth transfer to USD owners.

- All people, equally
  - GiveDirectly style.
  - Could be hard to implement.
  - Important that this is a cash transfer, not just ‘spend on behalf of’.

- The US Federal Government
  - Aligns incentives with global hegemon.
  - Relatively benevolent by the historical standards of governments.
  - Quite wasteful.
  - Somewhat arbitrary dividing line.

- All Americans, equally
  - Some random blogger suggested this a while ago.
  - Easier than global.
  - Somewhat arbitrary dividing line.

- The UN
  - Theoretically represents most people in the world.
  - Quite wasteful.

- King Charles III
  - Centuries of family experience being trusted with extreme power and not using it.

- Give it to me
  - Clear Schelling point to solve the problem via the person who first noticed it.
  - I will spend it wisely.
  - Advantages too numerous to list, few drawbacks.
  - My personal favourite option.

Is this just Against Billionaire Philanthropy again?

Many people are critical of billionaires giving to charity, and this argument has some features in common with their argument. If by some chance you haven’t come across this argument before, Scott’s article on the subject is a reasonable starting point.

I believe this argument is distinct from that. Specifically, in contrast to the typical argument, I believe:

- Many billionaires (in the US etc.) came by their money in morally neutral or positively laudable ways.

- Policy should not have reducing the number of billionaires as an object.

- Billionaire philanthropy does a lot of good in the world and should be praised.

Where this post differs is:

- While we shouldn’t try to reduce the number of billionaires relative to a ‘neutral’ free market outcome, we shouldn’t proactively try to increase it either.

- This policy uniquely worsens AI race dynamics in a manner not typical of most billionaire philanthropy.

- The primary concern is reducing existential risk, not egalitarianism.

Of course, if you were concerned about billionaire philanthropy, you should be even more convinced by this argument!

Conclusion

By default the Windfall Clause could lead to a huge concentration of money and influence in the hands of AI CEOs/Management, and reduce incentives for AI safety by accelerating race dynamics. This effect could be partly ameliorated if AGI firms could all agree on the precise beneficiaries ahead of time.

Addendum: Could the clause drive AI winners to bankruptcy?

This is a very trivial point that I include here only as a curio.

The clause is clear that it refers to pre-tax profit:

Profits: income before income taxes. (p4)

Furthermore, the US tax code limits the tax deductibility of corporate charitable donations in typical years to 10% of taxable income.

In the event of AGI, it seems quite plausible that governments might try to expropriate the profits and raise corporate taxes a lot. Because of this, for sufficiently high tax rates and Clause obligations, there is no guarantee that their sum will be less than 100% of profit.

This issue, at least, could be easily avoided by changing the definition to refer to income after income taxes. This is a more typical definition.
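The arithmetic behind this addendum can be sketched as follows. This is a deliberately simplified model under stated assumptions: a single flat corporate tax rate, a single effective Clause rate, and a deduction capped at 10% of pre-tax profit. The function names and the example rates are hypothetical.

```python
def combined_burden_pretax(clause_rate: float, tax_rate: float,
                           deduction_cap: float = 0.10) -> float:
    """Tax plus Clause donation, as a share of pre-tax profit,
    when the Clause is defined on pre-tax profit."""
    # Only donations up to the cap reduce taxable income (simplifying assumption).
    deductible = min(clause_rate, deduction_cap)
    tax = tax_rate * (1.0 - deductible)
    return clause_rate + tax


def combined_burden_posttax(clause_rate: float, tax_rate: float) -> float:
    """Same share when the Clause is instead defined on income after income taxes.
    Deductibility is ignored here to keep the sketch simple."""
    return tax_rate + clause_rate * (1.0 - tax_rate)
```

With a hypothetical 50% effective Clause rate and a 60% corporate tax rate, the pre-tax definition gives a combined burden of 104% of profit, while the after-tax definition gives 80%. Under the after-tax definition the total stays below 100% whenever both rates are below 100%.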

Thanks to everyone who gave feedback on this document; all mistakes remain my own.

I hadn't looked into the details of the Windfall Clause's proposed execution and assumed it was prescribing something closer to GiveDirectly than "CEO gets to direct it personally." "CEO gets to direct it personally" does seem obviously bad.

How much of the objection would be fixed if the Windfall Clause required the donations to be under the board's oversight?

Good question!

My guess is not that much, though it depends on the details.

In a traditional corporation, the board is elected by the shareholders to protect their interests. If everyone is attentive, it seems like the shareholders might start voting partly based on how the board members would influence the windfall. You could imagine political parties nominating candidates for the board that shareholders would choose between depending on their ideology as well as their expertise with regard to the object-level business of the firm. If this is the case, it seems we've basically reverted to a delegated democracy version of shareholder primacy where shareholders effectively get part of their dividend in the form of a pooled DAF vote.

If directors/shareholders act with a perhaps more typical level of diligence for corporate governance, I would expect the board to provide a check on the most gross violations (e.g. spending all the money on yachts for the CEO, or funding Al Qaeda) but to give the CEO and management a lot of discretion over PlayPumps vs AMF or opera vs ACLU.

In practice, the boards of many tech startups seem quite weak. In some cases the founders have super-voting shares; in other cases they are simply charismatic and have boards full of their friends. You can verify this for many of the large public tech companies; I don't know as much about governance at the various LLM startups but in general I would imagine governance to be even weaker by default. In these cases I wouldn't expect much impact from board oversight.

More as food for thought... but maybe "broad investor base" is a bit of an exaggeration? Index funds are likely to control a significant fraction of these corporations, and it's unclear if the board members they appoint would represent ordinary people. Especially when owning an ETF != owning the actual underlying stocks.

From an old comment of mine:

This is a cool point, thanks for making it.

Can't this be fixed by just stating that the windfall is distributed by shareholders on a pro rata basis?[1]

Also, if I'm understanding Appendix II of the original report correctly, it seems like the proposals all involve distributing the windfall to a trust which the AI developer does not have control of. I guess your point is that e.g. OpenAI might distribute its windfall to "the trust for buying Sam Altman yachts" or something, but I think it is per se incorrect to describe the CEO as having control over spending the windfall?

"Fixed" in scare quotes - I'm not sure it's actually better for the world to have random VCs spending the money than CEOs of AGI companies.

Thanks for the suggestions!

That would be an improvement in terms of CEO race incentives yes, although it would reduce the distinctiveness of the clause significantly. For shareholders who already give to charity, fungibility of money means this might be simply equivalent to no Clause for them.

Yes, I guess here I'm really critiquing 'what I think attempts to implement the Windfall Clause in practice look like right now'. I'm not aware of any firm which has signed up to a Windfall Clause or similar having actually implemented an associated legally binding trust; even if they intended to do so, the CEO and management team would presumably have a huge amount of discretion about its makeup.

I appreciate the red-team of a commonly-discussed AI governance proposal. I'm confused about the section on race dynamics:

How are the spoils of winning larger under the Windfall Clause? I suppose the spoils of being part of the Windfall Clause are large (i.e., if the top 20 labs get to participate in the clause, then it's really important to be in the top 20). But it seems to me like the dangers of race dynamics are primarily concentrated in the desire to be in 1st place; that is, the top 3-5 actors racing really hard against each other seem like the thing that actually causes people to develop/deploy systems prematurely.

The 401(k) point seems very confusing to me. I think you mention somewhere that "many people own some Microsoft and Google", but this seems pretty unpersuasive. The Rich and Powerful People who own way more Microsoft and Google than everyone else are the ones who will disproportionately benefit. If people who own 0.0000000000000000001% of Microsoft are going to be able to influence the post-TAI future, I don't think it's going to be because of their investment portfolio (but maybe it'll happen if the people who are in control of TAI do something like deliberative democracy).

In summary, I don't see how the Windfall Clause reduces race dynamics (particularly the racing between the top 1-5 actors, which is where I expect most of the danger comes from). When I imagine myself being one of the top 5 actors, I feel more comfortable "losing the race" if I've signed a Windfall Clause than if I'm relying on traditional corporate governance.

I think the claim is:

Yup, this is a good summary.

Ah, thanks for the summary of the claim. Apologies for misunderstanding this in my initial skim.

I agree with Rameon's comment.

I think Ben West correctly captured my primary argument, so I'll just address a minor subpoint.

Firstly, I'm not sure where you're getting '0.0000000000000000001%'. MSFT has a total share count of around 7.5bn, so if you own a single share ($248.59 at time of writing) that's over 100,000,000,000x more than you suggest. Even if you own a single share of an S&P500 ETF, and hence only a fractional share of MSFT, you're still off by many orders of magnitude.
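For anyone who wants to check the arithmetic, here is a quick sketch. The share count is the rough figure given above, and the quoted percentage is read as 1e-19 percent, which is an assumption about how the string of zeros should be counted.

```python
SHARES_OUTSTANDING = 7.5e9  # approximate MSFT share count, as stated above
QUOTED_PERCENT = 1e-19      # assumption: numeric reading of the quoted '0.000...1%'


def ownership_percent(shares_owned: float) -> float:
    """Percentage of the company represented by the given number of shares."""
    return shares_owned / SHARES_OUTSTANDING * 100.0


# How many times larger a single share is than the quoted stake.
ratio = ownership_percent(1) / QUOTED_PERCENT
```

On these numbers a single share is roughly 1.3e-8 percent of the company, so `ratio` comes out a little above 1e11.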

Secondly, it's unclear which of two points in the post you're referring to, partly because instead of actually quoting a section of the post you instead made up a fake quote.

One argument, about egalitarianism, mentions GOOGL and MSFT:

This argument is a relative argument. With over half of all Americans having some exposure to the stock market, even though the distribution is far from equal, it is far less concentrated than a world where the CEO directly controls the windfall.

I don't think this is a super important argument though, as I mention, because I am a lot more concerned about AI race dynamics than egalitarianism.

The second argument, about CEO incentives and race dynamics, mentions 401(k)s:

I expect AGI firm CEOs, who are the people whose incentives we primarily care about, to be reasonably wealthy, either from previous entrepreneurship or just compensation from the job, so they would likely have significant wealth invested in the stock market. The "Rich and Powerful People who own way more Microsoft and Google than everyone else [and] are the ones who will disproportionately benefit" include the competitor CEOs (and VCs, management teams, etc.) whose incentives determine how bad AI races will be.

This argument is not about egalitarianism or fairness or inclusion; it is simply about race dynamics. For this purpose, inequality is actually advantageous, as it increases the rewards to the most influential actors for not racing.

But I don't actually regard either of these points as the key part of the overall argument.

What if it's paid as tax to a democratically elected world government?