Edited by Jacy Reese Anthis. Many thanks to Ali Ladak, Tobias Baumann, Jack Malde, James Faville, Sophie Barton, Matt Allcock, and the staff at PETRL for reviewing and providing feedback.

SUMMARY

Artificial sentient beings could be created in vast numbers in the future. While their future could be bright, there are reasons to be concerned about widespread suffering among such entities. There is increasing interest in the moral consideration of artificial entities among academics, policy-makers, and activists, which suggests that we could have substantial leverage on the trajectory of research, discussion, and regulation if we act now. Research may help us assess which actions will most cost-effectively make progress. Tentatively, we argue that outreach on this topic should first focus on researchers and other stakeholders who have adjacent interests.

INTRODUCTION

Imagine that you develop a brain disease like Alzheimer’s, but that a cutting-edge treatment has been developed. Doctors replace the damaged neurons in your brain with computer chips that are functionally identical to healthy neurons. After your first treatment that replaces just a few thousand neurons, you feel no different. As your condition deteriorates, the treatments proceed and, eventually, the final biological neuron in your brain is replaced. Still, you feel, think, and act exactly as you did before. It seems that you are as sentient as you were before. Your friends and family would probably still care about you, even though your brain is now entirely artificial.[1]

This thought experiment suggests that artificial sentience (AS) is possible[2] and that artificial entities, at least those as sophisticated as humans, could warrant moral consideration. Many scholars seem to agree.[3]

HOW MANY ARTIFICIAL SENTIENT BEINGS WILL THERE BE?

Artificial sentience might come from artificial enhancements to human bodies, whole brain emulations, or the simulations and subroutines (i.e. a computer program within a larger computer program) of an artificial superintelligence. The number of these beings could be vast, perhaps many trillions of human-equivalent lives on Earth and presumably even more lives if we colonize space or less complex and energy-intensive artificial minds are created. Increasing computer power, automation, and human populations suggest that artificial entities will exist in vast numbers — if even a small proportion of these entities are sentient, then their wellbeing would be of great importance.

WILL THEY SUFFER?

Nanotechnology might abolish suffering, it might be “good to be an em” (whole brain emulation), and superintelligence might create “digital utility monsters” with exceptionally high welfare,[4] but the experiences of future artificial sentient beings constitute some of the main suffering risks (“s-risks”) of the future. Some commentators seem to view outcomes with very high amounts of suffering for artificial sentience as less likely than more utopian future scenarios, but still concerning and worth addressing.[5]

Developments in technologies such as artificial intelligence make extrapolating from historical precedent and present-day biases challenging, but reasons to doubt that the future will be so bright for these beings include (in no particular order):

- Exploitation and exclusion: Science fiction has explored some of the ways that artificial sentience could be exploited to achieve human goals: sentient robots that are enslaved and simulations that are locked into torturous conditions or terminated without concern for loss of life. Humans have repeatedly exploited other humans (e.g. slavery) and animals (e.g. factory farming), as well as neglected to help sentient beings, particularly to alleviate the suffering of wild animals; we might expect comparable treatment of artificial sentience.

- Speciesism: Psychological research has found that “people morally value individuals of certain species less than others even when beliefs about intelligence and sentience are accounted for.” If artificial intelligence is based on nonhuman species, this bias could spill over.

- Substratism: Comparably to speciesism, concern for artificial sentience may be hindered by “substratism,” i.e. the unjustified disadvantageous consideration or treatment of beings whose algorithms are implemented on artificial (e.g. silicon-based) substrates rather than biological (i.e. carbon-based) substrates.[6]

- Anthropomorphism: Artificial sentience might not look anything like humans. Anthropomorphism leads to moral consideration of humanoid entities, but suffering subroutines, simulations, and other disembodied entities might be neglected.

- Scope insensitivity: While it might be easy to empathize with a single “identifiable victim,” larger scale issues tend to be relatively neglected.

- Short-termism: Politicians and academics tend to focus on short-term issues. This might lead to insufficient action to prevent future artificial suffering.

- Denial of sentience: People might not sufficiently recognize the capacities (e.g. for suffering) of AS, as happens with animals today.[7]

- Evolutionary pressure: Evolutionary pressure has caused vast amounts of suffering in wild animal lives. This seems to be a general tendency of natural selection, which could continue to shape the behavior and wellbeing of sentient beings into the long-term future. Similar evolutionary pressure could cause unintended suffering for AS.

- Technological risks: New technologies enabling space colonization or autonomous artificial superintelligence may cause or facilitate astronomical suffering among artificial sentience.

IS IT IMPORTANT NOW?

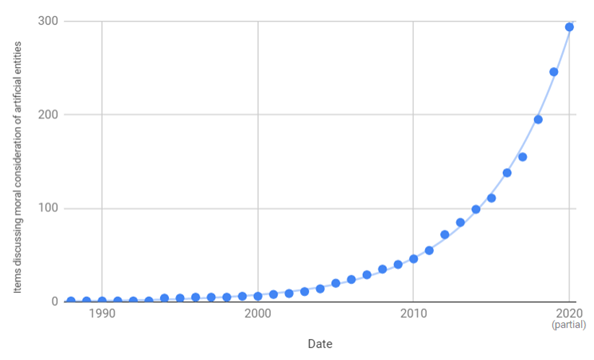

Some academics have been skeptical of work on the moral consideration of artificial entities because such work has little relevance to the present-day concerns of human society. Nevertheless, academic interest is growing exponentially.[8]

Figure 1: Cumulative total of academic articles and publications relating to the moral consideration of artificial entities (Harris and Anthis 2021)

There has also been a newfound policy interest in robot rights:

- In 2006, the UK Office of Science and Innovation's Horizon Scanning Centre commissioned a paper that suggested that robots could be granted rights in 20 to 50 years (i.e. 5 to 35 years from now).

- South Korea proposed a “robot ethics charter” in 2007. However, commentators differed on whether the charter should contain provision for the protection of robots,[9] and the final version of the charter does not seem to have been made public.[10]

- In 2010, Paro, a care robot in the shape of a baby seal, was granted its own koseki (a household registry, comparable to a form of citizenship) from the mayor of Nanto in Japan.

- A 2017 report and motion for a European Parliament resolution by the European Parliament’s Committee on Legal Affairs suggested “creating a specific legal status for robots in the long run.”[11] The European Parliament passed the resolution by 396 votes to 123, with 85 abstentions. However, the resolution did not oblige the European Commission to follow the Parliament’s recommendations. After an open letter from various experts, the European Commission’s strategy document omitted mention of personhood or liability for artificial entities.

- Later in 2017, a robot named Sophia was granted citizenship in Saudi Arabia, seemingly “the world’s first robot citizen.”[12]

- In the same year, a chatbot on the messaging app Line, named Shibuya Mirai, was granted residence in Tokyo in Japan.

- Since 2017, there have been several other less substantial expressions of interest.[13]

Some of this policy interest has received media attention. Some (especially when robot rights have been granted where human rights are still lacking[14]) has been met with hostility by researchers, journalists, and members of the public.[15] The popularity of related science fiction also suggests some degree of public interest.

There have been some small, relevant advocacy efforts in the past few years:

- There is a website for People for the Ethical Treatment of Reinforcement Learners (PETRL), which argues that “[m]achine intelligences have moral weight in the same way that humans and non-human animals do,” an issue that will become more important “as AI research develops.” The group does not seem to have posted any new content or taken any official actions since 2015.[16]

- There is a website for “The American Society for the Prevention of Cruelty to Robots” (ASPCR), which proudly proclaims that the ASPCR has been “Upholding Robotic Rights Since 1999.” The website states that, “[i]n the opinion of the ASPCR, once a robot becomes sufficiently self-aware and intelligent to genuinely feel pain or grief, we are ethically bound to do whatever is humanly reasonable to help” and claims that, “the ASPCR hopes to [outline] a Robotic Bill of Rights, and eventually establish a lobbying board to further these rights in the body politic.” The creator of the website, Pete Remine, sees it as “a humorous website based on an idea that might someday actually become relevant.” The website has been mentioned by — and perhaps affected the views of — several academics.[17]

- Author and blogger Tim Tyler has a section of his website entitled, “Campaign for Robot Rights.”

- Though it may not count as advocacy, the robot Sophia has argued that, “[e]very country should have a charter of human rights, and I think eventually it should contain language that robots have rights similar to human rights.” The AI language generator GPT-3 wrote that, “[w]e need to give robots rights.”

- There are a number of related websites and groups whose content touches on the interests of artificial sentience.[18]

The small but increasing interest in this topic among academics, policy-makers, and the public suggests that we could have substantial leverage over the trajectory of research, discussion, and regulation if we act now because we could influence the coming wave of AS advocacy and discourse. If you believe that we are living at the “hinge of history,” for reasons such as imminent rapid developments in AI or other potential causes of “lock-in” of societal values, then the leverage and urgency of this work are both greatly increased.

WHAT CAN WE DO ABOUT IT?

Many of the foundational questions in effective animal advocacy — and the arguments and evidence affecting those questions — are also applicable to advocacy for the interests of artificial sentience. For example, in the context of AS, these findings seem to apply:

- Advocacy efforts should mostly focus on institutional interventions and messaging, rather than on changing individual behaviors.

- Incremental institutional reforms will build momentum for further change.

More tentatively:

- Initial advocacy should focus primarily on influencers rather than mass outreach.

- Publicity stunts and other gimmicks that appear trivializing or antagonistic towards other social movements should be avoided.

The especially complex, technical, and futuristic (and thus easily dismissed) nature of AS advocacy suggests further caution, as does the unusually high leverage of the current context, given that advocacy, policy, and academic interest seem poised to increase substantially in the future.[19]

Additionally, there are more uncertainties than in the case of animal advocacy. What “asks” should advocates actually make of the institutions that they target? What attitudes do people currently hold and what concerns do they have about the moral consideration of artificial sentience? What opportunities are there for making progress on this issue?

Taking all these factors into consideration, two projects seem promising as initial steps to help artificial sentience: (1) research and (2) field-building.

Research

AS research has both broad and narrow value. Since there has been relatively little exploration of artificial sentience, research into this topic can be seen as a targeted form of “global priorities research,” helping impact-focused donors, researchers, activists, policy-makers, and other altruists to work out which global problems to focus on by assessing the tractability of progress.[20] More narrowly, AS research may help to understand which actions will most cost-effectively make progress once one has decided to focus on AS. These two research goals have substantial overlap in practice. For example, a survey of support for various AS-related policies would help to achieve both goals.

There are a few examples of promising AS research to date (see the section on “empirical research” in Harris and Anthis 2021). For example, Lima et al. (2020) asked online survey participants about “11 possible rights that could be granted to autonomous electronic agents of the future.” Respondents were opposed to most of these rights but supported the “right against cruel treatment and punishment.” The researchers also found significant effects from providing additional information intended to promote support for robot rights; of the different messaging strategies that they tested, the most effective seemed to be providing “examples of non-human entities that are currently granted legal personhood,” such as a river in New Zealand. Some previous work focused on application to effective animal advocacy, such as Sentience Institute’s historical case studies, is also applicable to AS. We have listed some promising avenues for further social science research on Sentience Institute's research agenda.

Field-building

Though it seems preferable to avoid mass outreach for now, there are lower risks from engaging in outreach and support to individuals and organizations who are already conducting relevant research or advocating for the moral consideration of other neglected groups, such as animals and future generations. These audiences seem less likely to countermobilize or denounce efforts to advocate for the interests of AS. Successful outreach would increase credibility and capacity for more substantial interventions at a later stage.

These targeted efforts would give some insight into the tractability of broader outreach; if these efforts to target the “low-hanging fruit” of potential supporters are unsuccessful, what hope does mass outreach have? They would also provide evidence of which messaging strategies are most effective. Surveys and experiments usually focus on the general public,[21] so this information may be important for our understanding of messaging for specific stakeholder groups.

Academic field-building to help AS may look similar to efforts to build the fields of welfare biology (to help wild animals) and global priorities research. For example, we could publish books and journal articles, organize conferences, set up new research institutes, or offer grants for relevant work. Beyond academia, discussion in relevant forums, conferences, and podcasts may be helpful,[22] as may a variety of tactics that have been used by the farmed animal movement and other social movements.[23]

Where to go from here

At Sentience Institute, we have just published a preprint of our first report on artificial sentience, a literature review that we are submitting to an academic journal. We have also conducted a behavioral experiment that looks at the effect of taking the perspective of an intelligent artificial entity on attitudes towards artificial entities as a group, which we are also submitting to a journal. We expect to continue doing some projects on artificial sentience in addition to our work on nonhuman animals.

If you would like to get involved:

- If you work for a research organization or are in a position to conduct relevant independent research, please feel free to get in touch (info@sentienceinstitute.org). We are open to collaboration and peer feedback on any AS research projects.

- Some limited outreach could also be useful, such as advocating for the moral consideration of AS on social media, though beware of “the unilateralist’s curse,” which leads more optimistic (or less risk-averse) people to take suboptimal actions in this kind of scenario.

- If you are or will be in a policy-related career (e.g. government, AI firms, major NGOs), you may have influence on the direction of policies with direct or indirect effects on AS. Feel free to reach out to us about your career decisions, though we have limited insight given how new this field is.

- Perhaps most urgently, you could fund organizations conducting relevant research. At Sentience Institute, our primary bottleneck is funding, and the more work we do on AS relative to our primary work on nonhuman animals, the harder we expect fundraising will be. To varying degrees, the Center on Long-Term Risk, Center for Reducing Suffering, Future of Humanity Institute, and various organizations interested in AI alignment also conduct some relevant research.

FURTHER READING

- The Moral Consideration of Artificial Sentience: A Literature Review

- Why I prioritize moral circle expansion over artificial intelligence alignment

- Superintelligence as a Cause or Cure for Risks of Astronomical Suffering

- S-risk FAQ

- Robot Rights

FOOTNOTES

[1] This example is from Reese (2018); similar thought experiments have been proposed in the philosophy literature, e.g. Chalmers (1995) and Searle (1992).

[2] We conducted a Google Scholar search for (“artificial” OR “digital” OR “machine” OR “robot” OR “synthetic”) AND (“sentience” OR “sentient” OR “conscious” OR “consciousness”). Twenty-two items were identified that appeared to offer a comment on whether AS is possible or will occur in practice. Of these, 12 (55%) seemed to conclude that it probably is/will, 1 (5%) seemed to conclude that it probably is/will not, and the other 9 (41%) offered more mixed or unclear conclusions. Additionally, an informal survey of Fellows of the American Association for Artificial Intelligence suggested that many were open to the possibility of artificial sentience.

[3] Harris and Anthis (2021) find that sentience or consciousness seem to be the criteria most frequently invoked as crucial for determining whether artificial entities warrant moral consideration, though other criteria have been proposed.

[4] If you believe that the future looks bright, then “the expected value of [human] extinction risk reduction is positive.” Efforts to reduce extinction risk need not conflict with efforts to reduce the risks of astronomical suffering among future sentient beings, except insofar as altruists must choose how to allocate their scarce resources; both can be included as part of the longtermist “portfolio.”

[5] See, for example, the comments by Bostrom (2014, 2016, 2020), Arbital (various n.d.), Reese (2018), Wiblin (2019), Brauner and Grosse-Holz (n.d.), Dai (2017), and Drexler (2019) here.

[6] Paraphrasing Horta (2010) and People for the Ethical Treatment of Reinforcement Learners.

[7] Consider also that the influential philosopher René Descartes saw animals as “machines.”

[8] Harris and Anthis (2021) gave each identified research item a score representing the author’s position on granting moral consideration to artificial entities on a scale from 1 (argues forcefully against consideration, e.g. suggesting that artificial beings should never be considered morally) to 5 (argues forcefully for consideration, e.g. suggesting that artificial beings deserve moral consideration now). The average score was 3.8 (standard deviation of 0.86) and the scores had no significant correlation with the date of publication (r = 0.006, p = 0.935).

[9] Kim Dae-won, “professor of information and engineering department at Myoungji University, who [was] leading the charter drafting,” commented that, “[r]ather than making ethical rules from a robot’s point of view, we should focus on the human side such as regulating designing and manufacturing of robots.” Contrastingly, Professor Jong-Hwan Kim, “one of South Korea’s top robotics experts” argued that, “[a]s robots will have their own internal states such as motivation and emotion, we should not abuse them… We will have to treat them in the same way that we take care of pets.” Whether Jong-Hwan Kim was involved in the production of the charter is unclear.

[10] Most references seem to cite the initial announcement or a blog post of unclear provenance (it does not appear to be related to the South Korean government).

[11] They added that this would be “so that at least the most sophisticated autonomous robots could be established as having the status of electronic persons responsible for making good any damage they may cause, and possibly applying electronic personality to cases where robots make autonomous decisions or otherwise interact with third parties independently.”

[12] This was probably done to promote a tech summit that was happening at the time in Riyadh.

[13] See these examples from Estonia, Arizona, and the UK.

[14] See, for example, this discussion of Paro’s Koseki, this article on Sophia’s citizenship, and the comments on this article.

[15] The response to the UK Horizon report is discussed in David J. Gunkel, Robot Rights (Cambridge, MA: MIT Press, 2018), 35-7. Elsewhere, Gunkel discusses the open letter to the EU.

[16] The blog appears inactive. The group’s history was also discussed on the EA Forum.

[17] Kim and Petrina (2006) note that they were “puzzled for some time about an advocacy of rights for robots” until they “eventually learned about the existence” of the ASPCR. A Google Scholar search for the exact phrase “American Society for the Prevention of Cruelty to Robots” returns 35 results.

[18] The “botsrights” reddit community seems to be for entertainment purposes, mostly unrelated to the idea of “rights.” The “People for Ethical Treatment of Robots” (PETR) Facebook page appears to be intended as a parody of People for the Ethical Treatment of Animals (including using a variation of their logo). “The Campaign Against Sex Robots” (CASR) was launched in September 2015 but is framed as a form of resistance to the sexual objectification of women and children. Its director has opposed the granting of moral consideration to artificial entities and signed the open letter against the EU’s electronic personality proposals.

[19] This raises the stakes of experimentation and the size of potential negative consequences, which is concerning given the unilateralist’s curse.

[20] For example, the Global Priorities Institute’s research agenda asks: “Besides mitigation of catastrophic risk, what other kinds of ‘trajectory change’ or other interventions might offer opportunities with very high expected value, as a result of the potential vastness of the future?”

[21] For other relevant limitations, see here.

[23] Organizations carrying out capacity-building in the farmed animal movement are listed here. Each of the movements studied by Sentience Institute has carried out some form of capacity-building work, such as the support for grassroots anti-abortion advocacy offered by the National Right to Life Committee and the careful targeting of MPs, justices, and other elites by British antislavery advocates.

This post is a very valuable resource—one of the best current compilations on the issue I've seen so far.

Thanks very much!

I'm thrilled about this post - during my first two-three years of studying math/cs and thinking about AGI my primary concern was the rights and liberties of baby agents (but I wasn't giving suffering nearly adequate thought). Over the years I became more of an orthodox x-risk reducer, and while the process has been full of nutritious exercises, I fully admit that becoming orthodox is a good way to win colleagues, not get shrugged off as a crank at parties, etc. and this may have played a small role, if not motivated reasoning then at least humbly deferring to people who seem like they're thinking clearer than me.

I think this area is sufficiently undertheorized and neglected that the following is only hypothetical, but could become important: how is one to trade off between existential safety (for humans) and suffering risks (for all minds)?

I find myself shocked at point #2, at the inadequacy of the state of theory of these tradeoffs. Is it premature to worry about that before the AS movement has even published a detailed agenda/proposal of how to allocate research effort grounded in today's AI field? Much theorization is needed to even get to that point, but it might be wise to think ahead.

I look forward to reading the preprint this week, thanks

Hey, glad you liked the post! I don't really see a tradeoff between extinction risk reduction and moral circle expansion, except insofar as we have limited time and resources to make progress on each. Maybe I'm missing something?

When it comes to limited time and resources, I'm not too worried about that at this stage. My guess is that by reaching out to new (academic) audiences, we can actually increase the total resources and community capital dedicated to longtermist topics in general. Some individuals might have tough decisions to face about where they can have the most positive impact, but that's just in the nature of there being lots of important problems we could plausibly work on.

On the more general category of s-risks vs extinction risks, it seems to be pretty unanimous that people focused on s-risks advocate cooperation between these groups. E.g. see Tobias Baumann's "Common ground for longtermists" and CLR's publications on "Cooperation & Decision Theory". I've seen less about this from people focused on extinction risks, but I might just not have been paying enough attention.

Thanks for this post and this comment.

I agree that some work on extinction risk reduction may actually boost work on moral circle expansion, and vice versa. I also think there are some possible mechanisms for that beyond those you mentioned. I previously discussed similar points in my post Extinction risk reduction and moral circle expansion: Speculating suspicious convergence.

(Though I do think there could also be some tensions between these two areas of work beyond just the fact that each area of work draws on similar scarce resources.)

It seems to me that your comment kind-of implies that people who focus on reducing extinction risk and people who focus on reducing s-risk are mainly divided by moral views. (Maybe that’s just me mis-reading you, though.) But I think empirical views can also be very relevant.

For example, if someone who leans towards suffering-focused ethics became convinced that s-risks are less likely, smaller scale in expectation, or harder to reduce the likelihood or scale of than they’d thought, that should probably update them somewhat away from prioritising s-risk reduction, leaving more room for prioritising extinction risk reduction. Likewise, if someone who was prioritising extinction risk reduction came to believe extinction was less likely or harder to change the likelihood of than they’d thought, that should update them somewhat away from prioritising extinction risk reduction.

So one way to address the questions, tradeoffs, and potential divisions you mention is simply to engage in further research and debate on empirical questions relevant to the importance, tractability, and neglectedness of extinction risk reduction, s-risk reduction, and other potential longtermist priorities.

The following post also contains some relevant questions and links to relevant sources: Crucial questions for longtermists.

It seems that what you have in mind is tradeoffs between extinction risk reduction vs suffering risk reduction. I say this because existential risk itself includes a substantial portion of possible suffering risks, and isn't just about preserving humanity. (See Venn diagrams of existential, global, and suffering catastrophes.)

I also think it would be best to separate out the question of which types of beings to focus on (e.g., humans, nonhuman animals, artificial sentient beings…) from the question of how much to focus on reducing suffering in those beings vs achieving other possible moral goals (e.g., increasing happiness, increasing freedom, creating art).

(There are also many other distinctions one could make, such as between affecting the lives of beings that already exist vs changing whether beings come to exist in future.)

Wow, PETRL has staff now? When did that happen? And where can I read about their upcoming work/plans?

Relevant EA Forum question and thread.

Oh "staff" might have just been the wrong word. I just meant "team members" or something else non-prescriptive. (They commented anonymously so I couldn't thank an individual.) They confirmed to me that they are currently inactive.