Epistemic status[1]: There’s consistent meta-analytic evidence for the interventions and models presented here. Still, that research is only one piece of your evidence-based decision-making. I’ve been directive for brevity’s sake. Use your judgement, adapt to the context, and let me know where you disagree in the comments.

Summary: we need to learn to teach better

Education is the #2 focus area for the Centre for Effective Altruism. Many of us educate, from field building to fundraisers, from coffees to conferences. As a result, I think we can do better by applying the meta-analyses on what works from educational psychology. We can use models and strategies that have been shown to work for hundreds of thousands of students, rather than feeling like community building is a whole new paradigm.

- I think the EA community can make better decisions, and track its progress more effectively, with an evidence-based theory of change for community building.

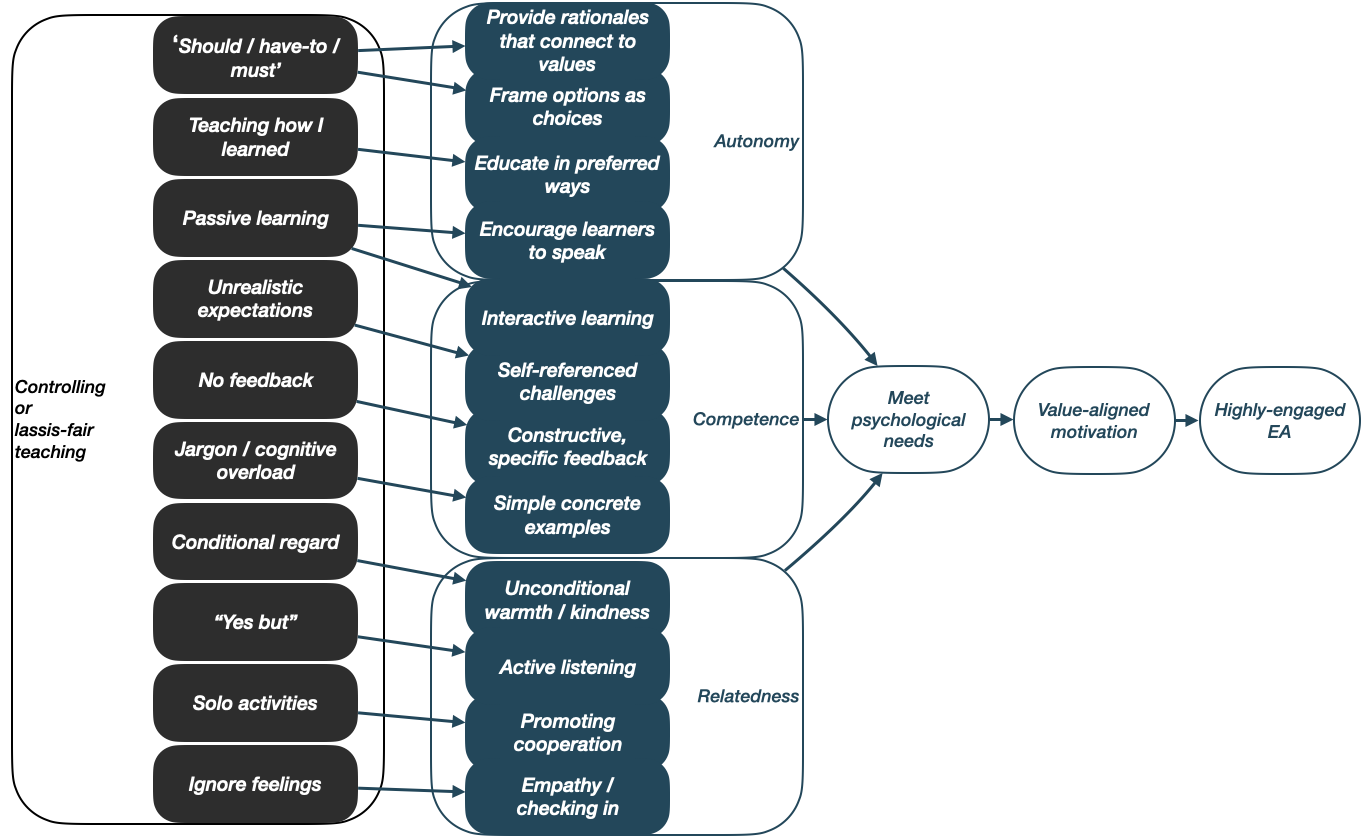

- One useful and robustly supported model, self-determination theory,[2] suggests we can be more effective if we help community members:

- Feel more competent by helping them develop valuable skills using evidence-based teaching strategies (rather than focusing so much on knowledge, especially via passive learning)

- Feel more autonomous by making learning more meaningful and aligning motivation with their personal values and goals (rather than using quite so much guilt and fear)

- Feel more connected by empathising and accepting their experiences, promoting collaboration, and providing relatable role models.

- Some of these ideas seem obvious, but the community is still affected by burnout, imposter syndrome, and perceived rejection. By better supporting psychological needs, we can mitigate the risks of people bouncing off or burning out.

- If we want to know how well our community building is working, I think measuring these needs will give us an upstream predictor of highly engaged EAs.

- Of these psychological needs, I think community-building efforts in EA struggle most with promoting feelings of ‘competence’, and neglect the long list of evidence-based strategies for building skills.

- Most efforts currently consist of reading, listening to talks, and engaging in discussions. There are far more effective methods of helping people learn valuable skills and aptitudes.

- Primarily, we should give people more time doing hands-on practice with the most important skills in an environment where they get constructive feedback. We should also learn to better use multimedia.

I appreciate and admire all those who do community building. If I identify any groups that could improve, it’s because I think they have the skills and track record to do an incredible amount of good. I want them to thrive, and I hope they see any feedback here as constructive.

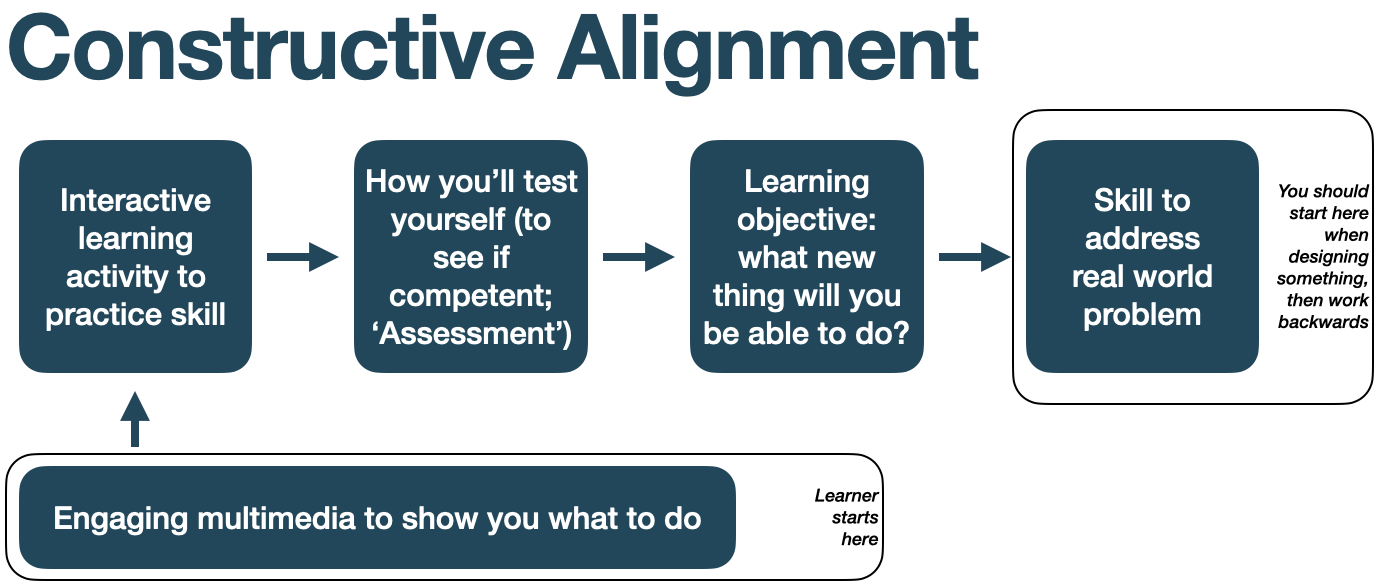

How to teach effectively, in five steps

For those of you who don’t care about the causal model, or the evidence, the following summary will explain what good teaching looks like. I think we could frame more community building events using these steps. Doing so will build capacity in the community, and build members’ motivation and engagement. This obviously isn’t everything we should be doing to build a community—social events, 1:1s, conferences, and reading groups still have their place. But, if we’re trying to build a capable and motivated group, we should try the following more often.

- Generally, work backward from some new skills you’re going to help people learn. These are your learning objectives. Knowledge is obviously good, but is most valuable and motivating when connected to important skills.

- For example, don’t say “learn about AI” but instead “make well-calibrated forecasts about the future of society” or “make career plans that do as much good as possible”

- Make these learning objectives explicit to the learners, so they know what you’re trying to achieve together

- For longer programs, consider assessing people’s competence at the end, in a way that helps both you and the learner know how you’re going. This is your ‘summative assessment.’

- For example, don’t just run a discussion group with no assessment, and avoid ‘knowledge tests’ like exams. Instead, have them do the skill (like make and justify some forecasts, make a career plan) and provide some feedback on how they’re going.

- If you’re teaching an important skill, then practising it in this way should be motivating. If learners aren’t motivated to do it, the skill might not be important enough, you might not have explained why it’s important, you might have set the bar too high, or you might have forgotten to craft formative activities.

- Craft ‘formative’ activities for them to practise the skill with you. This lets you give them feedback. You don’t learn to play tennis by watching tennis or talking about tennis. It’s hard to learn to volley for the first time by playing a real match. Show them how to volley, then have them hit lots of volleys, then make it progressively closer to a match.

- Match the formative assessments to the summative assessments (and therefore, the learning outcomes).

- Don’t just use discussions if you want people to finish your program by posting some research on the EA Forum.

- Piece these activities together so they add up to your ‘summative assessment.’ Don’t require skills on the summative assessment that you haven’t practised together.

- If you want people to post research on the Forum, break that skill down into parts. Practise each part together so you can provide feedback on their skills.

- Add variety to formative activities so they don’t get boring.

- Make them easier than you think they need to be. You have probably forgotten how hard it was to learn this skill. Make them progressively harder.

- Some formative activities are better than others. For example, at some point consider using concept maps, having peers work together, having them grade each other’s work, or having them simulate a professional role.


- Now think about what knowledge or demonstrations might help people first practise the skill. You can learn to better hit tennis balls by seeing someone show good technique (slowly), or by understanding why top-spin is good. Present new content in small bites that engage both the learner's eyes and ears.

- Our brains are built for multimedia that uses sight and sounds. So, in general, video is better than text + pictures, which is better than just text or sound.

- Make these simpler than you think they need to be. Use short sentences and simple language. As with skills, you’ve probably forgotten how hard it was to understand this knowledge the first time. Start with a concrete or relatable example (like ‘humans run the world, not chimps’) before moving to something abstract (like ‘AI could be catastrophic’).

- Make it really obvious where learners should focus. Don’t give them more than one thing to focus on at once. Don’t distract them with (too many) memes, jokes, and asides unless they directly contribute to the learning points.

- Empathise with misconceptions, but then correct them (like "many people think the top charities are about twice as impactful as the average one, but it turns out the number is closer to 100 or 1000 times"). By focusing your plan on correcting misconceptions, you're more likely to update beliefs rather than entrenching existing ones.

- Intersperse this knowledge with frequent opportunities to think, remember, and apply what you’re saying. Add little multiple choice questions, opportunities for discussion, or ask them to apply the idea immediately to a new example.

- Provide warm, encouraging, understanding role-models (including yourself).

- Take care with things that might induce guilt or fear. Show empathy for how people might be feeling (like “many people, including me, feel a pang of guilt with this thought experiment”). Check your values align (ask “what’s important to you?”). Provide them with options to better reach their goals (for example, “Things are less scary when we feel like we’re doing something to fix the problem. Here are some things I found helpful.”)

- Encourage people for the progress they’ve made rather than making them feel inadequate for not doing enough (“donating 1% to the best charities is amazing. You’re doing more good than most people.”)

- Have high expectations but take (at least some) ownership for supporting people to get there (“I know you can get a paper into NeurIPS. My job is to get you there.”; “I’m not sure I explained that very well, can you try to say it back to me?”)

- Paraphrase the learner’s position back to them before correcting any misconceptions (like “you’re saying that this is too sci-fi to be real. Is that right?”)

- Be careful how you spend your weirdness points: focus on the more relatable parts of your identity so you have enough social capital to push on the more important intuitions (for example, if your goal is to introduce longtermism to the general public, probably don’t also bring up donating 10% and veganism at the same time; instead, focus on a shared appreciation for promoting education, democracy, and protecting the climate).

- Tell stories of people, including yourself, who have embodied the values you’re aspiring to cultivate (like altruism, compassion, truth-seeking, collective growth, determination)

We need better models of community building so fewer people bounce off

Community building in EA is a precarious tightrope walk. We want to be truth-seeking but socially accepting. We want to challenge ideas without being adversarial, to push around ideas but not push around people. We want to be avant-garde while having many ideas adopted by mainstream institutions. We want to welcome weird thinking without looking so weird that promising people bounce off.

If you’re like me, you’ve seen many promising people bounce off. I’ve seen committed, vegan medical students who are earning to give bounce off their EA groups. People made Jeremy[3] feel he wasn’t doing enough to help others.

I feel the tension. I want Jeremy to see if he fits direct work too. We want to help people feel comfortable challenging their career plans. But, we also want value-aligned people like Jeremy to feel like they belong. If we don’t, then we won’t be able to help them challenge those plans.

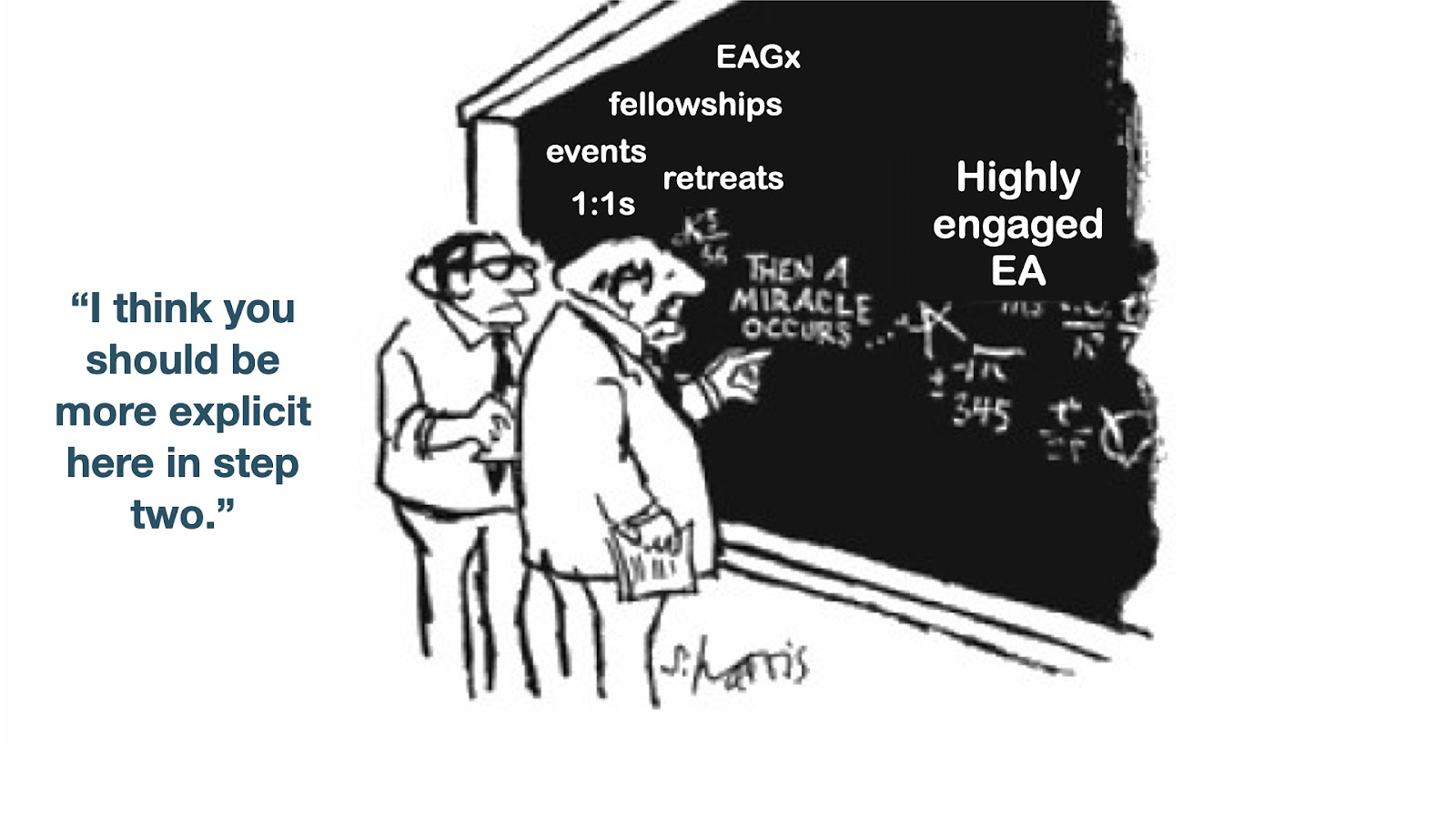

That EA group was doing all the right things. They were tabling, had a fellowship, were running intro events and doing 1:1s. But, I don’t think they knew what it meant to do those things well. Some groups measure outcomes: ‘people go from table to email list, and from email list to event, from event to fellowship’. But, if we only focus on outcomes then it’s hard to understand how to get better at the process. Why is Nikola better at tabling than Trevor? A better theory of change would help people know what they should be trying to improve. How do we create highly engaged EAs?

If we don’t know why some things work, how can we measure if it’s working, and how do we choose what to prioritise, or what to change?

A ‘highly engaged EA’ is someone who has internalised the values and knowledge of the EA community, and is autonomously motivated to act on them

If we want people to make clear, impartial, and prosocial decisions—like dedicating their time, their money, or their careers to the causes EAs care about—it can require sacrifices and emotional strength. To do that, I think we need:

- People who have internalised the values of EA (including the value to challenge ideas)

- People whose life goals align with the intrinsic values of EA (like wanting to have an impactful career, to build the community, do the most good through their donations)

- People who are motivated to act on those values for their own reasons, not out of guilt or pressure or to seek status, and

- People who feel the agency and confidence to take action on the basis of those values, rather than feeling paralysed by impending doom or intractable problems

- Ultimately, this likely leads to behaviours like choosing a career aligned with EA ideas, donating money to effective charities, and attending community events, but a ‘highly engaged EA’ doesn’t have to do all of these.

There are well-supported psychological theories that explain how people internalise values, how they set goals, and how they develop motivation, confidence and agency. In this post I’ll explain self-determination theory (or SDT) applied to these questions, and the data that supports SDT in application.

Self-determination theory is a very well supported model of how to develop motivation, agency, and confidence

SDT explains many motivational puzzles, and helps resolve several controversies in EA. What happens if we guilt people with the drowning child? Should we encourage or discourage people from identifying as ‘effective altruists’?

Importantly, the evidence for SDT is compelling. Here’s an abbreviated list of meta-analytic findings on SDT in practice. For reference, in psychology, a ‘large’ effect size is a correlation of 0.3 (r = .3, which explains about 10% of the variance [R² = .09]) or, for an intervention, an increase in an outcome of about 0.8 standard deviations (d = 0.8). These benchmarks help put the findings in perspective. I’ll explain any jargon in the following sections.

- The ‘autonomous’ or ‘self-determined’ types of motivation described in SDT robustly predict outcomes we care about. A meta-analysis of 223,209 students found self-determined forms of motivation have very large effects on engagement (r = .57), effort (r = .51), and enjoyment (r = .56). Effects on objectively measured academic performance are small but reliable (r = .1).

- We can build those ‘autonomous’ types of motivation by supporting psychological needs. Another meta-analysis of 79,000 students found that the level of ‘psychological need support’ explains about 30–40% of the variance in autonomous motivation. In terms of variance explained, that is 3 to 4 times the size of a ‘large’ effect in psychology.

- Educators can learn to support these psychological needs. A 10-year-old meta-analysis of 20 studies showed teachers can learn to become more supportive of psychological needs (d = 0.63) in 1–3 hours. In a meta-analysis of all interventions to improve motivation, those informed by SDT had the second-biggest effect sizes (d = .7 from 11 studies; the biggest was d = .74, from only 4 studies on ‘transformative experiences’).

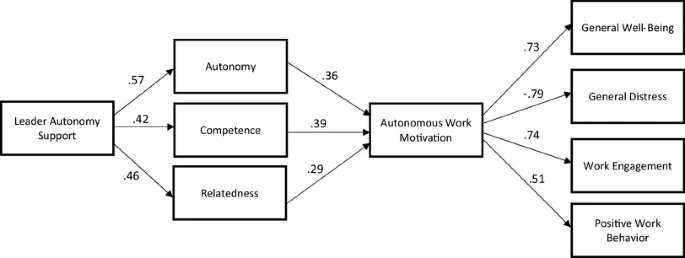

- The same patterns show up in leadership, health, and physical activity. SDT interventions change how supported people feel. Feeling supported makes people more motivated, engaged, and happy.

- For example, this graph from a meta-analysis of 32,780 employees shows SDT at work. Good leaders make their followers feel competent, connected, and in control. Those followers are then more motivated, happier, and perform better.

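To make the effect-size benchmarks above easier to compare, here is a minimal Python sketch of the standard conversions between a correlation (r), variance explained (R² = r²), and Cohen’s d. The r-to-d formula assumes two groups of equal size, so treat these as rough equivalences rather than exact ones: under that assumption, r = .3 corresponds to d ≈ 0.63, and d = 0.8 to r ≈ 0.37. The function names are illustrative only, not taken from any particular statistics package.

```python
import math


def r_to_r_squared(r: float) -> float:
    """Proportion of variance explained by a correlation r."""
    return r ** 2


def r_to_d(r: float) -> float:
    """Convert a correlation to Cohen's d (assumes two equal-sized groups)."""
    return 2 * r / math.sqrt(1 - r ** 2)


def d_to_r(d: float) -> float:
    """Convert Cohen's d back to a correlation (same equal-groups assumption)."""
    return d / math.sqrt(d ** 2 + 4)


if __name__ == "__main__":
    large_r = 0.3  # the 'large' correlation benchmark used in the text
    print(f"r = {large_r}: R^2 = {r_to_r_squared(large_r):.2f}, "
          f"d = {r_to_d(large_r):.2f}")
    print(f"d = 0.8: r = {d_to_r(0.8):.2f}")

    # The need-support finding: 30-40% of variance explained.
    for share in (0.30, 0.40):
        r = math.sqrt(share)  # correlation implied by that share of variance
        ratio = share / r_to_r_squared(large_r)
        print(f"{share:.0%} of variance: r = {r:.2f}, "
              f"{ratio:.1f}x the variance explained by r = .3")
```

The same sketch also shows that explaining 30–40% of the variance implies a correlation of roughly .55 to .63, which is why the need-support finding counts as several times a ‘large’ effect.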

Understanding SDT helps us know what we’re trying to do in community building. We’re trying to support people’s psychological needs as they learn how to improve the world. If we target those needs, and measure how well we're doing it, we can help people productively and happily do as much good as they can.

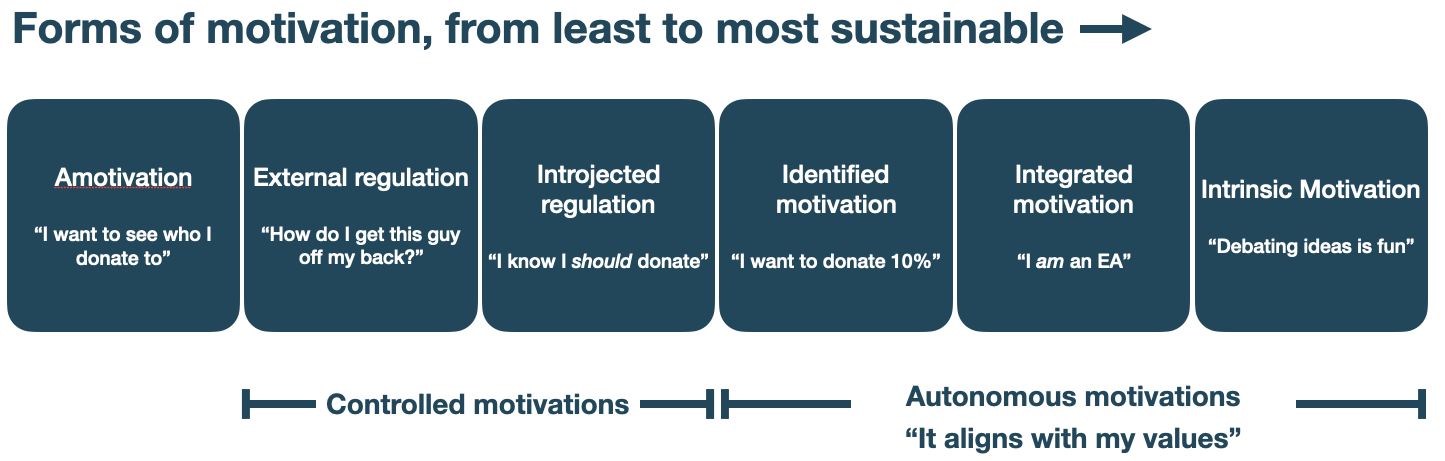

SDT describes how motivation varies not just in quantity, but in quality

When I was 18, I was on a date and a Hare Krishna gave me a book. Distracted by my teenage infatuation, I accepted the book and kept walking. In a classic ‘persuasion’ move (like those described in Cialdini’s Influence), they asked for a donation. I obviously paid it to avoid looking stingy in front of my date. I then tossed the book, and watched the Hare Krishna pick it up and repeat the ruse on someone else. This got the donation, but why does the thought of doing the same for the Against Malaria Foundation make us cringe? Isn’t $10 from a distracted man still buying life-saving bed nets for people in need?

Controlled types of motivation are weak, short-term, and fragile

Many donation-solicitation methods involve guilt and pressure to get a short-term outcome (see our meta-review here). We can pressure people into motivation (external regulation) or have them internalise beliefs that make them feel guilty (introjected motivation). Both of these do create short-term behaviour change.

But, these controlled forms of motivation are not what I think we—as a community—would hope for. They are why charity solicitation has a bad name. They’re ‘controlled’ because we feel motivated by a force that doesn’t align with our values. They generally lead to short-term motivation, or even covert resistance.

That’s not to say we should never do things that lead to guilt. Extrinsic forms of motivation can provide a strong impetus when they’re also value-aligned. People use Beeminder to provide extrinsic motivation that’s aligned with what’s important to them (e.g., to exercise). That’s very different from the time my mum paid me $10 every time I lost a kilo of weight. Her incentive turned into an excellent money-spinner: I would lose a few kilos, buy a new video game, put the weight back on again, and the cycle would repeat. The problem here was that the extrinsic motivation didn’t match what was important to me.

Autonomous motivations are the sustainable, value aligned motivation of highly engaged EAs

So if extrinsic motivation is weak, what are the alternatives? Why do we play video games, music, go bouldering, do yoga, or donate to charity when there are often few extrinsic rewards?

It’s because these are autonomously motivating. Intrinsic motivation is a special type of autonomous motivation: it’s where we’re driven purely by the joy of the activity itself. This is more relevant for sport, music, art, or gaming, where people often find the activity fun. But, donating to charity isn’t fun. Eating healthily and intense cardio aren’t fun. These are driven by different types of motivation. We often do these things because they’re aligned with our personal goals and values (known as identified motivation), or because they’re part of who we want to be (known as integrated motivation).

These better explain why people, for example, might sustainably avoid eating animal products. It’s not fun to have a less varied diet, and most people I know don’t avoid animal products out of guilt or pressure. People do it because they care about animals and the planet, and want to minimise their impact on both (which is identified motivation). Or, they identify with being vegetarian or vegan; that is, it’s part of their identity (which is integrated motivation). All of these ‘autonomous’ sources of motivation—identified, integrated, and intrinsic—are sustainable and distinct from extrinsic motivation because they mean the behaviour aligns with your values.

To promote highly engaged EAs, we want people driven to act by these autonomous sources of motivation. We want them to look for the best way to impartially do the most good (and take action on it) because that intellectual project aligns with their personal goals and values.[4] This framing helps answer some of the controversies in EA already.

- Do we want people to experience guilt in response to the drowning child metaphor? Maybe, but only if the person already sees how EA aligns with their values. If they don’t see that alignment, you might get short-term compliance or a hefty dose of resistance.

- Do we want people to identify as ‘an effective altruist’? Yes, as long as that identity also includes the values of truth seeking and a scientific mindset. As outlined in Scout Mindset, it’s true that some identities do cloud our abilities to see the truth, but most agree an EA identity explicitly involves truth-seeking as a value. If we discourage people from holding EA identities, we may be turning them away from a powerful source of sustainable motivation. Not using EA as an identity is like not using nuclear power. Yeah, sometimes it causes a meltdown, but most of the time it’s safe, low carbon, base-load energy.

Right, so autonomous motivation good, controlled motivations (generally) bad. But how do we, as a community, promote autonomous motivation?

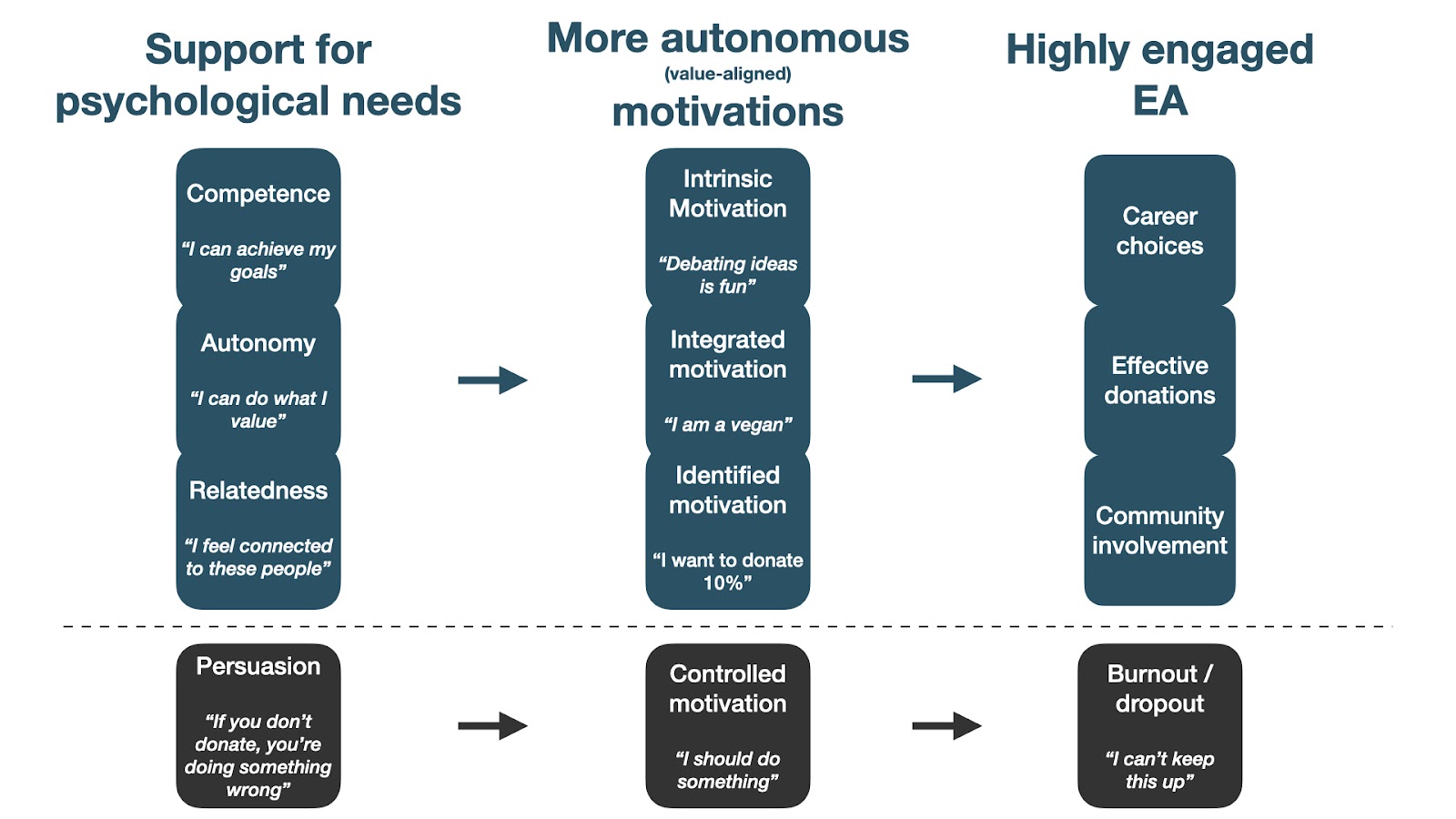

We say ‘educate, don’t persuade’, but what’s the difference? Support people’s psychological needs

I like the idea of ‘educate, don’t persuade.’ It rings true to me. But what does it mean? Education and persuasion both involve one person communicating ideas or beliefs to another person. When is communicating about, say, factory farming ‘education’ and when is it ‘persuasion’?

Persuasion has a bad reputation because it often fails to support people’s psychological needs, like their freedom of choice. ‘Educating’ feels more supportive of these needs.

The three most commonly accepted basic psychological needs are:

- a need to feel like you can align your behaviour to what you value (the need for ‘autonomy’),

- the need to feel like you have what you need to achieve your goals (the need for ‘competence’),

- and the need to belong and feel connected to others (the need for ‘relatedness’).

When we satisfy these needs, we motivate people over the long term. When we don’t, we might get short-term compliance, but nothing sustainable.

If you’re having dinner with a friend and they ask you where you donate, you probably have a good feel for their values. If you explain why you donate to the Long Term Future Fund, but also mention that, given what you know about them, they might prefer the Global Health and Development Fund or the Top Charities Fund, that feels more supportive. You’re connecting with them, acknowledging what they value, and giving them a path to effectively achieve those goals.

If you instead say “anything you do in the present is probably meaningless over the long term, so you should only really donate to the Long Term Future Fund or you’re neglecting to protect your great-grandchildren…” that feels more like bad ‘persuasion’. You’re ignoring what they value, making them feel hopeless toward achieving their goals, and guilting them into doing what you value.

I don’t think people in the community do the latter very often. However, consistently supporting psychological needs is a very difficult challenge with a mixed audience. That is, it’s hard to appeal to people’s values when their values might differ. It’s hard to give all people the information they need to achieve their goals when their goals diverge. A place where you belong and feel connected might be one where I feel alienated.

We’re more likely to be open to the views of others when we connect with them—when we feel like we belong in their group. Both the source of the message and the message itself matter to whether or not we believe it. For example, pro-environmental messages were only persuasive to conservatives when coming from a conservative source (even if the message was tailored to their values). I focused on autonomy first because I think value alignment is a better method of signalling in-group relationships than external cues (e.g., appearance). I don’t think EAs should wear MAGA hats to reach Republicans in the US. I think they’re better off aligning on the benefits of market solutions, or the desire to make government spending decisions cost-effective. We can make EA ideas helpful to many groups.

Still, EA won’t be a supportive place for everyone. Most people want to do good, and as Hilary Greaves says:

“All you really need is some component of your moral philosophy which acknowledges that making the world a better place is a worthwhile thing to do. And that’s really all you need to get the effective altruist project off the ground.”

We could focus on this shared common ground, and on helping people better live by their values. Instead, I’d hazard that we bounce people because many of us focus on the counterintuitive or demanding conclusions from EA (e.g., AI doom). Yes, we make normative arguments (e.g., ‘it’s better to do more good than less’). But, we don’t have to make normative arguments in a controlling way.

By being ‘need supportive’, we can help people feel understood, competent, and empowered. If they feel this way, they are more likely to listen to your ideas. It makes them more likely to take on new beliefs and values (e.g., a more impartial sense of altruism). Then, as they become more aligned, it’s easier to support their psychological needs. It creates a virtuous cycle.

So, this post aims to outline some gaps between what research says on building long-term motivation, and what sometimes happens in EA events I’ve been to.

Guilt and pressure are bad long-term motivators.

Use existing values instead.

Like many EAs, I found Peter Singer’s Drowning Child thought experiment persuasive. It was certainly part of my initial drive toward donating to global poverty, and those donations were central to becoming an EA.

It also took me to a pretty dark place, where I felt I couldn’t justify my life decisions or minor purchases, once converted into lives I could have saved. Some EA forum posts even suggested we use this as a persuasive appeal (see the ‘currency’ described in this post; I found the currency too unpalatable to repeat here). It took a few good years of therapy, supportive colleagues, and some quality writing from the EA community[5] to get me out of that space into something more sustainable.

I also noticed the drowning child metaphor got the hackles up in other people. Yes, I needed to be more tactful with some of them, but others just found the conclusions too demanding to even entertain. I don’t think my experiences here are unique. I think they align with the research around motivation.

The problems with guilt or fear appeals are two-fold:

- They rely upon the recipient feeling you have a shared set of values. In cases where that isn’t explicit, or when change is hard, guilt- or fear-based appeals can lead to resistance, both to the messenger and the idea. For example:

- The Behavioural Insights Team’s report on changing diets summarised a range of studies showing guilt-based appeals can backfire, and pride and positivity appeals are more likely to work. For example, for most people, moralising around meat can backfire: people often eat more meat in the month afterwards.

- Interventions that target controlled motivations tend not to work for reducing criminal reoffending (see this meta-review for effects across a range of interventions; a classic example of this failure known to the EA community is the harm caused by Scared Straight, where shaming juvenile offenders led to higher rates of recidivism).

- Our recent meta-analysis of 167 studies showed that—across cross-sectional, longitudinal, and experimental designs—controlled motivation did not increase prosocial behaviour, but increased antisocial behaviour. Autonomy-supportive environments were what increased prosocial behaviour (preprint here).

- Other meta-analyses have found that guilt and fear don’t harm engagement or performance (see those in work and education). But, even if people work hard, I worry those forms of motivation may lead to mental health problems and burnout in the community. For example:

- Obsessive passion is where someone is strongly committed to an activity but for controlled reasons (e.g., driven by guilt). A meta-analysis of 1,308 effect sizes showed obsessive passion was associated with higher burnout, negative affect, anxiety, rumination, and activity/life conflict.

- A meta-analysis of a range of health behaviours found guilt-based motives do lead to positive health behaviours, but lead to negative mental health outcomes. Similarly, experiences of guilt and shame are strongly correlated with depressive symptoms (meta-analysis of 108 studies).

Whether we’re trying to educate about the importance of the long-term future, the plight of factory farmed animals, or the opportunities to improve global health, there are better alternatives to guilt and fear.[6] Many of the core claims of EA are intuitive to most people, for example:

- all else being equal, we should do more good rather than less;

- science and reason are good methods for identifying what works;

- we’ll make better decisions if we see the world as it is, rather than as we want it to be;

- future generations matter;

- animals can suffer;

- luck determines where and when you were born;

- it’s good to help others;

- it’s better to help them with what they need rather than what is nice for us to give.

EA provides some ideas that might be novel to many people, but agreeing on the intuitive claims first can establish value alignment. Some of these might sound like applause lights, but they serve to align us with the audience. As pointed out by others, EA can sound less weird, if we want it to. We should spend our weirdness points more wisely. We can frame EA in ways that explain it accurately, clearly, and convincingly (e.g., Gidon Kadosh’s piece).

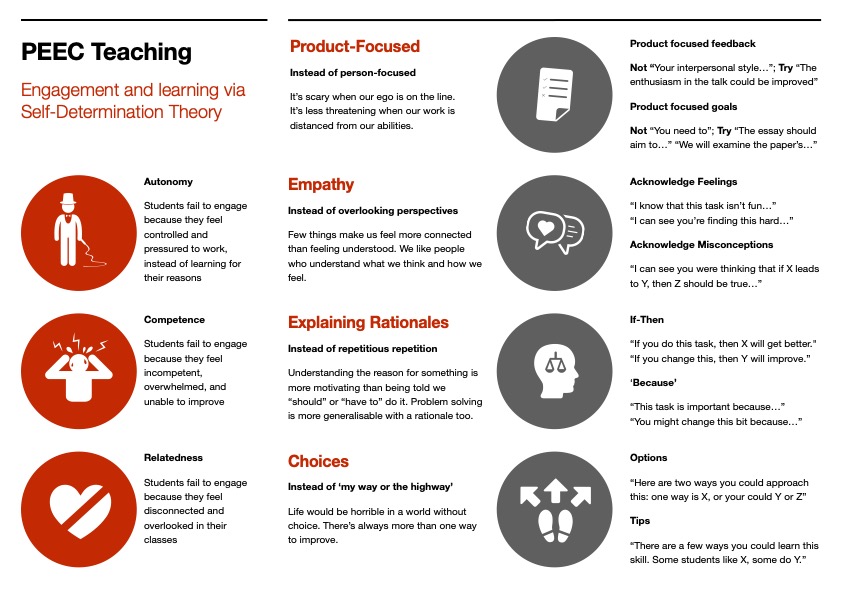

Using values and avoiding guilt are just part of creating a ‘need supportive environment’

We had over 30 world experts in self-determination theory agree on what makes a motivating learning environment.[7] They agreed on a range of strategies that would have moderate-to-strong improvements to motivation. Expert opinion is relatively weak evidence but the goal here is to make more concrete what a ‘need supportive’ environment looks like. I’ll focus below on the less obvious ones.

For more detail, I made a short video series talking about how to satisfy these psychological needs. I’ll explain the key ideas in the rest of this post, but if you want more, you might find this playlist of 5 short videos (5 minutes each) useful, or this one-page summary:

Educators that support autonomy tend to…

- Provide rationales that connect to the learners’ values

- For example, a clean-meat speaker starts with environmental and health benefits, because she knows people are more likely to respond to these values than to animal welfare benefits (see our other meta-review).

- Explain the real problem in the world you’re hoping to help the person solve, how they’re going to learn to solve it, and how they’ll know they’ve learned it

- The technical term here is ‘constructive alignment’.

Basically, work backwards from the real-world problem to the learning objective(s), to the assessment(s), to the learning activity. For example:

- “As you’ll learn in this program, AI is likely to become a powerful force in the world, and we don’t yet know how to build it safely.” (real world problem)

- “In this program I want to help you identify whether you think you might fit a career in AI safety.” (specific learning objective)

- “At the end of the program, you’ll have an opportunity to do a 4-week project to test your fit for this kind of work. I will help you craft something that fits your interests.” (assessment task)

- “Today, to help prepare you for that project, we are going to…” (explain rationale for the learning activity)

- In EA education and outreach, these four components often do not align well. For example:

- We have fellowships that are almost exclusively discussions but projects (‘assessments’) that are almost all writing. If I need to learn to write for the project, the learning activities should help me practice my writing with opportunities for feedback.

- In an AI and Economics course I started, the content pivoted to the history of Claude Shannon and Information Theory. For me to stay motivated, I need to understand why learning about Information Theory (the learning activity) helps me solve economic problems around AI (the real world problem).


- Use the language of choices, empathy, and values instead of ‘shoulds’ and ‘musts’

- It’s okay to make normative and scientific claims, but the language can be more or less autonomy supportive. There’s a big difference between a doctor showing empathic concern about a client’s drug use and…


- People really don’t like feeling judged and pressured. So, instead of…

- “Don’t you care about animals?”

- “You should really think about another career.”

- “If you don’t donate, you’re doing something wrong.”

- Try…

- Talking about what others do: “Many people I know have made small changes that make a big difference for animals.”

- Using if-then rationales: “If you want to have an impact with your career, 80k has some great resources.”

- Preemptively empathising: “Some people find Singer’s argument pretty demanding. What did you think?”

To build a sense of belonging and relatedness, educators can…

- Follow CEA’s guiding principles (e.g., commitment to others, openness, integrity, and collaborative spirit)

- Explicitly show empathy to people in a range of ways, like:

- With their current feelings or beliefs, even if you differ: “So you think there’s basically no chance of humans ever going extinct. Yeah I know that can be hard to imagine.” (see also Rapoport’s Rules) or

- With their misconceptions: “I used to think the top charity might be 2x the average charity, as well…”

- Be expressive and enthusiastic themselves

- Role-modelling the kind of behaviour we want to see in the group is an important component of transformational leadership (i.e., ‘idealised influence’), which is one of the most well-established models of leadership for producing the outcomes we care about (see this meta-analysis)

- Help people cooperate, share, and interact

- Creating shared tasks where people work together to produce something valuable (e.g., rather than only using discussion prompts, I had my AGI Safety Fundamentals cohort work together on creating a concept map of what they’d learned so far and how it all fit together)

- Create environments where people are likely to form friendships, not just learn (e.g., see this recent forum post), by providing opportunities for either unstructured (e.g., food afterward) or structured social interaction (e.g., some activities purely for fun, or the [sometimes dreaded] ice-breaker)

And to make people feel competent, we can…

Use the hordes of meta-analyses on what improves learning. There are dozens of these that are relevant to community builders. I’ll turn to those now.

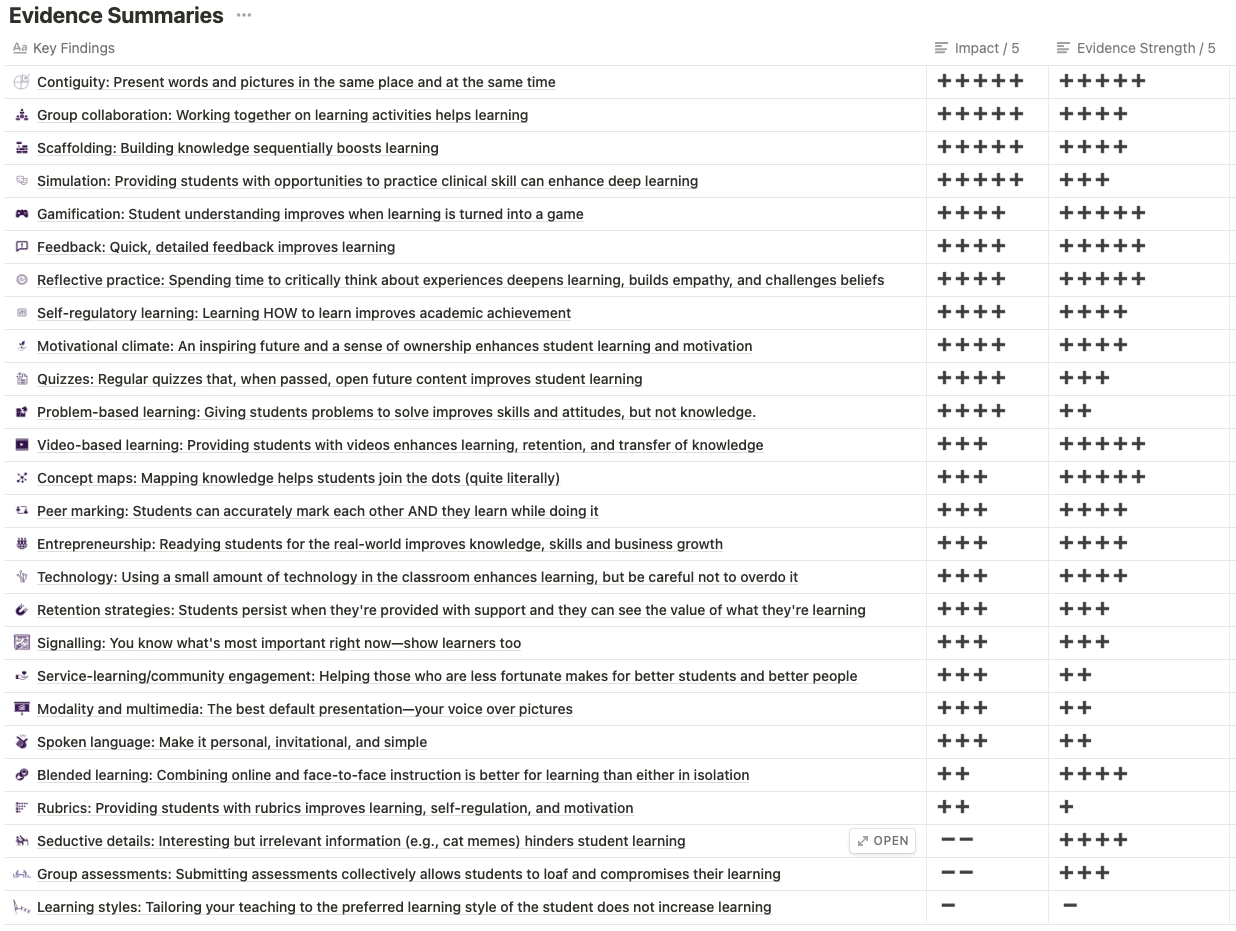

If education is the goal, use evidence-based education strategies

There are many repositories of evidence-based teaching strategies, most of them targeting school children (e.g., Visible Learning, the Educational Endowment Foundation, and Evidence for Learning). Adult learning can look and feel different from school classrooms. As a result, we’ve created a toolkit summarising the meta-analytic evidence for what works in adult learning (usually universities) here.[8] See these links if you want to know why meta-analyses are important for teaching, how we tried to make them more accessible, and how to use them in your teaching.

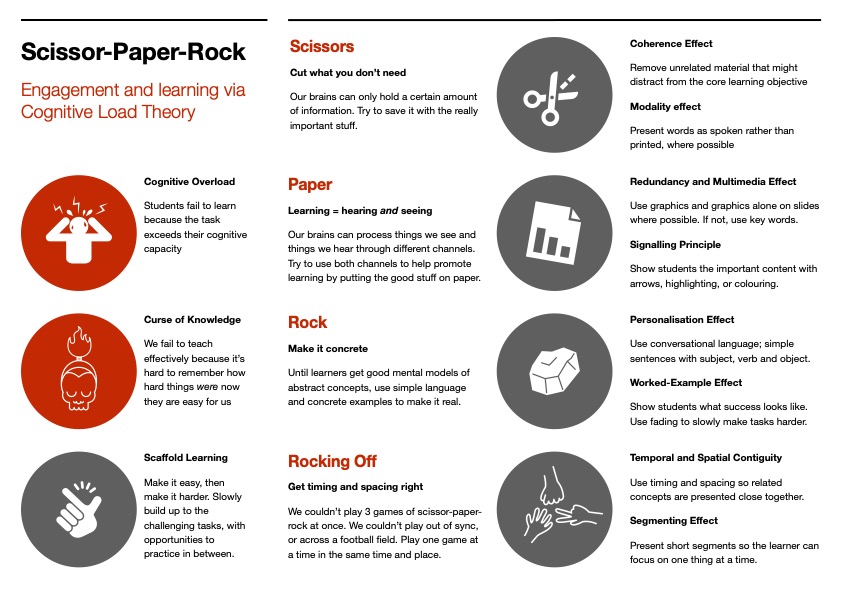

These strategies are not all common sense: many educators use strategies that have been shown to reduce learning. For example, I still hear people espousing so-called ‘facts’ about learning styles (e.g., ‘I’m tailoring this to visual learners’). Learning styles don’t work. Instead, wherever possible, present words as speech and put images alongside them. Make the language as simple as you can, because you’re probably suffering from the curse of knowledge. That means, as is often recommended on the forum, that we should avoid jargon (see Michael Aird, Akash Wasil, Rob Wiblin) unless the goal of the session is to learn that jargon (e.g., ‘instrumental convergence’ in the AI Safety Fundamentals course) or we know everyone has already covered it (e.g., in later weeks of that course).

More controversially, meta-analyses show that too many jokes and memes distract an audience, so people are more likely to remember the joke than what you’re trying to teach. This is controversial because, you know, funny stuff is funny. For example, I love Last Week Tonight and Robert Miles’ AI safety videos. Their jokes make things more engaging, but I often notice myself attending to a meme and forgetting what the presenter was saying. I obviously don’t think we should eschew humour completely (I still add terrible jokes to my videos), but we need to be careful to make the jokes relevant to the learning objectives, so the learning still comes through.

Other evidence-based strategies, possibly obvious but often neglected, include:

- Make things interactive

- We usually only realise what we know and what we missed when we try to explain our thinking, or use our knowledge to solve problems. Learning is roughly proportional to the amount of interactivity you create:

- Passive listening and reading leads to the lowest learning

- Highlighting and taking notes is better (active listening)

- Answering questions and solving problems is even better (constructive learning)

- Collaborating with others means you can fill their knowledge gaps, and they can fill yours (interactive learning)


- Provide gradually increasing, self-referenced challenges, instead of one challenge for everyone

- ‘Scaffolding’ is an effective way of doing this, where learners solve easier problems before being challenged by more difficult ones. This is generally obvious: start with The Precipice instead of Greaves and MacAskill’s working paper on Axiological and Deontic Strong Longtermism. But, for developing skills, the community is not always great at scaffolding. I enjoyed the AGISF, but the independent project ‘snuck up’ on many of my colleagues. Some got overwhelmed and stopped. If we want people to eventually be able to do an independent project on AI safety, the course design might need fewer discussions and more group activities that look like ‘mini projects’.

- Provide encouraging and constructive feedback on how they’re going

- Discussions can be good if they encourage people to voice their understanding of a topic and then get feedback on how well they’ve understood it. The problem is that, often, many people in the group don’t get any feedback on their understanding. If you speak last, after everyone else has already spoken, you might not get a real chance to check your knowledge.

- Instead, ensure each person gets fast and reliable feedback. One of the best reasons for doing group, hands-on activities is that it’s easier to give feedback on what people are learning. Give each person an opportunity to show what they know so you can supportively give fast, accurate feedback on how well they know it.


- Related to constructive alignment (i.e., working backward from the skill to the assessment to the learning activity, see above), one of the most robust findings in evidence-based teaching is the benefit of so-called ‘simulation’: basically, have people practise the thing you want them to eventually do. If you want people to be able to facilitate a discussion about EA, then don’t have them read a forum post. They’ll learn best if you have them actually facilitate a real session, facilitate a pretend session, or watch a real session and make decisions about how they would respond. With all of these, though, remember the key mechanism is fast and faithful feedback.

- Have learners build their own concept maps (either individually or in a group) to help them consolidate their knowledge and flag areas of disagreement.

- Design case studies with problems for people to work through (i.e., problem-based learning)

- Most of these strategies can be used in collaborative group settings. This not only builds connections; group collaboration is also one of the best things we know of for improving learning.

- Don’t make projects or ‘assessments’ collaborative. The opportunity to let others do the work (so-called ‘social loafing’) is too tempting for many to resist. As a result, group assessments lead to less learning than individual ones, and there’s no good data that they lead to increased ‘soft skills’ like ‘communication’. My experience is that those soft skills need to be taught explicitly and deliberately practised.

- If it makes sense in your setting, use quizzes to give people feedback on their learning. Ideally, some gamification could make the course more engaging, but it is usually costly and hard to implement. Still, there are lots of educational technologies that improve learning, especially if they make it easier to promote the learning mechanisms described above (e.g., better, faster feedback via H5P; better group collaboration via Miro; closer simulation of the real-world task, like learning statistical analyses in R using Swirl).

Learn how to use multimedia so it doesn’t overload your audience

Even if you’re not making curricula or designing virtual programs, you’re probably trying to communicate your ideas publicly. You might be writing on the forum, in which case learning to write in a clear and compelling way is going to increase your impact (HT Kat Woods). But writing is an inefficient mechanism for communication. Our brains are designed to hear words[9] and see pictures (which is why videos work better than many other forms of teaching and communication). Hundreds of experiments have shown that a handful of strategies improve learning from multimedia (see our meta-review from last year). I’m going to practise what I preach here and direct you to one of three multimedia methods for learning these principles. The best is this video playlist (five videos, roughly five minutes each) that walks through how to make multimedia that works. If you’re strapped for time, this Twitter thread covers all the evidence-based strategies. If you don’t want to leave this post, this one-page summary covers most of the key ideas.

If possible, try to use a narrative arc that makes the problem concrete, hits an emotion, has something unexpected, then a concrete resolution. My adaptation of Chip and Dan Heath's excellent book ('Made to Stick'; my video summary here) involves the following five steps:

- Problem with emotion: who's the hero? What problem do they face? How did they feel?

- Stuck point with misconception: where did they get stuck? What unhelpful belief kept them stuck?

- Empathy then refutation of stuck point or misconception, made by someone credible: Show you understand why people hold the misconception. Then, explain why it's wrong.

- Principle for next steps, using an analogy: what should the hero believe instead? Ideally, provide an analogy to the solution.

- Solution to the original problem, with a new and positive emotion: how did the hero win using the new belief? How did they feel afterwards?

Zan Saeri used some of my old video scripts to turn this into a GPT-3 preset. Have fun.

In summary, we’re all teachers.

Learn to do it well.

So much of community and field building involves what great teachers do: we share important ideas, make a motivating environment to explore the ideas, and create activities that help us engage with them. It’s not the only thing, of course, but by trying some of the ideas in this post, you can make this part of your community building more effective and evidence based. In doing so, we can better grow the community of people who are committed to EA.

If you want to study how to do this better, reach out.

I'm an academic in the School of Psychology at the University of Queensland. I think building the EA, rationalist, x-risk and longtermist communities is a very important pathway for doing a huge amount of good. If you're interested in this and want to do research or run projects in this space, don't be afraid to reach out via noetel at gmail dot com

Thanks to the following people who provided constructive feedback on this post.

Alexander Saeri, Peter Slattery, Emily Grundy, Yi-Yang Chua, Jamie Bernardi, and Sebastian Schmidt were all autonomy supportive teachers that helped me improve this post. Mistakes are my own.

- ^

I’m acutely aware of the irony that a forum post including prohibitions against jargon contains jargon as the first two words.

- ^

Per Ivar Friborg has also published a great summary of SDT for EA here

- ^

Gender and name randomised for anonymity

- ^

This aligns well with Luke Muehlhauser’s conceptualisation of Effective Altruism as a project that some people are excited about, but others aren’t, and I think that difference is the degree of alignment between the project and their own values.

- ^

There were too many forum and blog posts to name each one that helped me become more sustainably motivated, but if I had to choose one, it’d be Julia Wise

- ^

I’m obviously not the first person to suggest we should replace guilt (e.g., Nate Soares)

- ^

The experts also agreed on mistakes some exhausted, defeated teachers might make, like yelling at students, but I didn’t include them here because I can’t imagine an EA community builder making the same mistakes.

- ^

We haven’t finished porting INSPIRE from our beta version to the proper website, so I actually prefer the user experience of this version on Notion. Still, I’ve linked to the new website so this post is more likely to be evergreen.

- ^

Another hat tip to Kat and Nonlinear for the Nonlinear Library. Not only are podcast versions of the forum more practical for many people, but we’re likely to learn just as well, if not better (assuming the posts don’t have many images that we’d miss). The Astral Codex Podcast does an amazing job of this: Solenoid Entity explains the graphs in exquisite detail, and has them show up on your podcast player (🧑🍳😙🤌)

You may be interested in this convo I had about research on pedagogical models. The tl;dw if you just want the interventions that have replicated with large effect sizes:

This is a useful list of interventions, some of which are mentioned in the post (e.g., quizzes; we've summarised the meta-analyses for these here). I think steps 1, 2 and 3 from the summary of the above post are the 'teacher focused' versions of how to promote deliberate practice (have a focus, get feedback, fix problems). Deliberate practice literature often tells learners how they should structure their own practice (e.g., how musicians should train). Teaching to others is a useful way to frame collaboration in a way that makes it safe to not know all the answers. Thanks for the nudges.

Thanks so much for writing this up.

I carefully read through the "how to teach effectively in five steps" and got a lot of value from it. I've yet to read through other aspects of the blog post.

The thing I love the most about this section is that it builds on robust findings from meta-analyses. Why do I love this? Because i) nothing in community-building is based on robust evidence and ii) it counteracts a tendency for EA to rely too much on what's EA, reinventing a new paradigm rather than recalling that the majority of what's useful in the world isn't "EA" (we're substantially standing on the shoulders of giants).

Additionally, here are a couple of reactions and questions on the five-step section specifically:

1. I appreciate the focus on defining a learning objective as a skill and then working backward from that. I think that "creating personal theories of change and impactful career plans that one can wholeheartedly (or agentically) pursue" is a good default to have in mind. One downside to this approach is that it might lead to Goodharting, with the teacher going into "exploitation" mode. E.g., I worry that I might become too attached to a specific outcome on behalf of the students and tacitly start to persuade (similar to some concerns expressed by Theo Hawkins) and/or neglect other important opportunities that might emerge during the program. How do you think of that risk?

2. Can you say anything about which forms of summative assessment are particularly useful? For Future Academy, we're contemplating pitching project ideas or presenting and discussing career plans (although we likely wouldn't label it as an assessment but rather as an exciting culmination of their work).

3. I think there's a typo under 3a. (“Formative assessments” —> formative activities)?

4. While I appreciate the rigor of the evidence upon which this post is built, I worry that it holds on average for average university students but might not generalize to the subpopulation that some portion of community-building efforts is targeted towards (e.g., people who are in the 90th percentile on various domains, including openness to experience, conscientiousness, need-for-cognition, etc.). How worried are you about this?

5. Strongly agree with the importance of role models. Humans are deeply social and our social incentives (including role models) might be the most important things to change. In fact, being generally good people (or virtuous) in addition to the unique virtues you mentioned appears important as we have some research showing that this might be off-putting. Finally, same-race role-models appear to be particularly important.

Thanks for taking the time to add these really useful observations, Seb.

It's been a while since I read Theo's post so I might be missing the mark here. I agree both explore and exploit are important, especially for young people. I haven't thought deeply about this but my intuition says "if it's also important to x, be explicit that you have multiple goals." For example, if 'create a personal theory of change' is the goal for Future Academy, you might also want people to 'create tentative career plans for 5 distinct careers', or 'develop connections so you have 3 people you could call to ask for career advice'. Sure, the latter isn't a 'learning objective' and it might be better unsaid. Still, I think a generally good way of avoiding Goodharting might be using multiple goals or criteria for success.

The word that comes to mind is to make it 'authentic.' Basically, make it as close as possible to the real-world skill you want people to do. This is rare. Universities expect critical thinking, creativity, and communication, but use recall-based multiple-choice questions. I've seen essays and reflections used to assess interpersonal skills, instead of videos or presentations. Pitching project ideas and presenting career plans sounds well above average. If I had to nit-pick, I've never 'presented my career plans', so something slightly closer to what people might do anyway would be 'write a grant application.'

Both are things. I should have clarified it. Formative assessments are formative activities that count toward a grade or completion status. As mentioned by another commenter, low-stakes quizzes are helpful for providing feedback and accountability to learners, but better fit university courses than fellowships etc.

This is an important question. I don't think I know yet how big a problem this is (as I said, people should reach out if they want to work on it). One of the benefits of having worked in sport and performance psychology is that it mostly focuses on people in the top 1–5% of their field. As far as I can tell, the core principles underlying most of the above (psychological needs; deliberate practice; cognitive load limits) still apply to those people. You do need to calibrate the challenge to the person. People in the top 5% are going to be bored if you spend 10 hours explaining a t-test. So, I'm sure some things don't generalise perfectly, but I think that's more likely to be the specific techniques (e.g., 'use quizzes') than the mechanisms (e.g., 'provide feedback').

Yeah I didn't go into this much so it's a good pickup. Both are useful to remember.

This is great content! Thank you for writing and sharing it. I added the tag "High Impact Psychology" because this is an example of how psychology can help to increase EA impact.

There's a typo:

"(r = .3; about ~10% of the variance explained [R² = .01]" --> "R^2=0.1"

Ahhh! Yes, thanks. Fixed.

Regarding self-determination theory: how would you relate it to agency (similarities and differences)? Agency (or the related self-efficacy) appears relatively popular among EA and has been around in psychology for a while.

So in education 'agency' is often defined as 'agentic engagement'—basically taking ownership over your own learning. I couldn't find any good systematic reviews on interventions that increase agentic engagement. This is pretty weak evidence and might have a healthy dose of motivated reasoning (my end, and theirs), but people who have thought about agency for longer than I have seem to think...

... so I don't have any better ideas than those described above.

That makes sense in the context of education. However, I'd add that agency also contains some components related to general ability (and perceived ability) to exert influence on the world in a way that's aligned with one's values. The section on what autonomy supportive educators tend to do is highly valuable. Appears quite related to coaching (although coaching is a much broader term).

Thanks for writing this post! I would love to see how elements of what is reported here could potentially improve CEA's virtual introductory program and be more discussed among/taught to community builders on a consistent basis. I look forward to digesting these topics more properly to see how they can benefit the work I do as a university group supporter.

It will take me some time to digest the information in this post, and I've only skimmed through part of it so far, but I want to express my appreciation for providing such a resource-dense post.