This is a link to a blog post outlining my current thinking on why GiveWell should explicitly quantify the uncertainty in every parameter of its cost-effectiveness model. It explains precisely how failing to quantify uncertainty at all, or quantifying it for only some parameters, leads to a suboptimal allocation of funds.

The table of contents (also serving as a summary) is below.

Contents

- Define complete and partial uncertainty quantification.
- Explain why GiveWell should use uncertainty quantification at all:
    - Bayesian adjustments for post-decision surprise may change the relative ranking of New Incentives.
    - Uncertainty quantification enables the alternative decision rules introduced by Noah Haber in ‘GiveWell’s Uncertainty Problem’.
    - Uncertainty quantification lets us calculate the value of information on any parameter whose uncertainty is quantified, which allows a systematic approach to setting a research agenda.
- Explain why partial uncertainty quantification is inadequate:
    - Post-adjustment rankings depend on the absolute variances of the naïve estimates, not just their relative variances.
    - Partial uncertainty quantification risks introducing systematic biases, depending on which key parameters are chosen for quantification.
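The value-of-information point can be made concrete with a small calculation. The sketch below computes the expected value of perfect information on a single parameter (often called EVPPI) for a two-way choice between an uncertain option and a benchmark whose value is treated as known. It assumes, purely for illustration, that the uncertain option's cost-effectiveness is a sum of independent normally distributed contributions, so the closed-form normal loss formula applies; the parameter names, means and standard deviations are hypothetical, not GiveWell figures. Real cost-effectiveness models are nonlinear and would usually need Monte Carlo estimation instead.

```python
import math


def norm_pdf(z: float) -> float:
    """Standard normal density."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z: float) -> float:
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def evppi(mean: float, contribution_sd: float, benchmark: float) -> float:
    """Expected value of perfect information on one parameter.

    Assumes (hypothetically) that the uncertain option's value is a sum of
    independent normal contributions, so learning one parameter shifts the
    option's expected value by a normal amount with SD contribution_sd.
    The decision is to fund the better of the uncertain option and a
    benchmark whose value is known.
    """
    if contribution_sd == 0.0:
        # Unquantified (zero) uncertainty means the information looks worthless.
        return 0.0
    d = (mean - benchmark) / contribution_sd
    # E[max(V, benchmark)] for V ~ Normal(mean, contribution_sd**2).
    value_with_info = (benchmark + (mean - benchmark) * norm_cdf(d)
                       + contribution_sd * norm_pdf(d))
    # Without the information we pick whichever option has the higher mean.
    value_without_info = max(mean, benchmark)
    return value_with_info - value_without_info


# Hypothetical parameters: SD of each one's contribution to the uncertain
# option's cost-effectiveness, in arbitrary units.
contributions = {"effect_size": 2.0, "adherence": 1.0, "cost_per_person": 0.5}
MEAN, BENCHMARK = 9.0, 10.0

values = {name: evppi(MEAN, sd, BENCHMARK) for name, sd in contributions.items()}
# Order parameters by how much resolving their uncertainty is worth.
research_agenda = sorted(values, key=values.get, reverse=True)

# Resolving all parameters at once uses the SD of the summed contributions.
total_sd = math.sqrt(sum(sd ** 2 for sd in contributions.values()))
evpi_all = evppi(MEAN, total_sd, BENCHMARK)
```

Ranking parameters this way gives one simple, systematic ordering for research; note that any parameter left without quantified uncertainty gets a value of zero and would never be prioritised.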

Some diagrams without context

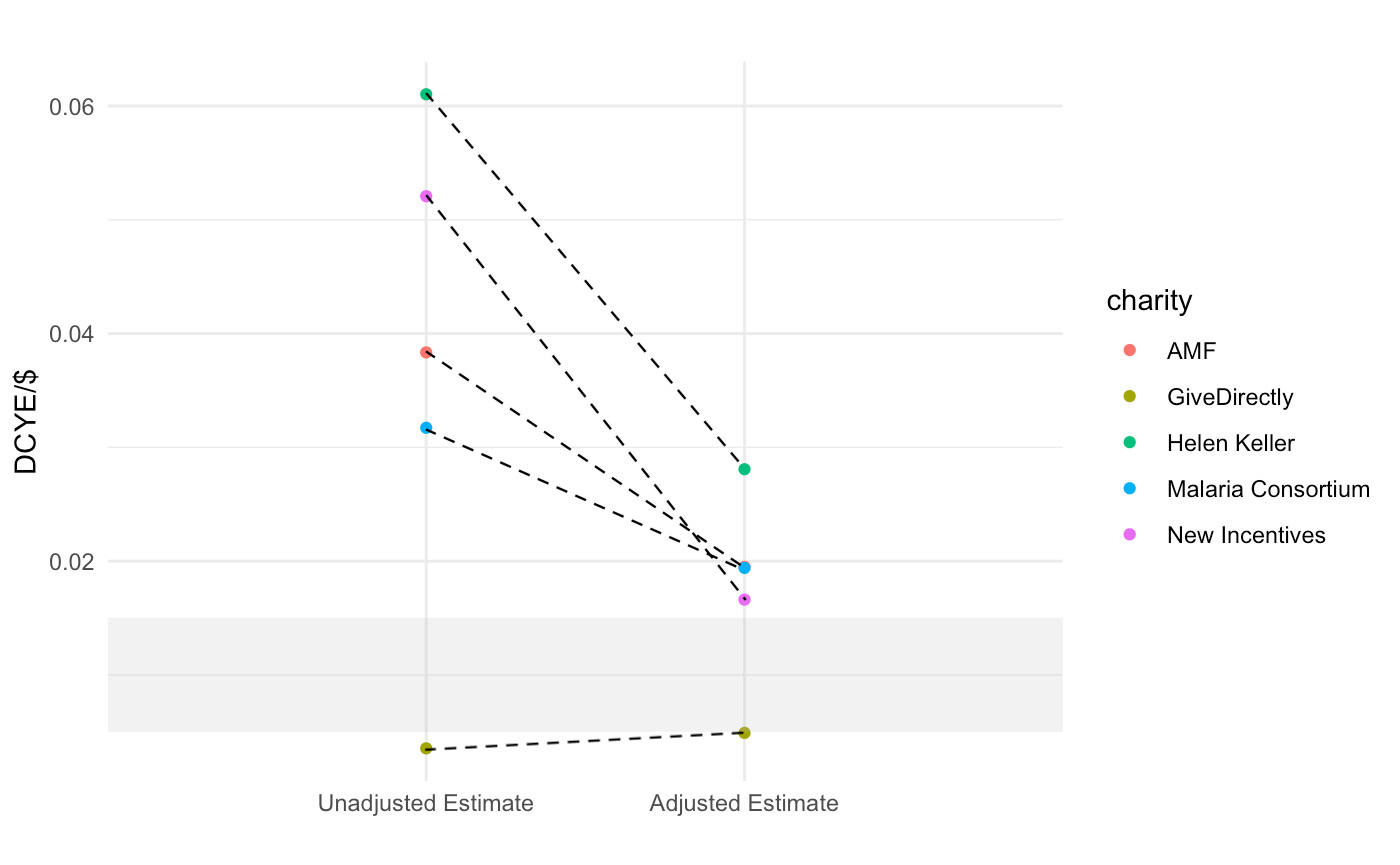

Explicit Bayesian adjustment of GiveWell's current cost-effectiveness estimates
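The post-decision surprise that motivates this adjustment (sometimes called the optimizer's curse) can be reproduced in a short simulation. The sketch below assumes a textbook normal-normal model: each option's true cost-effectiveness is drawn from a common normal prior, and its naïve estimate adds normal noise whose standard deviation differs by option. All numbers are hypothetical, and this is not a reconstruction of GiveWell's model. Note that the adjustment needs each estimate's noise variance, which is only available once its uncertainty has been quantified.

```python
import random


def posterior_mean(x: float, noise_var: float, prior_mean: float, prior_var: float) -> float:
    """Normal-normal update: precision-weighted average of estimate and prior mean."""
    return (prior_var * x + noise_var * prior_mean) / (prior_var + noise_var)


def simulate(noise_sds, prior_mean=0.0, prior_sd=1.0, trials=20000, seed=0):
    """Compare choosing by naive estimate vs. by Bayesian-adjusted estimate."""
    rng = random.Random(seed)
    prior_var = prior_sd ** 2
    totals = {"naive_surprise": 0.0, "bayes_surprise": 0.0,
              "naive_value": 0.0, "bayes_value": 0.0}
    for _ in range(trials):
        # True values share a prior; estimates add option-specific noise.
        truths = [rng.gauss(prior_mean, prior_sd) for _ in noise_sds]
        estimates = [t + rng.gauss(0.0, s) for t, s in zip(truths, noise_sds)]
        adjusted = [posterior_mean(x, s ** 2, prior_mean, prior_var)
                    for x, s in zip(estimates, noise_sds)]
        i_naive = max(range(len(estimates)), key=estimates.__getitem__)
        i_bayes = max(range(len(adjusted)), key=adjusted.__getitem__)
        # Surprise = realised true value minus the estimate used to choose.
        totals["naive_surprise"] += truths[i_naive] - estimates[i_naive]
        totals["bayes_surprise"] += truths[i_bayes] - adjusted[i_bayes]
        totals["naive_value"] += truths[i_naive]
        totals["bayes_value"] += truths[i_bayes]
    return {key: total / trials for key, total in totals.items()}


# Five hypothetical options whose estimates range from precise to very noisy.
result = simulate([0.5, 1.0, 2.0, 3.0, 4.0])
```

Choosing by the naïve estimate gives a strongly negative average surprise, because the noisiest estimates win most often; choosing by posterior mean gives an average surprise near zero and a higher average true value.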

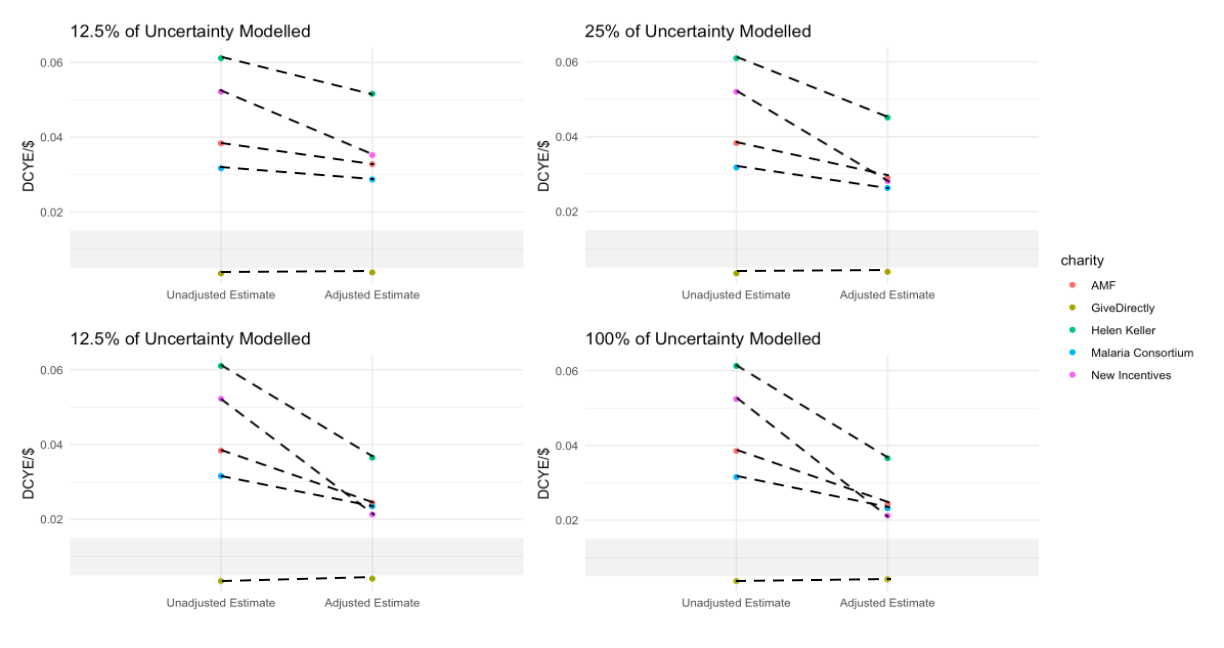

How this adjustment changes if only some of the uncertainty is explicitly modelled

*The bottom-left panel should read '50% of Uncertainty Modelled'.
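A minimal deterministic sketch of why the share of uncertainty modelled matters, assuming the same normal-normal adjustment and interpreting "X% of uncertainty modelled" as the modelled variance being X% of the true variance (an assumption; the figure may define it differently). The two charities, the prior and all numbers are hypothetical. Halving every variance leaves the relative variances unchanged but reverses the ranking, and quantifying only one charity's uncertainty fully favours the other, less-shrunk charity.

```python
def posterior_mean(x: float, noise_var: float, prior_mean: float, prior_var: float) -> float:
    """Normal-normal update: precision-weighted average of estimate and prior mean."""
    return (prior_var * x + noise_var * prior_mean) / (prior_var + noise_var)


# Hypothetical prior over cost-effectiveness (arbitrary units).
PRIOR_MEAN, PRIOR_VAR = 1.0, 6.0
# Hypothetical charities: (naive estimate, true variance of its error).
CHARITIES = {"A": (10.0, 4.0), "B": (8.0, 1.0)}


def adjusted_estimates(fractions: dict) -> dict:
    """fractions maps each charity to the share of its variance that is modelled."""
    return {
        name: posterior_mean(x, fractions[name] * var, PRIOR_MEAN, PRIOR_VAR)
        for name, (x, var) in CHARITIES.items()
    }


def ranking(estimates: dict) -> list:
    """Charity names from highest to lowest adjusted estimate."""
    return sorted(estimates, key=estimates.get, reverse=True)


full = adjusted_estimates({"A": 1.0, "B": 1.0})       # A 6.4,  B 7.0
half = adjusted_estimates({"A": 0.5, "B": 0.5})       # A 7.75, B ~7.46
a_partial = adjusted_estimates({"A": 0.5, "B": 1.0})  # A 7.75, B 7.0
b_partial = adjusted_estimates({"A": 1.0, "B": 0.5})  # A 6.4,  B ~7.46
```

In this toy case, B ranks first with full quantification, while A ranks first when every charity has only half its variance modelled, or when only A's is under-modelled.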

This work would not have been possible without previous contributions on this topic from Sam Nolan and Hannah Rokebrand, as well as related criticisms of GiveWell (most recently from Noah Haber).
