Some terms and concepts that are important to many longtermists are existential risk, extinction risk, global catastrophic risk, and suffering risk. Also important are the terms and concepts for the four types of catastrophes corresponding to those four types of risks.[1]

Unfortunately, people sometimes mistakenly use one of these terms as if it were synonymous with another, or mistakenly present one concept as fitting entirely within another as a subset. At other times, people discuss these concepts in complete isolation from each other, which is not a mistake but may sometimes be a missed opportunity.

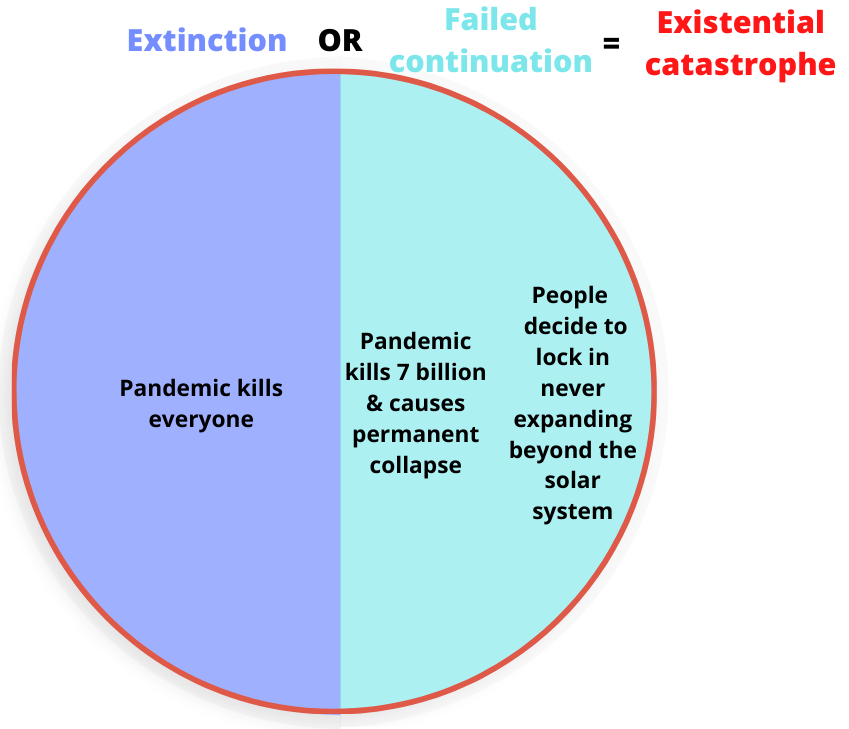

In reality, there are important distinctions and important overlaps between these terms and concepts. So this post reviews definitions and descriptions of those four types of catastrophes, discusses how they relate to each other, and presents three Venn diagrams (which include example scenarios) to summarise these points. I hope this can help increase the conceptual clarity of discussions and thinking regarding longtermism and/or large-scale risks.

This post primarily summarises and analyses existing ideas, rather than presenting novel ideas.

Existential catastrophe, extinction, and failed continuation

In The Precipice, Toby Ord defines an existential catastrophe as “the destruction of humanity’s longterm potential.”[2] Extinction is one way humanity’s long-term potential could be destroyed,[3] but not the only way. Ord gives the following breakdown of different types of existential catastrophe:

(For those interested, I’ve previously collected links to works on collapse and on dystopia.)

We could thus represent the relationship between the concepts of existential catastrophe, extinction, and failed continuation via the following Venn diagram:[4]

I’ve included in that Venn diagram some example scenarios. Note that:

- All of the example scenarios used in this post really are just examples; there are many other scenarios that could fit within each category, and some may be more likely than the scenarios I’ve shown.

- Whether a scenario really counts as an existential catastrophe depends on one’s moral theory or values (or the “correct” moral theory or values), because that affects what counts as fulfilling or destroying humanity’s long-term potential.

- The sizes of each section of each Venn diagram are not meant to reflect relative likelihoods. E.g., I don’t mean to imply that extinction and failed continuation are exactly as likely as each other.

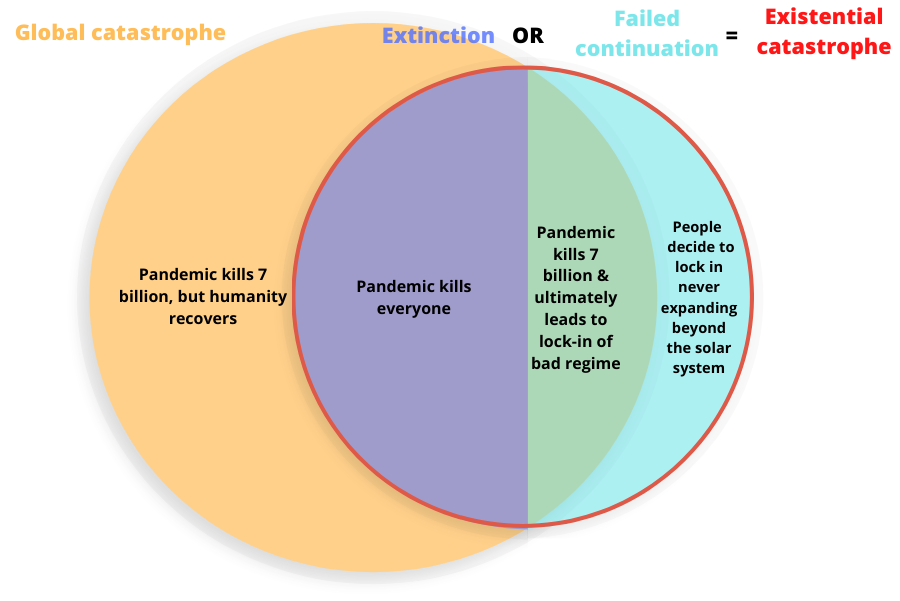

Global and existential catastrophes

Multiple definitions of global catastrophic risks have been proposed, some of which differ substantially. I’ll use the loose definition provided by Bostrom & Ćirković (2008, pp. 1-2) in the book Global Catastrophic Risks:

The term 'global catastrophic risk' lacks a sharp definition. We use it to refer, loosely, to a risk that might have the potential to inflict serious damage to human well-being on a global scale.

[...] a catastrophe that caused 10,000 fatalities or 10 billion dollars worth of economic damage (e.g., a major earthquake) would not qualify as a global catastrophe. A catastrophe that caused 10 million fatalities or 10 trillion dollars worth of economic loss (e.g., an influenza pandemic) would count as a global catastrophe, even if some region of the world escaped unscathed. As for disasters falling between these points, the definition is vague. The stipulation of a precise cut-off does not appear needful at this stage. [emphasis added]

Given this definition, many existential catastrophes would also be global catastrophes. This includes all potential extinction events, many or all potential unrecoverable collapse events, and many potential transitions to unrecoverable dystopia. However, the terms “existential catastrophe” and “global catastrophe” are not synonymous, for two reasons.

Firstly, a wide array of global catastrophes would not be existential catastrophes. Indeed, “global catastrophe” is a notably “lower bar”, and so global catastrophes may be much more likely than existential catastrophes. (See also Database of existential risk estimates.)

Secondly, some existential catastrophes wouldn’t be global catastrophes (given Bostrom & Ćirković’s definition), because they wouldn’t involve any sudden spike in deaths or economic damage. This applies most clearly to “desired dystopias”, in which a large portion of the people at the time actually favour the outcomes that occur (e.g., due to sharing a deeply flawed ideology). A desired dystopia may therefore not be recognised as a catastrophe by anyone who experiences it.[5] Ord’s (2020) “plausible examples” of a desired dystopia include:

worlds that forever fail to recognise some key form of harm or injustice (and thus perpetuate it blindly), worlds that lock in a single fundamentalist religion, and worlds where we deliberately replace ourselves with something that we didn’t realise was much less valuable (such as machines incapable of feeling).

It’s also possible that some transitions to undesired or enforced dystopias (e.g., totalitarian regimes) could occur with no (or little) bloodshed or economic damage.[6]

In contrast, unrecoverable collapse seems likely to involve a large number of deaths. It also seems to almost “by definition” involve major economic damage. And extinction would of course involve a huge amount of death and economic damage.

We could thus represent the relationship between the concepts of global catastrophe, existential catastrophe, extinction, and failed continuation via the following Venn diagram:

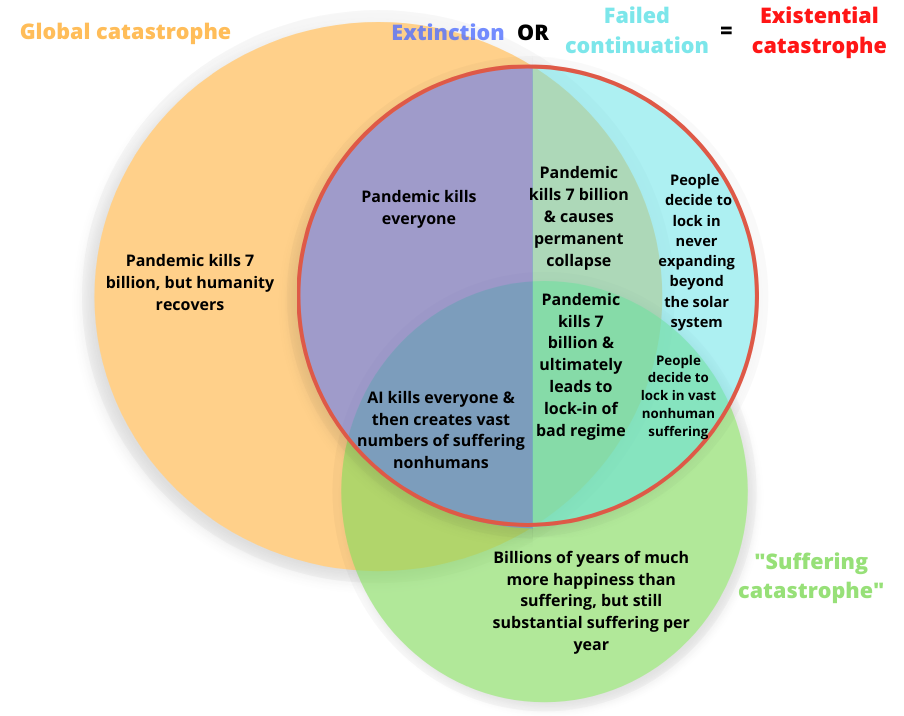

Suffering, existential, and global catastrophes

Suffering risks (also known as risks of astronomical suffering, or s-risks) are typically defined as “risks where an adverse outcome would bring about suffering on an astronomical scale, vastly exceeding all suffering that has existed on Earth so far” (Daniel, 2017).[7] (Update in 2022: That source has been updated to give the following definition instead: "S-risks are risks of events that bring about suffering in cosmically significant amounts. By “significant”, we mean significant relative to expected future suffering." This definition does seem better to me, for the reasons that Lukas Gloor mentions in a comment.)

I’ll use the term suffering catastrophe to describe the realisation of an s-risk; i.e., an event or process involving suffering on an astronomical scale.[8]

Two mistakes people sometimes make are discussing s-risks as if they’re entirely distinct from existential risks, or discussing s-risks as if they’re a subset of existential risks. In reality:

- There are substantial overlaps between suffering catastrophes and existential catastrophes, because some existential catastrophes would involve or result in suffering on an astronomical scale.

  - Most obviously, many unrecoverable dystopia scenarios would involve suffering of humans or nonhumans on an astronomical scale. For example, a stable, global totalitarian regime could cause many billions of humans to suffer in slavery-like conditions each generation, for many generations (see also Reducing long-term risks from malevolent actors). Or a “desired dystopia” might involve huge numbers of suffering wild animals (via terraforming) or suffering digital minds, because humanity fails to realise that that’s problematic.

  - As another example, some AI-induced extinction events may be followed by large amounts of suffering, such as if the AI then runs huge numbers of highly detailed simulations including sentient beings (see e.g. Tomasik).[9]

- But there could also be suffering catastrophes that aren’t existential catastrophes, because they don’t involve the destruction of (the vast majority of) humanity’s long-term potential.

  - This depends on one’s moral theory or values (or the “correct” moral theory or values), because, as noted above, that affects what counts as fulfilling or destroying humanity’s long-term potential.

  - For example, the Center on Long-Term Risk notes: “Depending on how you understand the [idea of loss of “potential” in definitions] of [existential risks], there actually may be s-risks which aren’t [existential risks]. This would be true if you think that reaching the full potential of Earth-originating intelligent life could involve suffering on an astronomical scale, i.e., the realisation of an s-risk. Think of a quarter of the universe filled with suffering, and three quarters filled with happiness. Considering such an outcome to be the full potential of humanity seems to require the view that the suffering involved would be outweighed by other, desirable features of reaching this full potential, such as vast amounts of happiness.”

  - In contrast, given a sufficiently suffering-focused theory of ethics, anything other than near-complete eradication of suffering might count as an existential catastrophe.

Likewise:

- There are substantial overlaps between suffering catastrophes and global catastrophes, because some suffering catastrophes (or the events that cause them) would involve major human fatalities and economic damage.

- But there could also be suffering catastrophes that aren’t global catastrophes, because they don’t involve major human fatalities and economic damage.

  - In particular, there could be suffering among nonhumans and/or ongoing human suffering without a spike in human fatalities at any particular time.

We could thus represent the relationship between the concepts of suffering catastrophe, existential catastrophe, and global catastrophe via the following Venn diagram (although this diagram might be inaccurate, given a sufficiently suffering-focused theory of ethics):

If you found this post interesting, you may also find interesting my previous posts Clarifying existential risks and existential catastrophes and 3 suggestions about jargon in EA.

I’m grateful to Tobias Baumann, Anthony D, and David Kristoffersson for helpful feedback. I’m also grateful to David for discussions that may have informed the ideas I expressed in this post. This does not imply these people's endorsement of all of this post’s claims.

I wrote this post in my personal time, and it doesn’t necessarily represent the views of any of my employers.

I’m making a distinction between “risk” and “catastrophe”, in which the former refers to the chance of a bad event happening and the latter refers to the bad event itself. ↩︎

Ord writes “I am understanding humanity's longterm potential in terms of the set of all possible futures that remain open to us. This is an expansive idea of possibility, including everything that humanity could eventually achieve, even if we have yet to invent the means of achieving it.” ↩︎

For the purposes of this post, “extinction” refers to the premature extinction of humanity, and excludes scenarios such as:

- humanity being “replaced” by a “descendant” which we’d be happy to be replaced by (e.g., whole brain emulations or a slightly different species that we evolve into)

- “humanity (or its descendants) [going] extinct after fulfilling our longterm potential” (Ord, 2020)

Those sorts of scenarios are excluded because they might not count as existential catastrophes. ↩︎

Alternative Venn diagrams one could create, and which could also be useful, would include a diagram in which failed continuation is broken down into its two major types, and a diagram based on Bostrom’s typology of existential catastrophes. ↩︎

However, certain other definitions of “global catastrophe” might capture all existential catastrophes as a subset. For example, Yassif writes “By our working definition, a GCR is something that could permanently alter the trajectory of human civilization in a way that would undermine its long-term potential or, in the most extreme case, threaten its survival.” Taken literally, that definition could include events that would involve no obvious or immediate deaths and economic damage, and that no one at the time recognises as a catastrophe. ↩︎

Seemingly relevantly, Bostrom’s classification of types of existential risk (by which I think he really means “types of existential catastrophe”) includes “plateauing — progress flattens out at a level perhaps somewhat higher than the present level but far below technological maturity”, as well as “unconsummated realization”. Both of those types seem like they could occur in ways such that the catastrophe doesn’t rapidly and directly lead to large amounts of death and economic damage.

It’s also possible that an existential catastrophe which doesn’t rapidly and directly lead to large amounts of death and economic damage could still lead to that eventually and indirectly. For example, the catastrophe might cause a failure to develop some valuable technology that would’ve been developed otherwise, and that would’ve saved lives or boosted the economy. If that happens, I personally wouldn’t say that that alone should make the existential catastrophe also count as a global catastrophe. But I also think that that’s a debatable and relatively unimportant point. ↩︎

This definition of course leaves some room for interpretation. See the rest of Daniel’s post for details. Also note that, in December 2019, the Center on Long-Term Risk (CLR) repeated this definition but added that “This definition may be updated in the near future.” (CLR is arguably the main organisation focused on s-risks, and is the organisation Daniel was part of when he wrote the above-linked post.) ↩︎

I haven’t actually seen the term “suffering catastrophe”, and sometimes the term “s-risk” is itself used to refer to the event, rather than the risk of the event occurring (e.g., here). But it seems to me preferable to have a separate term for the risk and the event. And “suffering catastrophe” seems to make sense as a term, by analogy to the relationship between (a) the terms “existential risk” and “existential catastrophe”, and (b) the terms “global catastrophic risk” and “global catastrophe”. ↩︎

Also, more speculatively, if there are (or will be) vast numbers of suffering sentient beings elsewhere in the universe, and humans currently have the potential to stop or substantially reduce that suffering, then human extinction or unrecoverable collapse could also represent a suffering catastrophe. See also The expected value of extinction risk reduction is positive. ↩︎

Edit: I just noticed that this post I'm commenting on is 2 years old (it came up in my feed and I thought it was new). So, the post wasn't outdated at the time!

That definition is outdated (at least with respect to how CLR thinks about it). The newer definition is the first sentence in the source you link to (it's a commentary by CLR on the 2017 talk):

Reasons for the change: (1) Calling the future scenario “galaxy-wide utopia where people still suffer headaches every now and then” an “s-risk” may come with the connotation (always unintended) that this entire future scenario ought to be prevented. Over the years, my former colleagues at CLR and I received a lot of feedback (e.g., here and here) that this was off-putting about the older definition.

(2) Calling something an “s-risk” when it doesn’t constitute a plausible practical priority even for strongly suffering-focused longtermists may generate the impression that s-risks are generally unimportant. The new definition means they're unlikely to be a rounding error for most longtermist views as they are defined* (except maybe if your normative views imply a 1:1 exchange rate between utopia and dystopia).

(*S-risks may still turn out to be negligible in practice for longtermist views that aren't strongly focused on reducing suffering if particularly bad futures are really unlikely empirically or if we can't find promising interventions. [Edit: FWIW, I think there are tractable interventions and s-risks don't seem crazy unlikely to me.])

Thanks for flagging this! I've now updated my post to include this new definition (I still use the old one first, but have added an explicit update in the main text).

This definition does seem better to me, for the reasons you mention.

One further ambiguity that IMO would be worth resolving if you ever come to edit this is between 'unrecoverable collapse' and 'collapse that in practice we don't recover from'. The former sounds much more specific (eg a Mad Maxy scenario where we render so much surface area permanently uninhabitable by humans, such that we'd never again be able to develop a global economy) and so much lower probability.

I'm honestly wondering if we should deliberately reject all the existing terminology and try to start again, since a) as you say, many organisations use these terms inconsistently with each other, and b) the terms aren't etymologically intuitive. That is, 'existential catastrophes' needn't either threaten existence or seem catastrophic, and 'global' catastrophes needn't affect the whole globe, or only the one globe.

Also it would be useful to have a term that covered the union of any two of the three circles, esp 'global catastrophe' + 'existential catastrophe', but you might need multiple terms to account for the ambiguity/uncertainty.

Why is "people decide to lock in vast nonhuman suffering" an example of failed continuation in the last diagram?

Failed continuation is where humanity doesn't go extinct, but (in Ord's phrase) "the destruction of humanity’s longterm potential" still occurs in some other way (and thus there's still an existential catastrophe).

And "destruction of humanity's longterm potential" in turn essentially means "preventing the possibility of humanity ever bringing into existence something close to the best possible future". (Thus, existential risks are not just about humanity.)

It's conceivable that vast nonhuman suffering could be a feature of even the best possible future, partly because both "vast" and "suffering" are vague terms. But I mean something like astronomical amounts of suffering among moral patients. (I hadn't really noticed that the phrase I used in the diagram didn't actually make that clear.) And it seems to me quite likely that a future containing that is not close to the best possible future.

Thus, it seems to me likely that locking in such a feature of the future is tantamount to preventing us ever achieving something close to the best future possible.

Does that address your question? (Which is a fair question, in part because it turns out my language wasn't especially precise.)

ETA: I'm also imagining that this scenario does not involve (premature) human extinction, which is another thing I hadn't made explicit.