Quick takes

I worked at OpenAI for three years, from 2021 to 2024, on the Alignment team, which eventually became the Superalignment team. I worked on scalable oversight, as part of the team developing critiques as a technique for using language models to spot mistakes in other language models. I then worked to refine an idea from Nick Cammarata into a method for using language models to generate explanations for features in language models. I was then promoted to managing a team of 4 people which worked on trying to understand language model features in context, leading to t...

Hi William! Thanks for posting. Can you elaborate on your motivation for posting this Quick Take?

Quick poll [✅ / ❌]: Do you feel like you don't have a good grasp of Shapley values, despite wanting to?

(Context for after voting: I'm trying to figure out if more explainers of this would be helpful. I still feel confused about some of its implications, despite having spent significant time trying to understand it)

I read your post while I was writing up the wiki article on Shapley values and thought it was really useful. Thanks for making that post!

Episodes 5 and 6 of Netflix's 3 Body Problem seem to have longtermist and utilitarian themes (content warning: spoiler alert)

- In episode 5 ("Judgment Day"), Thomas Wade leads a secret mission to retrieve a hard drive on a ship in order to learn more about the San-Ti who are going to arrive on Earth in 400 years. The plan involves using an array of nanofibers to tear the ship to shreds as it passes through the Panama Canal, killing everyone on board. Dr. Auggie Salazar (who invented the nanofibers) is uncomfortable with this plan, but Wade justifies it in th

Who else thought the user ID meant "leftism" not as in left of center, but as in those who left the community? :D

An alternate stance on moderation (from @Habryka.)

This is from this comment responding to this post about there being too many bans on LessWrong. Note how LessWrong is less moderated than here in that it (I guess) responds to individual posts less often, but more moderated in that (I guess) it rate limits people more without reason.

I found it thought-provoking. I'd recommend reading it.

...Thanks for making this post!

One of the reasons why I like rate-limits instead of bans is that it allows people to complain about the rate-limiting and to parti

I haven’t gone through this all the way, but I’ve loved Nicky Case’s previous explainers.

https://aisafety.dance/

Does requiring ex-ante Pareto superiority incentivise information suppression?

Assume I emit x kg of carbon dioxide. Later on, I donate to offset 2x kg of carbon dioxide emissions. The combination of these two actions seems to make everyone better off in expectation. It’s ex-ante Pareto superior. Even though we know that my act of emitting carbon and offsetting it will cause the deaths of different individuals due to different extreme weather events compared to not emitting at all, climate scientists report that higher carbon emissions will make...

Not sure how to post these two thoughts so I might as well combine them.

In an ideal world, SBF should have been sentenced to thousands of years in prison. This is partially due to the enormous harm done to both FTX depositors and EA, but mainly for basic deterrence reasons; a risk-neutral person will not mind 25 years in prison if the ex ante upside was becoming a trillionaire.
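The deterrence point above can be made concrete with a rough expected-value sketch. Everything numeric here is a hypothetical assumption chosen for illustration (the success probability, the dollar value of the upside, and the dollar cost a risk-neutral actor assigns to a year in prison), and the model simplifies by assuming that failure always leads to serving the full sentence.

```python
def expected_payoff(sentence_years, p_success, upside, cost_per_prison_year):
    """Expected payoff for a risk-neutral actor: gain the upside with
    probability p_success, otherwise serve the full sentence."""
    expected_gain = p_success * upside
    expected_loss = (1 - p_success) * sentence_years * cost_per_prison_year
    return expected_gain - expected_loss


def break_even_sentence(p_success, upside, cost_per_prison_year):
    """Sentence length (in years) at which the expected payoff is zero."""
    return (p_success * upside) / ((1 - p_success) * cost_per_prison_year)


# Illustrative assumptions only; none of these figures come from the post.
P_SUCCESS = 0.1              # assumed chance the gamble pays off
UPSIDE = 1e12                # assumed value of "becoming a trillionaire", in dollars
COST_PER_PRISON_YEAR = 1e7   # assumed dollar-equivalent cost of one year in prison

payoff_25 = expected_payoff(25, P_SUCCESS, UPSIDE, COST_PER_PRISON_YEAR)
threshold = break_even_sentence(P_SUCCESS, UPSIDE, COST_PER_PRISON_YEAR)

print(f"Expected payoff with a 25-year sentence: {payoff_25:.3g}")
print(f"Break-even sentence length in years: {threshold:.0f}")
```

Under these made-up numbers, a 25-year sentence leaves the gamble strongly positive in expectation, and the break-even sentence is on the order of ten thousand years. The result is very sensitive to the assumed inputs, so this only illustrates the shape of the argument.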

However, I also think many lessons from SBF's personal statements e.g. his interview on 80k are still as valid as ever. Just off the top of my head:

- Startup-to-give as a high EV caree

Watch team backup: I think we should be incredibly careful about saying things like, "it is probably okay to work in an industry that is slightly bad for the world if you do lots of good by donating". I'm sure you mean something reasonable when you say this, similar to what's expressed here, but I still wanted to flag it.

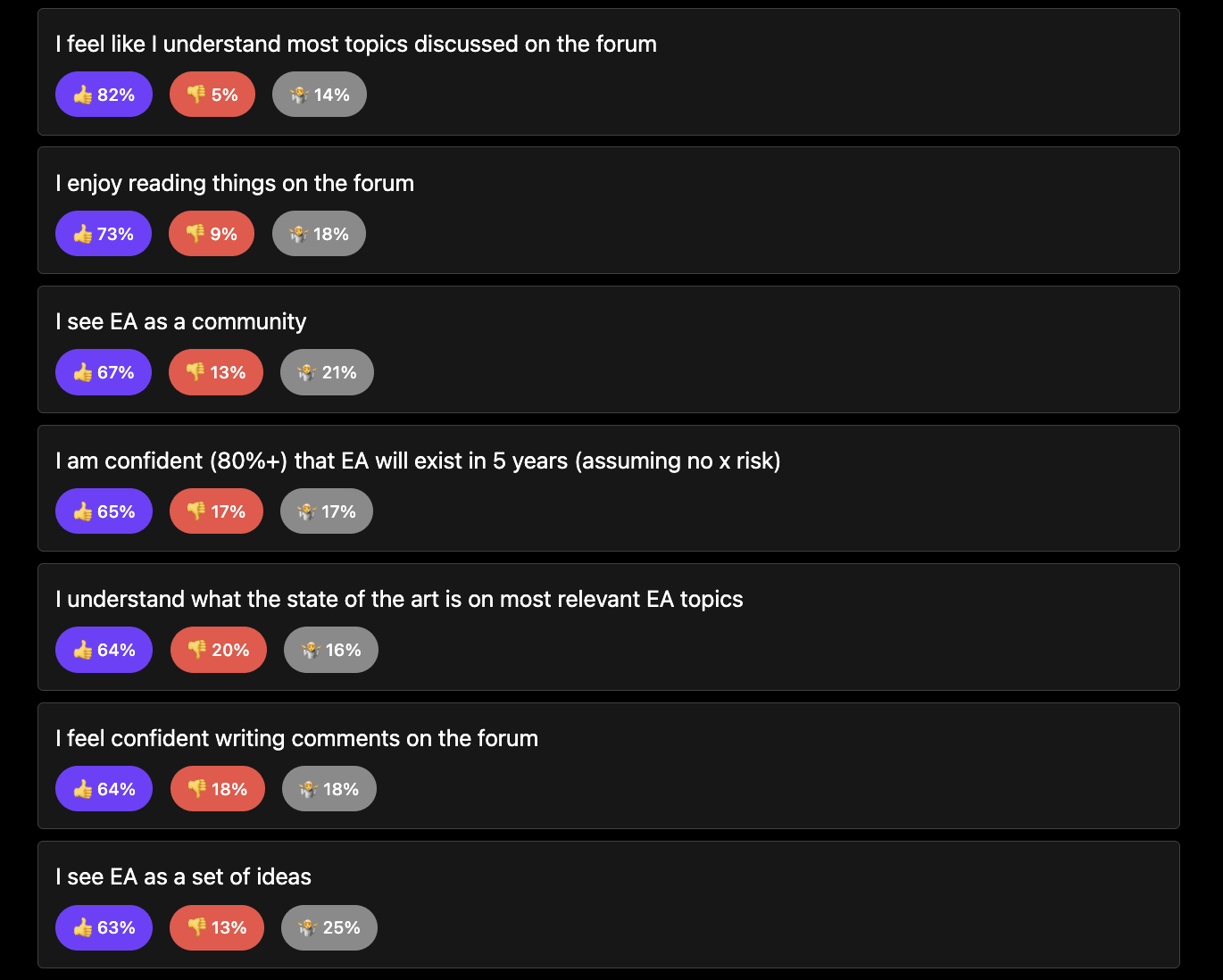

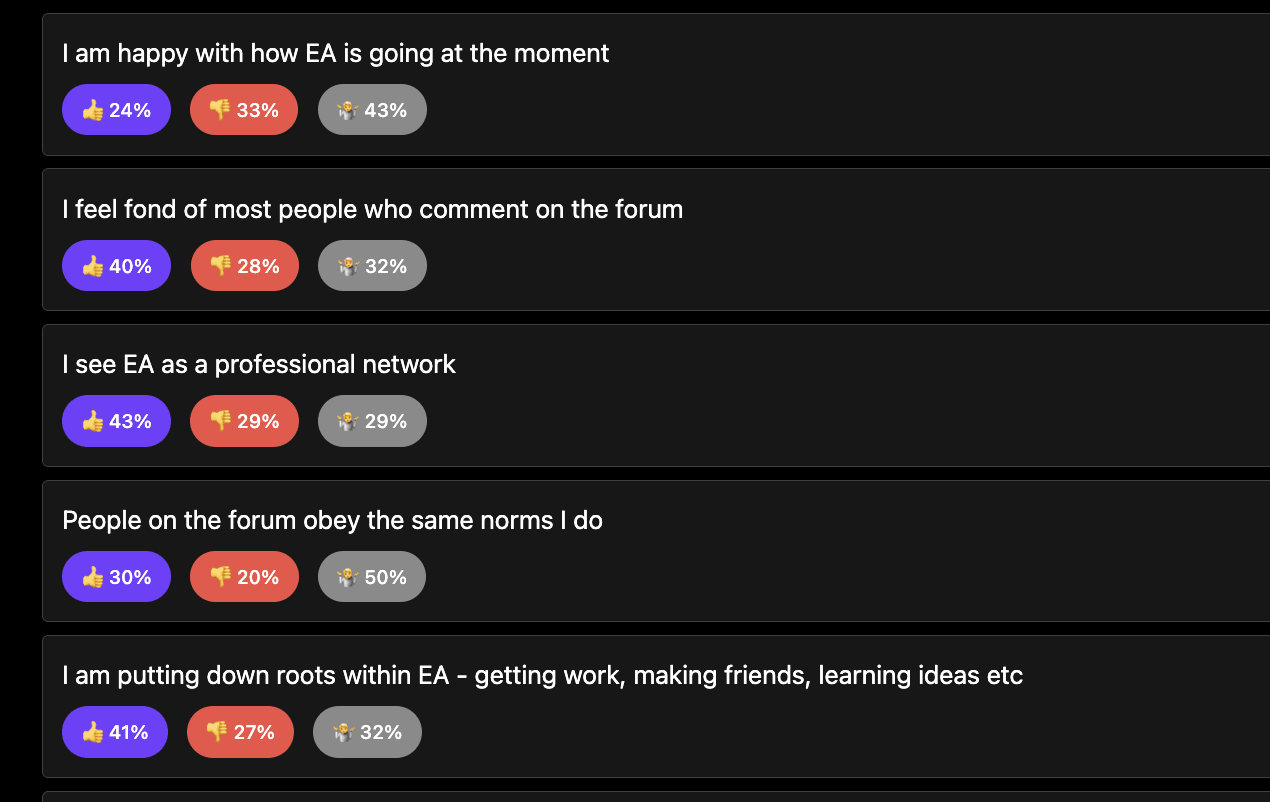

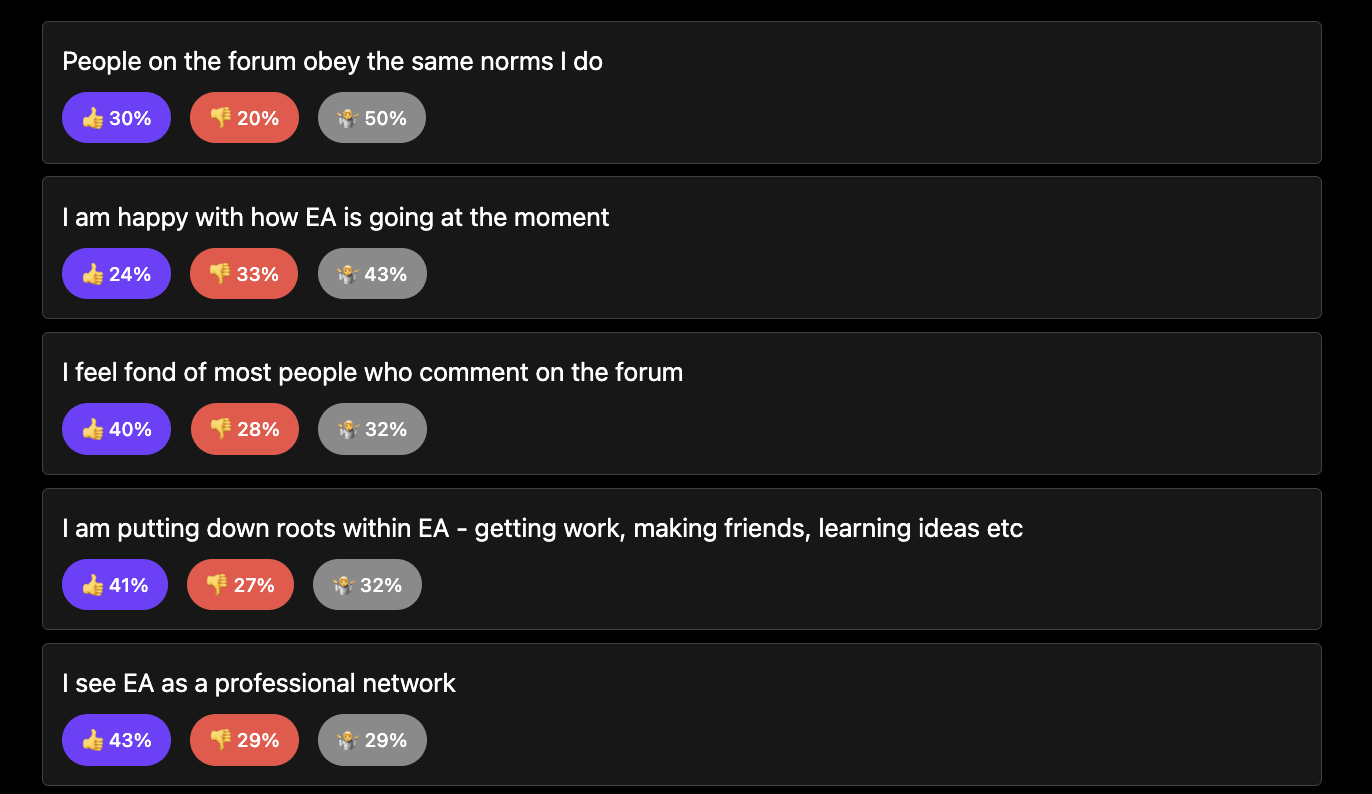

I made a quick (and relatively uncontroversial) poll on how people are feeling about EA. I'll share if we get 10+ respondents.

Currently 27-ish[1] people have responded:

Full results: https://viewpoints.xyz/polls/ea-sense-check/results

Statements people agree with:

Statements where there is significant conflict:

Statements where people aren't sure or dislike the statement:

[1] The applet makes it harder to track numbers than the full site.

I've said that people voting anonymously is good, and I still think so, but when I have people downvoting me for appreciating little jokes that other people post on my shortform, I think we've become grumpy.

I intend to strong downvote any article about EA that someone posts on here that they themselves have no positive takes on.

If I post an article, I have some reason I liked it. Even a single line. Being critical isn't enough on its own. If someone posts an article, without a single quote they like, with the implication it's a bad article, I am minded to strong downvote so that no one else has to waste their time on it.

What do you make of this post? I've been trying to understand the downvotes. I find it valuable in the same way that I would have found it valuable if a friend had sent me it in a DM without context, or if someone had quote tweeted it with a line like 'Prominent YouTuber shares her take on FHI closing down'.

I find posts like this useful because it's valuable to see what external critics are saying about EA. This helps me either a) learn from their critiques or b) rebut their critiques. Even if they are bad critiques and/or I don't think it's worth my...

I might start doing some policy BOTEC (back-of-the-envelope calculation) posts, i.e. where I suggest an idea and try to figure out how valuable it is. I think I could do this faster with a group to bounce ideas off.

If you'd like to be added to a message chat (on WhatsApp probably) to share policy BOTECs then reply here or DM me.

Is EA as a bait and switch a compelling argument for it being bad?

I don't really think so

- There are a wide variety of baits and switches, from what I'd call misleading to some pretty normal activities - is it a bait and switch when churches don't discuss their most controversial beliefs at a "bring your friends" service? What about wearing nice clothes to a first date? [1]

- EA is a big movement composed of different groups[2]. Many describe it differently.

- EA has done so much global health stuff I am not sure it can be described as a bait and switch. eg h

I think that there might be something meaningfully different between wearing nice clothes to a first date (or a job interview), as opposed to intentionally not mentioning more controversial/divisive topics to newcomers. I think there is a difference between putting your best foot forward (dressing nice, grooming, explaining introductory EA principles articulately with a 'pitch' you have practiced) and intentionally avoiding/occluding information.

For a date, I wouldn't feel deceived/tricked if someone dressed nice. But I would feel deceived if the person in...

Trump recently said in an interview (https://time.com/6972973/biden-trump-bird-flu-covid/) that he would seek to disband the White House office for pandemic preparedness. Given that he usually doesn't give specifics on his policy positions, this seems like something he is particularly interested in.

I know politics is discouraged on the EA forum, but I thought I would post this to say: EA should really be preparing for a Trump presidency. He's up in the polls and IMO has a >50% chance of winning the election. Right now politicians seem relatively receptive to EA ideas; this may change under a Trump administration.

I'd class those comments as mostly a disagreement around ends. The emphasis on not getting the credit from his own support base and Republicans not wanting to talk about it are the most revealing. A sizeable fraction of his most committed support base are radically antivax to the point there was audible booing at his own rally when he recommended they got the vaccine, even after he'd very carefully worded it in terms of their "freedoms". It's less a narrow disagreement about a specific layer of Biden bureaucracy and more a recognition that his base sees l...

There have been multiple occasions where I've copy and pasted email threads into an LLM and asked it things like:

- What is X person saying?

- What are the cruxes in this conversation?

- Summarise this conversation

- What are the key takeaways?

- What views are being missed from this conversation?

I really want an email plugin that basically brute forces rationality INTO email conversations.

Tangentially - I wonder if LLMs can reliably convert people's claims into a % through sentiment analysis? This would be useful for forecasters, I believe (and for rationality in general).

Do you believe that altruism actually makes people happy? Peter Singer's book argues that people become happier by behaving altruistically, and psychoanalysis also classifies altruism as a mature defense mechanism. However, there are also concerns about pathological altruism and people pleasers. In-depth research data on this is desperately needed.

Good question, and one I also think about!

After being deeply into EA for only a few months, I already realise that discussing it with non-EA people makes me emotional, since I "cannot understand" why they are not as easily convinced of it as I was. How can something so logical not be followed by everyone? At least by donating? I think there is a danger of becoming pathetic if you don't reflect on it and stay aware that you cannot convince everybody.

On the other hand, EA is already having a big impact on how I donate and how I act in my job - so in this ...

I think some of the AI safety policy community has over-indexed on the visual model of the "Overton Window" and under-indexed on alternatives like the "ratchet effect," "poisoning the well," "clown attacks," and other models where proposing radical changes can make you, your allies, and your ideas look unreasonable.

I'm not familiar with a lot of systematic empirical evidence on either side, but it seems to me like the more effective actors in the DC establishment overall are much more in the habit of looking for small wins that are both good in themselves ...

Is there any research on the gap between AI safety research and reality? I wanted to read Eric Drexler's report on R&D automation in AI development, but it was too long so I put it on hold.

It is very doubtful whether such things can actually be controlled.

(1) OpenAI incident

(2) Open-source projects such as Stockfish have their development process made public. However, it is very unclear and opaque (despite their best efforts).

Overall, I feel strongly that research on AI safety is disconnected from reality.

(EA) Hotel dedicated to events, retreats, and bootcamps in Blackpool, UK?

I want to try and gauge what the demand for this might be. Would you be interested in holding or participating in events in such a place? Or work running them? Examples of hosted events could be: workshops, conferences, unconferences, retreats, summer schools, coding/data science bootcamps, EtG accelerators, EA charity accelerators, intro to EA bootcamps, AI Safety bootcamps, etc.

This would be next door to CEEALAR (the building is potentially coming on the market), but mos...

For my org, I can imagine using this if it was 2x the size or more, but I can't really think of events I'd run that would be worth the effort to organise for 15 people.

(Maybe like 30% chance I'd use it within 2 years if it had 30+ bedrooms, less than 10% chance at the actual size.)

Cool idea though!