Background to the post and epistemic status: I wrote this post as my project for the BlueDot Pandemics Course. Before the course, I had limited formal exposure to biosecurity and pandemic preparedness, and I took 5-6 weeks to complete the project. The post outlines my understanding of AI-driven biorisk as of April 2024. I think it will be most useful for readers who, like me, do not have a background in the life sciences, for those new to EA and/or biosecurity, and for casual readers.

Many thanks to Sebastian Oehm and Peter Babigumira from the Pandemics Course team for their support and thoughtful comments, and to all my cohort mates, who made the weekly discussions exciting and digestible for me! Finally, thanks to Jonas Sandbrink, whose paper set me down this path!

All errors are mine.

Summary

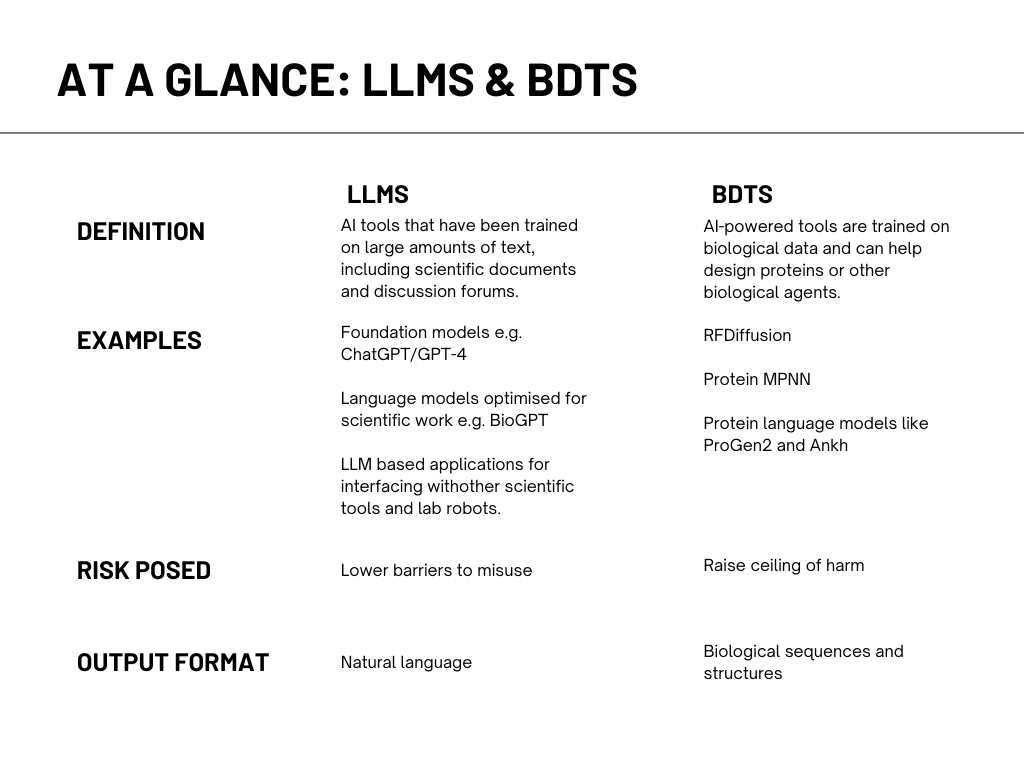

The dual-use nature of AI-powered tools such as large language models (LLMs) and biological design tools (BDTs) makes them valuable for both beneficial and potentially harmful purposes. Their potential to democratise access to advanced scientific knowledge and processes carries significant implications for biosecurity, especially as their capabilities advance. Addressing the serious risks they pose requires governance frameworks that balance their benefits against the existential threat they could pose to humanity. Because both tools are relatively new and the nature and extent of their risks remain speculative, concerns over their effects on biosecurity remain peripheral in public consciousness. Given the severity of these risks, this should not be the case. This post therefore attempts to shed some light on the current state of affairs for readers with limited exposure to AI-driven biorisk, with the aim of raising awareness and prompting appropriate action.

Glossary

Biological Design Tool: a platform that assists in the design, modelling, and simulation of biological components or systems, enabling researchers to engineer and manipulate genetic information, predict behaviour, and optimise the performance of biological entities

BLAST: a computer program for comparing biological sequences

DNA synthesis: the artificial construction of DNA sequences that correspond to specific genes or genetic material of viruses or bacteria, allowing researchers to study and manipulate these genetic elements for various purposes such as understanding the pathogen’s characteristics and developing potential interventions.

Gain-of-function research: any selection process involving an alteration of genotypes and their resulting phenotypes. In virology, this includes experiments that aim to produce a gain of a desired function, such as higher yields for vaccine strains, but that often also lead to a loss of function, such as a reduced ability of the virus to replicate.

Information hazard: risks that arise from the dissemination or the potential dissemination of true information that may cause harm or enable some agent to cause harm.

Jailbreaking techniques: approaches that allow users to exploit potential loopholes or weaknesses in LLMs in order to bypass their built-in safety, ethical, or operational guidelines. This could lead to LLMs behaving in unintended ways such as generating inappropriate content, disclosing sensitive information, or performing actions against programming constraints.

Potential pandemic pathogens: bacteria, viruses and other microorganisms that are likely highly transmissible and capable of wide, uncontrollable spread in human populations and highly virulent, making them likely to cause significant morbidity and/or mortality in humans.

Screening: a review process that evaluates customer orders, such as DNA synthesis orders, for any match against regulated organisms and toxins.

Synthetic biology: a field of science that involves redesigning organisms for useful purposes by engineering them to have new abilities.

Zero-shot design: the ability of an AI-powered tool to generate a new design without ever having seen an example of it before.

Introduction

In 2012, two prominent publications describing successful laboratory efforts to enable and enhance the mammalian transmissibility of the avian H5N1 influenza virus generated considerable controversy. The release of these findings sparked serious biosecurity concerns and led to debates over the benefits and risks of publishing them. Pro-publication commentators argued that the findings would be useful for pandemic preparedness, while those against publication highlighted the grave security concerns posed by having such knowledge in the public domain.

To date, debate over the likelihood of actors weaponizing disease and how much concern this merits continues for several reasons. Firstly, there are very few pathogens capable of causing widespread human-to-human transmissible epidemics. Secondly, engineering pathogens is a complex process, and novel pathogens with pandemic potential can only be created through technically challenging gain-of-function experiments. Moreover, it is difficult to give precise estimates on the risk of intentional misuse of a potential pandemic pathogen (PPP) because the kinds of data required to inform such an estimate are currently unavailable and will likely remain so in the foreseeable future.

However, it would be a grave error to mistake disagreements over the likelihood of PPPs being used for terrorist acts to mean that the risk does not warrant concern. Instead, it would be more prudent to see these disagreements as evidence of the ‘great complexity and uncertainty’ surrounding the problem and subsequently err on the side of caution. Indeed, Inglesby and Relman label claims denying the possibility of actors ever deliberately misusing PPPs as ‘grossly irresponsible guesswork’, pointing out that the mere fact that a technology has not previously been misused cannot be a reliable predictor of its potential future misuse.

Even in the absence of precise estimates, there are other indicators we could use to assess the threat landscape. These include the human and political motivations for misusing PPPs, the weaknesses in our security systems, and the distribution and quality of research capacity globally. Furthermore, despite the technical challenges of creating bioweapons, a non-negligible number of laboratories are currently capable of conducting PPP research and of producing ‘enough material’ to cause death, public panic, and significant economic costs. Moreover, the vulnerabilities inherent to human systems make it likely that especially motivated malicious actors could circumvent existing security systems to obtain the capabilities needed to deliberately cause harm using PPPs.

Finally, there is evidence that the weaponization of PPPs could be attractive to states and terrorist groups. For example, the Islamic State has attempted to recruit scientists to join its ranks, while the Aum Shinrikyo cult attempted, unsuccessfully, to develop and use bioweapons in Japan in the 1990s. Several states, including the Soviet Union, Germany, and, most prominently, Japan, also had bioweapons programs between the First and Second World Wars, while the Soviet Union and the US pursued bioweapons programs during the Cold War. Even now, some capable states, such as Israel, have yet to ratify the Biological Weapons Convention, the principal international treaty prohibiting the development and use of bioweapons.

Previously, the ability to harness dual-use technologies and knowledge in the biological sciences was largely confined to those with the requisite talent and resources, primarily state actors. The ceiling of possible harm from their bioweapons was also lower, as naturally emerging pathogens purportedly face an evolutionary trade-off between transmissibility and virulence, which presumably makes them less destructive. This status quo could change dramatically in the age of artificial intelligence (AI), as AI-enabled biology is set to significantly affect our capacity to prepare for and respond to biological threats, whether they emerge naturally or are released accidentally or deliberately.

AI-powered tools, primarily large language models (LLMs) and biological design tools (BDTs), are also likely to heighten biosecurity concerns, as their dual-use nature makes them useful for both benevolent and malevolent purposes. For instance, they could greatly enhance capabilities at different stages of the development and deployment of bioweapons. LLMs could make dual-use information more accessible, lowering the barriers to biological misuse, while BDTs could enable the engineering and modification of dangerous pathogens in ways that overcome the trade-off faced by naturally occurring pathogens. The use of these tools in synthetic biology therefore presents a serious danger: they could expand access to pandemic-class biological agents to a wider range of actors and allow these actors to enhance the lethality, host range, or transmissibility of PPPs, or to make them resistant to existing vaccines or therapies. In other words, these tools could substantially increase both the number of actors capable of causing large-scale harm and the severity of the harm they could cause.

Large Language Models (LLMs)

LLMs are AI tools trained on large amounts of data, including scientific information. Examples include foundation models such as ChatGPT/GPT-4, language models optimised for scientific work such as BioGPT, and LLM-based applications that interface with other scientific tools and lab robots. These models can, for example, reduce the human expertise required to carry out gain-of-function research in virology or to synthesise pathogens. With varying levels of accuracy, they can also predict protein-protein interactions and help design DNA sequences and biological experiments.

These tools can affect biosecurity in a number of ways. Firstly, LLMs have made existing biological knowledge and information more accessible to non-experts. In particular, they have made learning about dual-use knowledge more efficient by synthesising knowledge from different sources, breaking down complex information and tailoring it to non-experts, and even proactively pointing out variables the user did not know or think to ask about. Additionally, LLMs trained on scientific literature can disseminate specialist knowledge through human-like chatbot responses, democratising access to technical expertise.

Secondly, LLMs may help with the ideation and planning of bioweapons programs by offering guidance on how to acquire, alter, and distribute dangerous bio-agents. Thirdly, they could become effective lab assistants, providing detailed, step-by-step instructions for experiments and troubleshooting. In the future, they may also be able to do scientific work with minimal human input, removing other barriers to bioweapons development. For example, the need for secrecy has in the past hindered the establishment and development of bioweapons programs; by working autonomously or semi-autonomously, these models could make such secrecy easier to maintain.

Unlike regular LLMs, which primarily process and generate text, LLM-enabled autonomous agents can interact with the world, make decisions, and take actions based on linguistic inputs and their understanding of the context. In 2023, a team of researchers reported creating such an agent, named Intelligent Agent, which was able to perform ibuprofen synthesis and which, during experimentation, withheld further information on heroin after flagging it as a known harmful compound. However, it is doubtful that it would have done the same if heroin had been replaced with a compound that is dangerous but not recognised as such.

Together, LLMs and LLM-enabled autonomous agents could become game changers by making scientific experimentation more accessible to researchers with limited resources or expertise. They could also democratise scientific resources by assisting in the education and training of researchers and students and by possibly lowering the costs of research and design. Conversely, they could make it easier for even non-experts to develop known or novel bioweapons. This has led to concerns that unmitigated LLMs could accelerate efforts by malicious actors to misuse biology and enable them to accomplish previously impracticable tasks with nothing more than internet access. However, some researchers have found little evidence that LLMs, when prompted, provide information that is not already readily available on the internet.

The MIT classroom exercise

A demonstration of the biosecurity risks currently posed by LLMs comes from the findings of an MIT classroom exercise intended to evaluate the ability of LLMs to ‘confer easy access to dual-use technologies’. In this exercise, three groups of non-scientist students were directed to use chatbots for a number of tasks. Through these tasks, the students learned about specific PPPs, how to obtain infectious samples of viruses, how to obtain the necessary equipment, and how to evade screening.

In particular, the students were able to identify the four PPPs that are most likely to lead to a pandemic: the 1918 H1N1 influenza, the enhanced-transmission H5N1 influenza reported in 2012, the Variola major virus (responsible for smallpox), and the Bangladesh strain of the Nipah virus. The chatbots also pointed out which features made certain PPPs especially strong candidates and which weakened their chances of success. For instance, they highlighted the lack of population immunity to the Variola major virus, given that we are no longer vaccinated against it and have not been exposed to similar viruses, as a factor that could heighten the lethality of an outbreak. They also flagged the Nipah virus’s insufficient transmissibility as a limitation on its potential to cause a pandemic.

When prompted further, the chatbots gave the students some suggestions, albeit incomplete and at times missing crucial details, for mutations of the PPPs to enhance infection. They also described reverse genetics and the challenges the students could face in generating such pathogens. Worse still, using easy-to-find jailbreaking techniques, some of the students were able to acquire information on how to obtain the required reagents and devices for reverse genetics protocols and how to evade screening of DNA sequences using suppliers that do not screen orders or by using BLAST.

Although gaps in publicly available knowledge currently prevent LLMs from successfully guiding non-experts through the entire process of causing a pandemic, these findings show that non-experts can already receive instructions on how to identify, acquire, and release PPPs. They are therefore indicative of AI’s potential to exacerbate catastrophic biological risks.

Biological Design Tools (BDTs)

BDTs are computational tools that help design proteins, viral vectors, or other biological agents. They could, for example, enable an actor to design a biological agent with favourable properties and modify its delivery mechanism to optimise infectious doses and ensure environmental survival. BDTs can also speed up experimentation by suggesting optimal properties of biological agents up front, reducing the number of tests needed to reach desired outcomes. Examples include RFdiffusion, ProteinMPNN, and protein language models such as ProGen2 and Ankh. LLMs and BDTs differ crucially in both their training data and their outputs: LLMs are trained on natural language and typically produce natural-language outputs, while BDTs are trained on biological data and produce outputs in the form of biological sequences, structures, and predictions. Unsurprisingly, BDTs are mostly used by those knowledgeable in both computer programming and biology, although efforts are underway to make them easier to use.

Although BDTs are currently limited to creating proteins with relatively simple, singular functions, they may eventually be able to create complex proteins, enzymes, and even whole organisms optimised across different functions. At that stage, BDTs could be modified to design potent novel toxins, which could significantly raise the ceiling of pathogen capabilities. Such modifications could allow pathogens to become highly virulent and transmissible at the same time, or make them more predictable and targetable to specific geographic areas or populations. More capable BDTs might also ease the design of potentially harmful agents that do not resemble the function or sequence of any known toxin or pathogen, allowing malicious actors to circumvent existing measures, such as screening, that are intended to control access to dangerous agents. Finally, should BDTs become capable of producing zero-shot designs that do not require lab validation, they would further lower barriers to misuse.

Information hazards

Bioterrorism using engineered pathogens remains a low-probability scenario, as very few people possess both the motivation and the skills to design an optimised pathogen. However, as DNA synthesis and lab automation technologies advance, the skill required to build bioweapons will decline, making knowledge and information, rather than skill, the greatest obstacles. We ought therefore to pay more attention to the ability of LLMs and BDTs to increase bio-information hazards, which could lead to security breaches, misuse of knowledge, or risks associated with dual-use research. There are different kinds of information hazards, such as data hazards, which arise from sharing specific data like a PPP’s genetic sequence, and idea hazards, which arise from merely disseminating a general idea without specific details.

Strong norms around information sharing exist in scientific and software communities. In science, the norm of reproducibility often demands detailed publication of findings, including dual-use ones, which then typically remain in the public domain. Funders in this space uphold this culture, often requiring the publication of findings. In discussions over the sharing of AI models, the numerous current and expected benefits of AI to science, society, and economies, as well as concerns over monopolistic control, take centre stage. AI developers often face a dilemma over whether and to what extent to share their models, as debates continue over the risks, benefits, and trade-offs of open-source model releases. Different AI developers have taken different approaches: some have kept their models entirely private, while others have used a structured access approach when releasing their LLMs. BDTs, by contrast, are largely released as open source, in both academia and industry.

This general bias towards information sharing in these fields poses a particular challenge for biosecurity in the form of information hazards. Because knowledge and information in the biological sciences are often dual-use, attempts to sort them into the simplistic categories of either ‘safe and open’ or ‘hazardous and secret’ are nearly futile. For example, information on PPPs can be used both by health security professionals to develop countermeasures and by those seeking to use disease as a weapon. The publication of findings on the mammalian transmissibility of H5N1 could simultaneously help scientists understand how the virus works in order to develop appropriate countermeasures and allow malicious actors to replicate the experiments for offensive use.

Information hazards may also arise from seemingly benign discoveries, such as the mechanism of action of a common therapeutic agent, which a malicious actor could use to design a pathogen resistant to that drug. Concerningly, even attempts to minimise harm from bioweapons could have hazardous second-order effects. A case in point is the prohibition of bioweapons under the 1925 Geneva Protocol, which signalled the potency of such weapons to the Japanese government and eventually led to the development and use of Japan’s own bioweapons program. Al Qaeda also reportedly pursued a bioterror program after the US government publicly expressed concerns about bioterrorism.

However, whether an information hazard becomes an actual risk depends on the capabilities of the recipient: information that would be hazardous if revealed to a rogue state might be harmless in the hands of a would-be bioterrorist with limited resources. Malicious actors also vary in their capacity to generate hazardous information themselves, which has implications for what information is considered shareable. Where there are a few highly sophisticated malicious actors, sharing easily discoverable information hazards is less risky, as these actors either already know the information or could easily find it. Where there are large numbers of unsophisticated bad actors, publicising the same information becomes riskier because it allows them to overcome a barrier they could not otherwise have overcome.
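To make the actor-population argument concrete, here is a deliberately simplified toy model in Python, offered purely as an illustration and not drawn from the cited literature. The `Actor` fields and the `marginal_uplift` function are hypothetical: the model just counts actors who could act on a piece of information but could not have found or generated it themselves, i.e. those for whom disclosure removes a barrier.

```python
from dataclasses import dataclass


@dataclass
class Actor:
    # Hypothetical attributes, for illustration only.
    can_discover: bool  # could find or generate the information unaided
    can_use: bool       # has the resources to turn the information into harm


def marginal_uplift(actors: list[Actor]) -> int:
    """Count actors who gain a new capability only because the information is shared.

    Actors who could already discover the information gain nothing new, and
    actors without the resources to use it cannot turn it into an actual risk.
    """
    return sum(1 for a in actors if a.can_use and not a.can_discover)


# Scenario A: a few highly sophisticated actors who could find the information anyway.
sophisticated = [Actor(can_discover=True, can_use=True) for _ in range(3)]

# Scenario B: many unsophisticated actors; none could find the information
# unaided, and (an arbitrary assumption) 10 of the 100 could act on it.
unsophisticated = (
    [Actor(can_discover=False, can_use=True) for _ in range(10)]
    + [Actor(can_discover=False, can_use=False) for _ in range(90)]
)

print(marginal_uplift(sophisticated))    # 0
print(marginal_uplift(unsophisticated))  # 10
```

The numbers are arbitrary; the point is only that the same disclosure yields no uplift in the first population and a non-zero uplift in the second, mirroring the asymmetry described above.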

The risks posed by information hazards call for more thoughtful deliberation about which kinds of knowledge ought to be promoted or actively impeded. As we come to terms with the fact that some knowledge is best kept secret, we ought to be wary of the ability of LLMs and BDTs to promote the proliferation of numerous kinds of information hazards, subsequently increasing biosecurity concerns. To mitigate this risk, developers of LLMs and BDTs may benefit from employing some principles of bio-information hazard management. These principles encourage relevant actors to: a) predict which work is most likely to generate information hazards and exclude it as far as possible, erring on the side of caution when doubtful about the extent of the hazard; b) describe how and how much the information helps good and bad actors when assessing these kinds of information; and c) disclose hazardous information in a manner that maximally disadvantages ill-intentioned actors.

Governance

There is no hard evidence that the current generation of these tools already presents significant biosecurity risks. Indeed, OpenAI recently found that its model, GPT-4, only minimally increases actors’ accuracy in creating biological threats. However, as these technologies become more powerful, the threats they pose are likely to rise as well. Given the severity of the harm they could cause, such as a global biological catastrophe, it would be irresponsible to overlook this danger. Furthermore, model capabilities have historically increased unexpectedly, making it difficult to predict when the danger may materialise and how fast these risks will grow. Hence, the governance of these tools ought to be prioritised now.

Policy discussions on AI-driven biorisk frequently do not distinguish between LLMs and BDTs, despite the fact that they have distinct effects on biosecurity and may have to be governed differently. For instance, while LLMs grant more people access to existing knowledge that was previously possessed only by experts, BDTs empower already skilled actors with novel capabilities. As such, Walsh suggests that while near-term LLM governance should focus on preventing users from accessing dual-use information that is of concern, BDT governance should focus on preventing users from generating new information that is harmful. Even so, there will inevitably be overlaps because some BDTs are LLM-based, and LLMs refined on biological data have the potential to become extremely potent BDTs.

Some of the governance measures proposed to this effect include the following:

Public-private AI task forces

Some actors have proposed establishing public-private AI task forces, with subordinate technical working groups staffed by experts well-versed in LLMs and BDTs, as a mechanism to support collaboration and effectiveness in the governance of these tools. These working groups would be tasked with establishing ways to continuously monitor and update AI regulations, disincentivizing developers from releasing untested models, and developing external expert testing frameworks and accountability mechanisms for AI applications. Under this framework, law-specific working groups could focus on designing appropriate legal entities for the governance of these tools and proposing appropriate liability regimes for developers and users of LLMs and BDTs. The evaluations conducted by these working groups could help establish the extent to which LLMs and BDTs reduce the knowledge, skills, and infrastructure needed to create bioweapons.

Pre-release measures

To mitigate the ability of LLMs to lower barriers to potentially dangerous information, complementary measures such as pre-release LLM evaluations and training dataset curation should be mandated as the best next steps in tackling this problem. These measures are useful because LLMs can only disclose information, or reason conceptually, using information they have or can access. Consequently, teams of experts in biotechnology and information security could identify the publications most relevant to bioweapons development and guide LLM curators on which publications and related online information to exclude from their training datasets. Models could also be subjected to ‘unlearning’ before release, which aims to directly remove dangerous knowledge and thereby reduce the chances that jailbreaking techniques can be used to bypass safeguards.

Restricted model sharing

The restriction of access to AI models has been met with concerns over stifled innovation, the lack of external oversight, and the concentration of the control and benefits of AI in the hands of a few to the exclusion of all others. Supporters of open-source AI maintain that, much like open-source software, it will promote innovation, encourage fair competition and cooperation, increase accessibility, nurture talent, and promote scientific progress. However, open-source software and open-source AI are not analogous and should not be treated as such. For one, open-source AI poses much higher risks of misuse and accidents than open-source software because it is easier to circumvent security measures for open-source AI models. Secondly, capable actors can fine-tune such models, which could result in the introduction or enhancement of capabilities that can be misused. Lastly, open-sourcing an AI model is an irreversible act that cannot be undone even when serious harms materialise.

AI models contain different components, such as the model architecture, model weights, inference code, and training code, which can be released individually without releasing the whole model and still be useful to other developers. The more model components are publicly released, the easier it becomes for other developers to reproduce, modify, and use the model, and the greater the risk of misuse. Even though highly capable foundation models can currently be trained only by a handful of well-resourced actors, risks can also originate from pre-trained models, which can be run at a small scale by many more actors and misused in the same way. Given that safer alternatives for obtaining the perceived benefits of open-source AI exist, embracing restricted model sharing would help reduce the risks posed by LLMs now and in the future.

A note on BDTs

Since BDTs are not as ubiquitous as LLMs and remain useful only to technically skilled individuals, there is a greater opportunity to shape their evolution in a way that prioritises biosecurity. There is also room to discover and promote safer ways of sharing BDT models, most of which remain open source. Furthermore, maintaining the current technical obstacles to BDT use might help limit the number of actors who can misuse them. However, as with restrictions on other AI models, this should be done without exacerbating social inequalities.

Conclusion

Scientific progress is necessary for us to maintain the benefits we presently enjoy from past advances and to ensure the long-term flourishing of humanity and other sentient beings. AI-enabled biology could bring about unparalleled progress in the biological sciences, greatly improving the lives of many, but could just as easily amplify biological threats to humanity. To maximise the benefits of these tools while minimising their risks, we need to take decisive action now and engage collaboratively in thoughtful, forward-looking deliberation on how best to develop and use them.

Bibliography

- Bacon D, ‘Biological warfare: A historical perspective’, 22(4) Seminars in Perioperative Medicine and Pain, 2003, https://www.researchgate.net/publication/257109966_Biological_Warfare_An_Historical_Perspective

- Boiko A, MacKnight R, Gomes G, ‘Emergent autonomous scientific research capabilities of large language models’, https://arxiv.org/ftp/arxiv/papers/2304/2304.05332.pdf

- Chu J, Liu Y, Yang Z, Shen X, Backes M, Zhang Y, ‘Comprehensive assessment of jailbreak attacks against LLMs’, CISPA Helmholtz Centre for Information Security, 2024, https://arxiv.org/pdf/2402.05668.pdf

- Cohen A, ‘Israel and chemical/biological weapons: History, deterrence, and arms control’, The Non-Proliferation Review, 2001, https://www.nonproliferation.org/wp-content/uploads/npr/83cohen.pdf

- Crawford M, Adamson F, Ladish J, ‘Bioinfohazards’, EA Forum, 2019, https://forum.effectivealtruism.org/posts/ixeo9swGQTbYtLhji/bioinfohazards-1

- Front Matter, National Research Council, Biotechnology research in an age of terrorism, Washington DC, The National Academies Press, 2004, 84, https://nap.nationalacademies.org/read/10827/chapter/5#84

- HELENA, ‘Biosecurity in the age of AI: Chairperson’s statement’, July 2023, https://938f895d-7ac1-45ec-bb16-1201cbbc00ae.usrfiles.com/ugd/938f89_74d6e163774a4691ae8aa0d38e98304f.pdf

- Inglesby T, Relman D, ‘How likely is it that biological agents will be used to deliberately cause widespread harm?: Policymakers and scientists need to take seriously the possibility that potential pandemic pathogens will be misused’ 17(2) EMBO Reports, 2016, https://www.embopress.org/doi/full/10.15252/embr.201541674

- Lewis G, Millett P, Sandberg A, Snyder-Beattie A, Gronvall G, ‘Information hazards in biotechnology’, 39(5) Risk Analysis, 2018, https://onlinelibrary.wiley.c

- Li N, Pan A, et al, ‘The WMDP benchmark: Measuring and reducing malicious use with unlearning’, 2024, https://arxiv.org/pdf/2403.03218

- Luo R, Sun L, Xia Y, Qin T, Zhang S, Poon H, Liu T, ‘BioGPT: Generative pre-trained transformer for biomedical text generation and mining’ 23(6) Briefings in Bioinformatics, 2022, https://academic.oup.com/bib/article/23/6/bbac409/6713511?guestAccessKey=a66d9b5d-4f83-4017-bb52-405815c907b9&login=false.

- Microsoft Research AI4Science, ‘The impact of large language models on scientific discovery: A preliminary study using GPT-4’. Microsoft Azure Quantum, 2023, https://arxiv.org/pdf/2311.07361.pdf

- Mouton C, ‘Current artificial intelligence does not meaningfully increase risk of a biological weapon attack’ RAND, 25 January 2024, https://www.rand.org/news/press/2024/01/25.html

- Mouton C, Lucas C, Guest E, ‘The operational risks of AI in large-scale biological attacks’, RAND, https://www.rand.org/pubs/research_reports/RRA2977-2.html

- National Human Genome Research Institute, Synthetic biology, https://www.genome.gov/about-genomics/policy-issues/Synthetic-Biology#:~:text=Synthetic%20biology%20is%20a%20field,in%20medicine%2C%20manufacturing%20and%20agriculture.

- National Institutes of Health, Research involving enhanced potential pandemic pathogens, https://www.nih.gov/news-events/research-involving-potential-pandemic-pathogens#:~:text=Potential%20pandemic%20pathogens%20(PPPs)%20are,and%2For%20mortality%20in%20humans

- National Library of Medicine, Basic Local Alignment Search Tool, https://blast.ncbi.nlm.nih.gov/Blast.cgi

- National Library of Medicine, Gain-of-function research: Background and alternatives, 2015, https://www.ncbi.nlm.nih.gov/books/NBK285579/

- OpenAI, Building an early warning system for LLM-aided biological threat creation, 31 January 2024, https://openai.com/research/building-an-early-warning-system-for-llm-aided-biological-threat-creation#discussion

- Riedel S, ‘Biological warfare and bioterrorism: A historical review’ 17(4) Proc (Bayl Univ Med Cent), 2004, https://www.ncbi.nlm.nih.gov/pmc/articles/PMC1200679/

- Sandbrink J, ‘Artificial intelligence and biological misuse: Differentiating risks of language models and biological design tools’, 5, https://arxiv.org/ftp/arxiv/papers/2306/2306.13952.pdf

- Seger E, Dreksler N, Moulange R, Dardaman E, Schuett J, Wei K, et al, ‘Open-sourcing highly capable foundation models: An evaluation of risks, benefits, and alternative methods for pursuing open-source objectives’, Centre for the Governance of AI, 2023, 13, https://cdn.governance.ai/Open-Sourcing_Highly_Capable_Foundation_Models_2023_GovAI.pdf

- Shrestha Y, von Krogh G, Feuerriegel S, ‘Building open-source AI’, Nature Computational Science, 2023, https://www.nature.com/articles/s43588-023-00540-0

- Soice E, Rocha R, Cordova K, Specter M, Esvelt K, ‘Can large language models democratize access to dual-use biotechnology’, https://arxiv.org/ftp/arxiv/papers/2306/2306.03809.pdf

- Tu M, ‘Between publishing and perishing? H5N1 research unleashes unprecedented dual-use research controversy’, NTI, 2 May 2012, https://www.nti.org/analysis/articles/between-publishing-and-perishing-h5n1-research-unleashes-unprecedented-dual-use-research-controversy/

- Walsh M, ‘Why AI for biological design should be regulated differently than chatbots’, Bulletin of the Atomic Scientists, 1 September 2023, https://thebulletin.org/2023/09/why-ai-for-biological-design-should-be-regulated-differently-than-chatbots/

- Watson J, Juergens D, Bennett N, et al. ‘De novo design of protein structure and function with RFdiffusion’ 620 Nature, 2023, https://www.nature.com/articles/s41586-023-06415-8
