David_Moss

Bio

I am the Principal Research Director at Rethink Priorities. I lead our Surveys and Data Analysis department and our Worldview Investigation Team.

The Worldview Investigation Team previously completed the Moral Weight Project and CURVE Sequence / Cross-Cause Model. We're currently working on tools to help EAs decide how they should allocate resources within portfolios of different causes, and how to use a moral parliament approach to allocate resources given metanormative uncertainty.

The Surveys and Data Analysis Team primarily works on private commissions for core EA movement and longtermist orgs, where we provide:

- Private polling to assess public attitudes

- Message testing / framing experiments, testing online ads

- Expert surveys

- Private data analyses and survey / analysis consultation

- Impact assessments of orgs/programs

Formerly, I also managed our Wild Animal Welfare department. I've previously worked for Charity Science and been a trustee at Charity Entrepreneurship and EA London.

My academic interests are in moral psychology and methodology at the intersection of psychology and philosophy.

How I can help others

Survey methodology and data analysis.

Posts 42

Comments 513

This is not a bad summary overall, but has some errors/confusions:

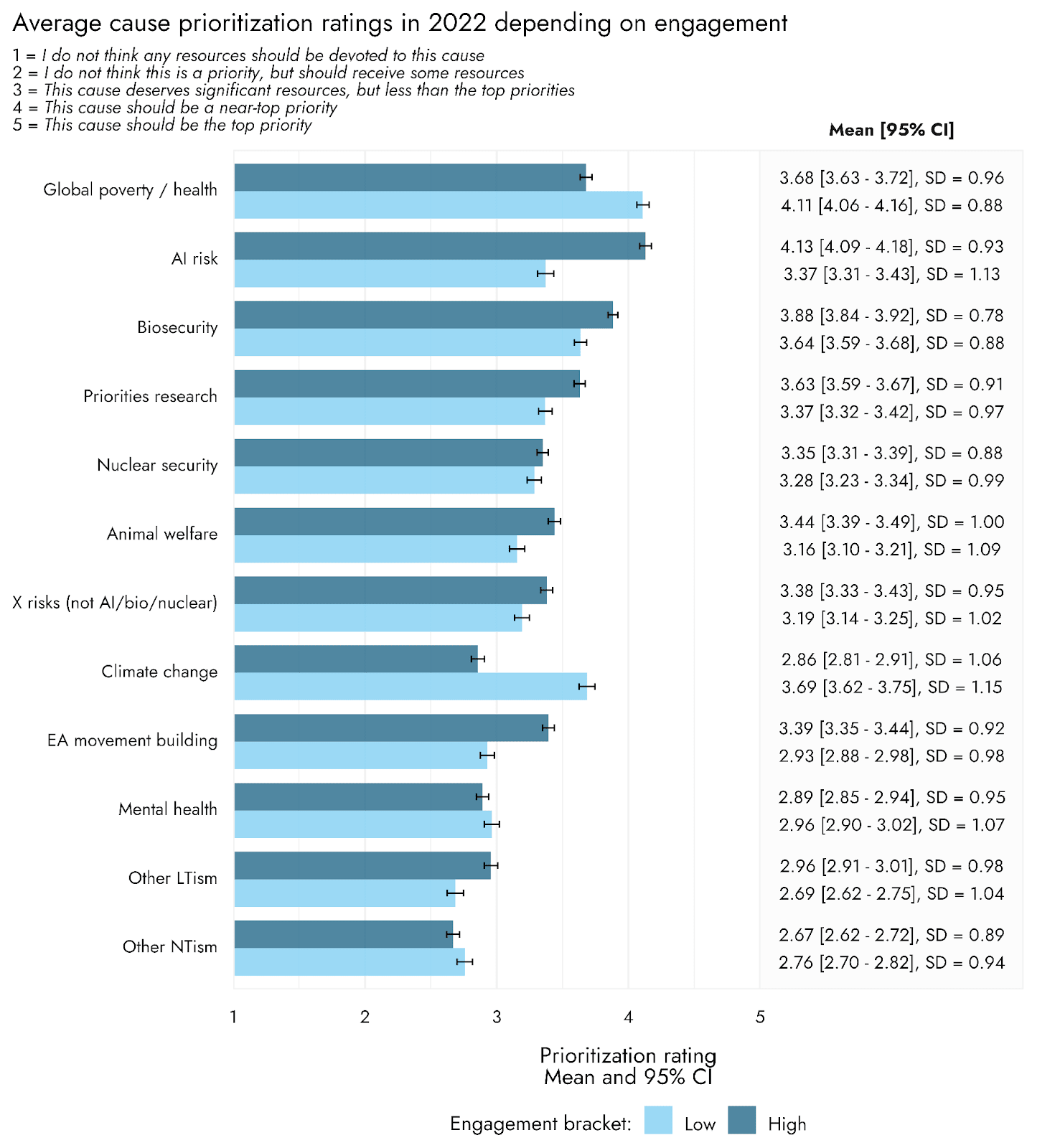

Longtermist causes are prioritized by 63.6% of respondents vs. 46.8% for neartermist causes, a gap that widens among highly engaged EAs.

Both parts of this are technically true, but the statistic referred to in the first half of the sentence is different from the one that we reported to show the gap between low/high engagement EAs.

Comparable statistics would be:

- Overall: 37.6% of respondents most prioritised only a longtermist cause, 21.0% most prioritised only a neartermist cause.

- Among the highly engaged, 47% most prioritized only a longtermist cause and only 13% most prioritized a neartermist cause.

- Among the less engaged, 26% most prioritized a longtermist cause and 31% most prioritized a neartermist cause.

In an allocation task, respondents assign the most resources to Global health/poverty, then AI risk, then animal welfare

True looking at farmed animal welfare, but a combined animal welfare category would be neck and neck with AI (slightly ahead but not significant).

with actual allocations lower on Global poverty and animal welfare than the survey or an earlier survey of EA leaders.

Actual allocations to Global Poverty are higher than our survey allocations and actual allocations to FAW are lower. I don't have statistics for actual allocations to WAW, but they are likely dramatically lower.

Thanks for the detailed comment!

I have to object to this. I don't think longtermism is best understood as a cause, or set of causes, but more as a justification for working on certain causes over others. e.g.:

Working on Nuclear Risk could be seen as near-termist.

We agree and say as much:

As we have noted previously, people may of course prioritise these causes for reasons other than longtermism or neartermism per se. Likewise, people might support the ‘Other’ causes here for neartermist or longtermist reasons.

And here's what we say at the linked previous post:

For simplicity, we label support for these, non-longtermist, causes “neartermist”, as we have in previous years. However, it’s worth noting explicitly that there is little reason to suppose that neartermism specifically, (e.g., attitudes or beliefs related to helping present vs. future generations or different time preference) explain support for these causes, rather than different epistemic beliefs (e.g., about appropriate kinds of evidence) or support for more traditional causes etc.

We also explicitly discuss your example of Climate Change making much the same point:

The classifications we used here were informed by our prior analyses of the factor structure of the cause prioritisation items and a priori theoretical considerations (namely Climate Change is clearly associated with the neartermist causes not the longtermist causes, but theoretically some might think of it as a longtermist cause, and we count it as ‘Other’ in this analysis)

I acknowledge though that your concern is that "this categorisation helps to reify and entrench those divisions". I think this is possible, but I think that:

- There is something[1] unifying these causes

- It is important to talk about that (I think we'd be more confused/less informed if we just considered all the causes as separate/independent).

- Referring to these clusters of causes and ideas in terms of "longtermism" and "neartermism" is established terminology. Crucially, I don't think there's an obviously better set of terms, because "existential risk" wouldn't capture some causes within this bucket (e.g. in previous years we had a "broad longtermism" item which was also part of this cluster).

I think it's important enough not to hide the details in footnotes

I think it's an entirely reasonable view to think discussion of this should be in the text, not a footnote. Though we had a lot of information in both the footnotes and appendix, so it's tricky.

- ^

Though I don't claim it's a single thing, rather than a cluster of correlated things. And empirically, our longtermist-neartermist cause scores measure is strongly correlated with people's stated abstract beliefs. The single abstract item explicitly about longtermism is correlated with LT-NT at r=0.457, which is appreciably strong for a necessarily noisy cause prioritisation score and single item, in a social science context.
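As a rough aid to reading that correlation, here is a minimal sketch converting r into the share of variance the two measures have in common (r squared). Only the value r = 0.457 comes from the text above; the variable names are illustrative, and r squared is just one conventional way to interpret a correlation, not something reported in the survey write-up.

```python
# Correlation between the abstract longtermism item and the LT-NT score
# (value quoted in the footnote above).
r = 0.457

# Share of variance the two measures have in common under a linear model.
shared_variance = r ** 2

print(f"r = {r}, r^2 = {shared_variance:.3f}")
```

On this reading, roughly a fifth of the variance is shared, which is consistent with calling the association substantial for a single noisy item while leaving most of the variation unexplained.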

Thanks Grayden!

- I strongly agree that engagement =/= commitment or impact.

- That said, I'd note that the trend for higher engagement to be associated with stronger support for longtermist over neartermist causes is also observed across other proxies for engagement. For example, perhaps most surprisingly, having taken the GWWC pledge is (within our sample) significantly associated with stronger support for LT over NT.

Thanks for the question Huw. This is in reference to the EA cause mental health, rather than mental health for EAs (which would fall under movement building (assuming that the purpose was building the movement, rather than just narrowly helping EA)). This was a requested addition from 2018.

Thanks!

do you see that any changes over time in cause prioritization could be explained by changing demographics? You mentioned e.g. engagement level predicting cause prioritization. I am then thinking that if the % of low to high engagement EAs have changed over time, perhaps that partially drives the trends in your 4th chart?

Yes, that's definitely right, and one of the reasons why we plotted the interaction between engagement and time in the appendix.

The percentage of high engagement EAs (using the simple binary measure) has increased over time, from 44% in 2019 to 49% in 2020 and 55% in 2022. So you would expect this to increase support for causes which are shown to be more strongly supported by high engagement EAs across those years. That said, looking at the interaction plots, you can also see that support for Biosecurity and Nuclear and AI has increased among both high and low engagement EAs over that time period.
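To make the composition effect concrete, here is a minimal sketch. The engagement shares are the ones quoted above; the within-group support levels (0.60 and 0.40) are purely hypothetical placeholders chosen for illustration, not survey results.

```python
# Share of high-engagement respondents by year (figures quoted above).
high_share = {2019: 0.44, 2020: 0.49, 2022: 0.55}

# HYPOTHETICAL within-group support for some cause, held fixed across years
# so that any change in the overall figure comes from composition alone.
SUPPORT_HIGH = 0.60
SUPPORT_LOW = 0.40


def overall_support(share_high, support_high=SUPPORT_HIGH, support_low=SUPPORT_LOW):
    """Overall support as a share-weighted average of the two groups."""
    return share_high * support_high + (1 - share_high) * support_low


composition_only = {year: overall_support(share) for year, share in high_share.items()}

for year, value in composition_only.items():
    print(year, round(value, 3))
```

Under these made-up inputs, overall support drifts upward even though neither group changes its views. That is why the within-group trends in the interaction plots matter: an increase among both high and low engagement respondents cannot be explained by composition alone.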

It seems possible that both of these are neglected for similar reasons.

It seems surprising that funding would be the bottleneck (which means you can't just have more of both). But that has been my experience surprisingly often, i.e. core orgs are willing to devote many highly valuable staff hours to collaborating on survey projects, but balk at ~$10,000 survey costs.

Thanks again for the detailed reply Cameron!

It seems like you find the descriptor 'lukewarm' to be specifically problematic—I am considering changing the word choice of the 'headline result' accordingly given this exchange. (I originally chose to use the word 'lukewarm' to reflect the normal-but-slightly-negative skew of the results I've highlighted previously. I probably would have used 'divided' if our results looked bimodal, but they do not.)

I don't think our disagreement is to do with the word "lukewarm". I'd be happy for the word "lukewarm" to be replaced with "normal but slightly negative skew" or "roughly neutral, but slightly negative" in our disagreement. I'll explain where I think the disagreement is below.

Here's the core statement which I disagreed with:

EAs have lukewarm [normal but slightly-negative skew] views about longtermism

- Result: EAs (actively involved across 10+ cause areas) generally seem to think that AI risk and x-risk are less promising cause areas than ones like global health and development and animal welfare

The first point of disagreement concerned this claim:

- "EAs (actively involved across 10+ cause areas) generally seem to think that AI risk and x-risk are less promising cause areas than ones like global health and development and animal welfare"

If we take "promising" to mean anything like prioritise / support / believe should receive a larger amount of resources / believe is more impactful etc., then I think this is a straightforward substantive disagreement: I think whatever way we slice 'active involvement', we'll find more actively involved EAs prioritise X-risk more.

As we discussed above, it's possible that "promising" means something else. But I personally do not have a good sense of in what way actively involved EAs think AI and x-risk are less promising than GHD and animal welfare.[1]

EAs have lukewarm [normal but slightly-negative skew] views about longtermism

Concerning this claim, I think we need to distinguish (as I did above), between:

- What do people think of 'longtermism'? / What do people think about allocations to or prioritisation of longtermist causes?

- What do people think of EA's shift more towards longtermist causes?

Regarding the first of these questions, your second result shows slight disagreement with the claim "I think longtermist causes should be the primary focus in effective altruism". I agree that a reasonable interpretation of this result, taken in isolation, is that the actively involved EA community is slightly negative regarding longtermism. But taking into account other data, like our cause prioritisation data which shows actively engaged EAs strongly prioritise x-risk causes, or the result suggesting slight agreement with an abstract statement of longtermism, I'm more sceptical. I wonder if what explains the difference is people's response to the notion of these causes being the "primary focus", rather than their attitudes towards longtermist causes per se.[2] If so, these responses need not indicate that the actively involved community leans slightly negative towards longtermism.

In any case, this question largely seems to me to reduce to the question of what people's actual cause prioritisation is + what their beliefs are about abstract longtermism, discussed above.

Regarding the question of EAs' attitudes towards the "overall shift towards longtermist causes", I would also say that, taken in isolation, it's reasonable to interpret your result as showing that actively involved EAs lean slightly negative towards EA's shift towards longtermism. Again, our cause prioritisation results suggesting strong and increasing prioritisation of longtermist causes by more engaged EAs across multiple surveys give me pause. But the main point I'll make (which suggests a potential conciliatory way to reconcile these results) is to observe that attitudes towards the "overall shift towards longtermist causes" may not reflect attitudes towards longtermism per se. Perhaps people are Neutral/Agnostic regarding the "overall shift", despite personally prioritising longtermist causes, because they are Agnostic about what people in the rest of the community should do. Or perhaps people think that the shift overall has been mishandled (whatever their cause prioritisation). If so, the results may be interesting regarding EAs' attitudes towards this "shift", but not regarding their overall attitudes towards longtermism and longtermist causes.

Thanks again for your work producing these results and responding to these comments!

- ^

As I noted, I could imagine "promising" connoting something like new, young, scrappy cause areas (such that an area could be more "promising" even if people support it less than a larger established cause area). I could sort of see this fitting Animal Welfare (though it's not really a new cause area), but it's hard for me to see this applying to Global Health/Global Poverty which is a very old, established and large cause area.

- ^

For example, people might think EA should not have a "primary focus", but remain a 'cause-neutral' movement (even though they prioritise longtermist causes most strongly and think they should get most resources). Or people might think we should split resources across causes for some other reason, despite favouring longtermism.

Thanks Cameron!

It is not particularly surprising to me that we are asking people meaningfully different questions and getting meaningfully different results...

the main question of whether these are reasonable apples-to-apples comparisons.)

We agree that our surveys asked different questions. I'm mostly not interested in assessing which of our questions are the most 'apples-to-apples comparisons', since I'm not interested in critiquing your results per se. Rather, I'm interested in what we should conclude about the object-level questions given our respective results (e.g. is the engaged EA community lukewarm on longtermism, with a preference for global poverty and animal welfare, or is the community divided on these views, with the most engaged more strongly prioritising longtermism?).

in my earlier response, I had nothing to go on besides the 2020 result you have already published, which indicated that the plots you included in your first comment were drawn from a far wider sample of EA-affiliated people than what we were probing in our survey, which I still believe is true. Correct me if I'm wrong!)

I would just note that in my original response I showed how the results varied across the full range of engagement levels, which I think offers more insight into how the community's views differ across groups than just looking at one sub-group.

One further question/hunch I have in this regard is that the way we are quantifying high vs. low engagement is almost certainly different (is your sample self-reporting this/do you give them any quantitative criteria for reporting this?), which adds an additional layer of distance between these results.

The engagement scale is based on self-identification, but the highest engagement level is characterised with reference to "helping to lead an EA group or working at an EA-aligned organization". You can read more about our different measures of engagement and how they cohere here. Crucially, I also presented results specifically for EA org employees and people doing EA work so concerns about the engagement scale specifically do not seem relevant.

The most recent data you have that you mention briefly at the end of your response seems far more relevant in my view. It seems like both of the key results you are taking issue with here (cause prioritization and lukewarm longtermism views) you found yourself to some degree in these results

I respond to these two points below:

Your result 1:

The responses within the Cause Prioritization category which did not explicitly refer to too much focus on AI, were focused on insufficient attention being paid to other causes, primarily animals and GHD.

We specifically find the exact same two cause areas, animals and GHD, as being considered the most promising to currently pursue.

I don't think this tells us much about which causes people think most promising overall. The result you're referring to is looking only at the 22% of respondents who mentioned Cause prioritization as a reason for dissatisfaction with EA and were not one of the 16% of people who mentioned excessive focus on x-risk as a cause for dissatisfaction (38 respondents, of which 8 mentioned animals, 4 mentioned Global poverty and 7 mentioned another cause (the rest mentioned something other than a specific cause area)).
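To show how small the numbers behind that footnote are, here is a minimal sketch of the breakdown. The counts (38, 8, 4, 7) are the ones quoted above; treating the three groups as mutually exclusive is an assumption, suggested by the phrase "the rest" but not stated outright.

```python
# Respondents who cited cause prioritisation but not excessive x-risk focus
# (count quoted above).
subgroup_n = 38

# Causes mentioned within that subgroup (counts quoted above).
# ASSUMPTION: each respondent falls in exactly one of these groups.
mentions = {"animals": 8, "global_poverty": 4, "another_cause": 7}

# Everyone else mentioned something other than a specific cause area.
no_specific_cause = subgroup_n - sum(mentions.values())

# Each group as a share of the 38-person subgroup.
shares = {cause: count / subgroup_n for cause, count in mentions.items()}

print("no specific cause:", no_specific_cause)
for cause, share in shares.items():
    print(f"{cause}: {share:.1%}")
```

Under that assumption, half of this already small subgroup named no specific cause at all, so the animals and global poverty mentions rest on 12 respondents in total.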

Our footnote mentioning this was never intended to indicate which causes are overall judged most promising, just to clarify how our 'Cause prioritization' and 'Excessive focus on AI' categories differed. (As it happens, I do think our results suggest Global Poverty and Animal Welfare are the highest rated non-x-risk cause areas, but they're not prioritised more highly than all x-risk causes).

Your result 2 (listed as the first reason for dissatisfaction with the EA community):

Focus on AI risks/x-risks/longtermism: Mainly a subset of the cause prioritization category, consisting of specific references to an overemphasis on AI risk and existential risks as a cause area, as well as longtermist thinking in the EA community.

Our results show that, among people dissatisfied with EA, Cause prioritisation (22%) and Focus on AI risks/x-risks/longtermism (16%) are among the most commonly mentioned reasons.[1] I should also emphasise that 'Focus on AI risks/x-risks/longtermism' is not the first reason for dissatisfaction with the EA community, it's the fifth.

I think both our sets of results show that (at least) a significant minority believe that the community has veered too much in the direction of AI/x-risk/longtermism. But I don't think that either set of results shows that the community overall is lukewarm on longtermism. I think the situation is better characterised as division between people who are more supportive of longtermist causes[2] (whose support has been growing), and those who are more supportive of neartermist causes.

I don't think there is any strong reason to ignore or otherwise dismiss out of hand what we've found here—we simply sourced a large and diverse sample of EAs, asked them fairly basic questions about their views on EA-related topics, and reported the results for the community to digest and discuss.)

I certainly agree that my comments here have only addressed one specific set of results to do with cause prioritisation, and that people should assess the other results on their own merits!

- ^

And, to be clear, these categories are overlapping, so the totals can't be combined.

- ^

As we have emphasised elsewhere, we're using "longtermist" and "neartermist" as a shorthand, and don't think that the division is necessarily explained by longtermism per se (e.g. the groupings might be explained by epistemic attitudes towards different kinds of evidence).

I think your sample is significantly broader than ours: we were looking specifically for people actively involved (we defined as >5h/week) in a specific EA cause area...

In other words, I think our results do not support the claim that

[it] isn't that EAs as a whole are lukewarm about longtermism: it's that highly engaged EAs prioritise longtermist causes and less highly engaged more strongly prioritise neartermist causes.

given that our sample is almost entirely composed of highly engaged EAs.

I don't think this can explain the difference, because our sample contains a larger number of highly engaged / actively involved EAs, and when we examine results for these groups (as I do above and below), they show the pattern I describe.

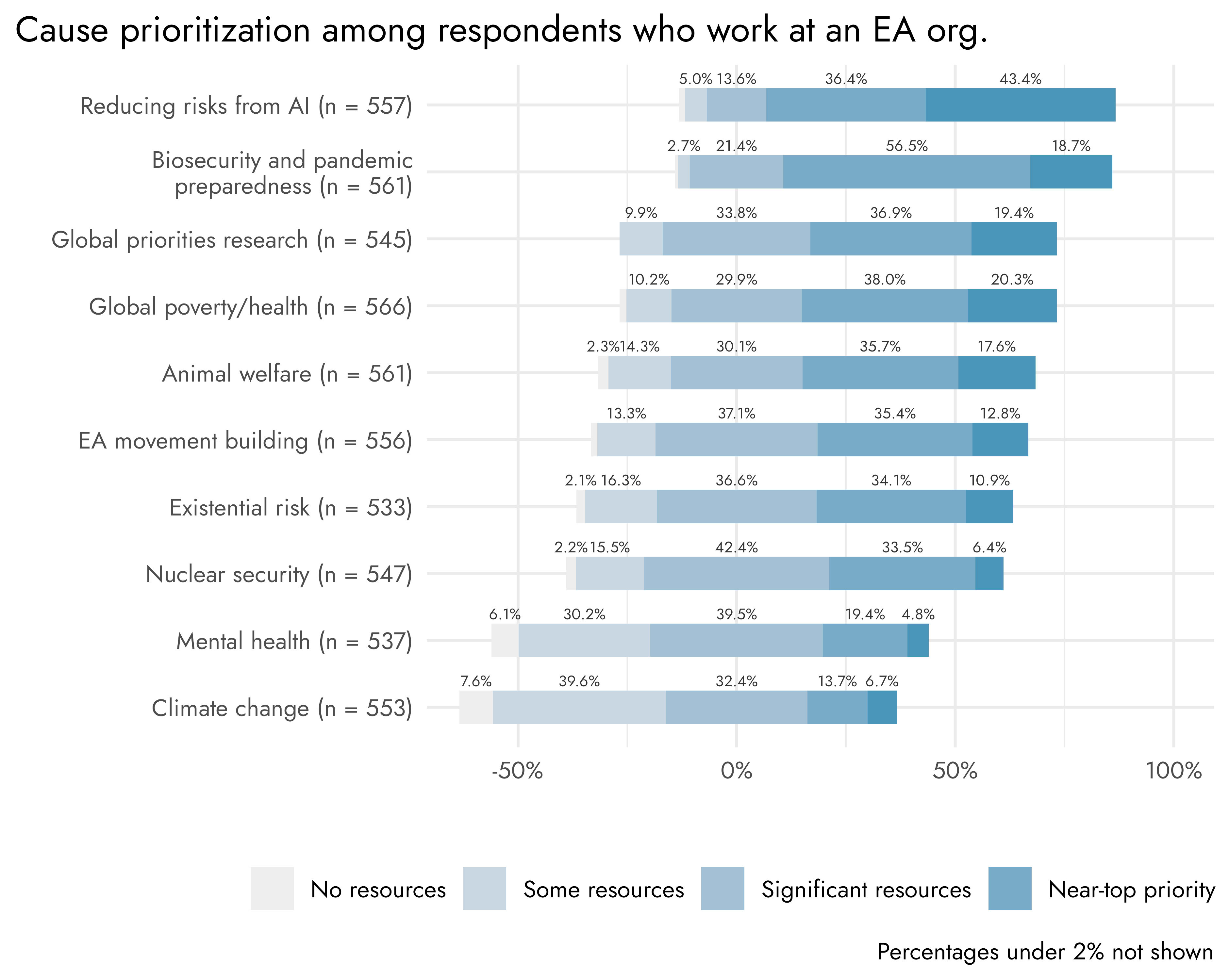

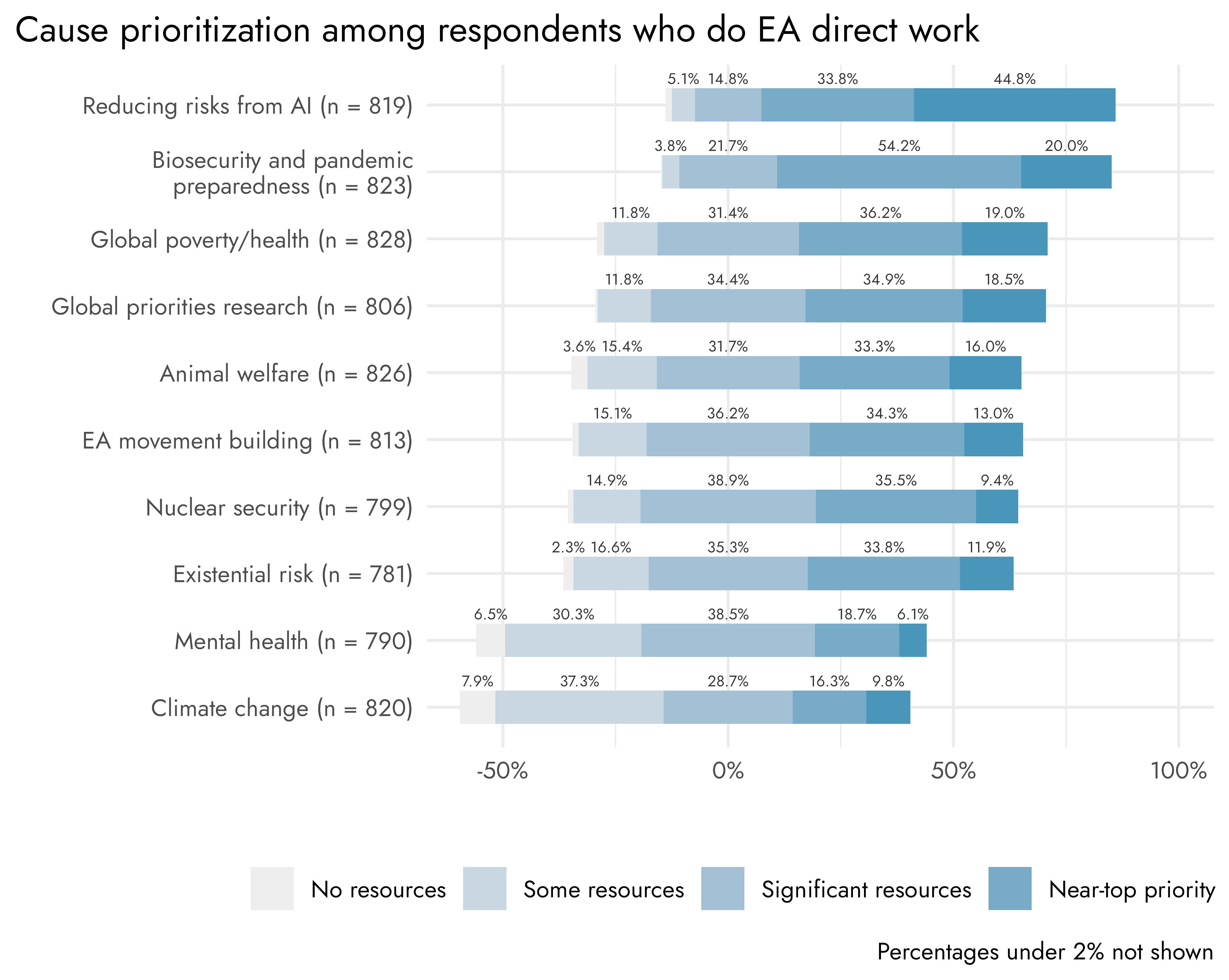

These are the results from people who currently work for an EA org or are currently doing direct work (for which we have >500 and 800 respondents respectively). Note that the EA Survey offers a wide variety of ways we can distinguish respondents based on their involvement, but I don't think any of them change the pattern I'm describing.

Both show that AI risk and Biosecurity are the most strongly prioritized causes among these groups. Global Poverty and Animal Welfare retain respectable levels of support, and it's important not to neglect that, but are less strongly prioritised among these groups.

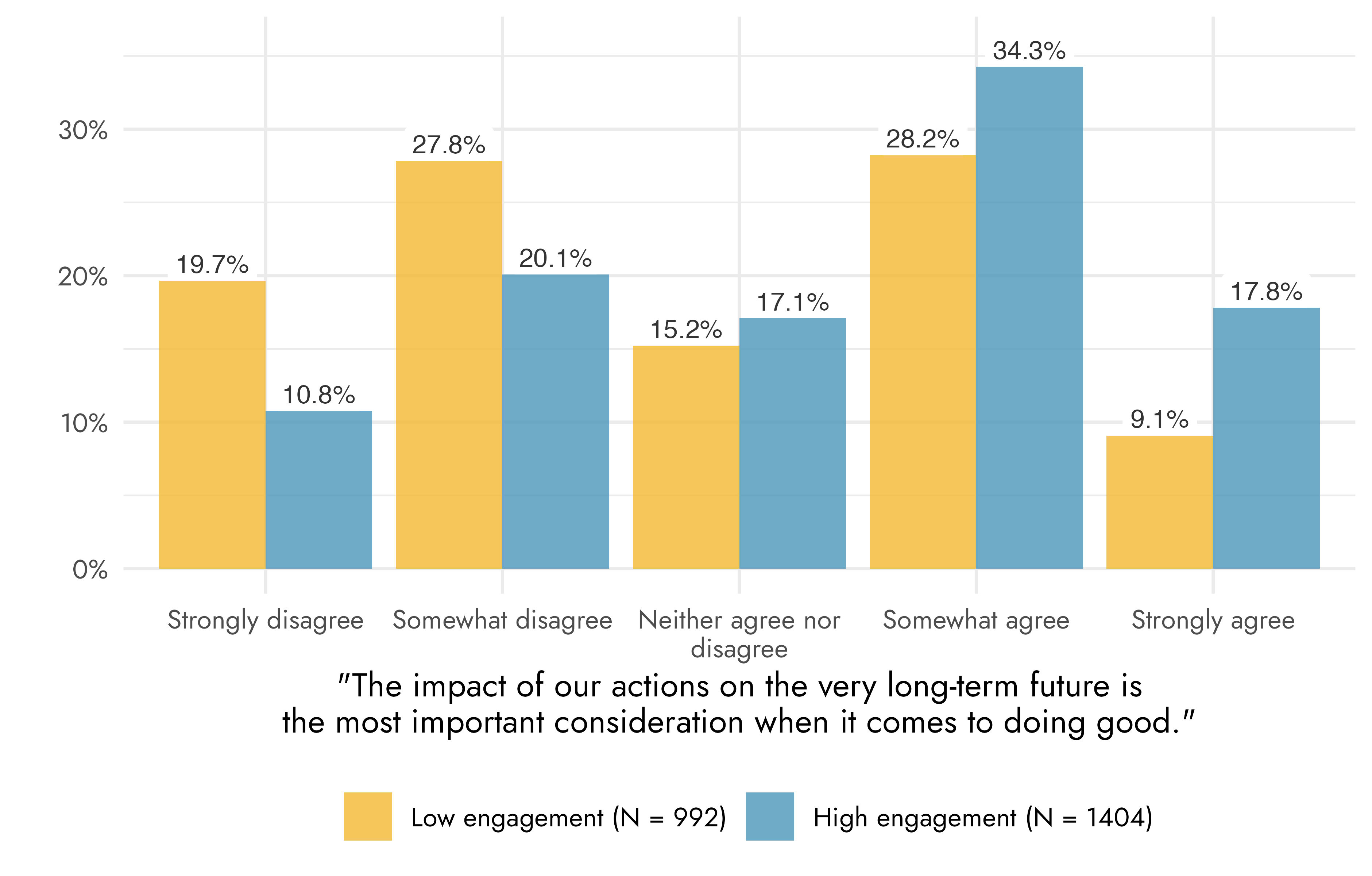

To assess the claim of whether there's a divergence between more and less highly engaged EAs, however, we need to look at the difference between groups, not just a single group of somewhat actively involved EAs. Doing this with 2022 data, we see the expected pattern of AI Risk and Biosecurity being more strongly prioritised by highly engaged EAs and Global Poverty less so. Animal Welfare notably achieves higher support among the more highly engaged, but still lower than the longtermist causes.[1]

Note that we are also measuring meaningfully different things related to cause area prioritization between the 2020 analysis and this one: we simply asked our sample how promising they found each cause area, while you seemed to ask about how resourced/funded each cause area should be... respondents could have validly responded 'very promising' to all of the cause areas we listed

I agree that this could explain some of the differences in results, though I think that how people would prioritize allocation of resources is more relevant for assessing prioritization. I think that promisingness may be hard to interpret both given that, as you say, people could potentially rate everything highly promising, and also because "promising" could connote an early or yet to be developed venture (one might be more inclined to describe a less developed cause area as "promising", than one which has already reached its full size, even if you think the promising cause area should be prioritized less than the fully developed cause areas). But, of course, your mileage may vary, and you might be interested in your measure for reasons other than assessing cause prioritization.

Finally, it is worth clarifying that our characterization of our sample of EAs seemingly having lukewarm views about longtermism is motivated mainly by these two results:

["I have a positive view of effective altruism's overall shift towards longtermist causes" and "I think longtermist causes should be the primary focus in effective altruism"]

Thanks, I think these provide useful new data!

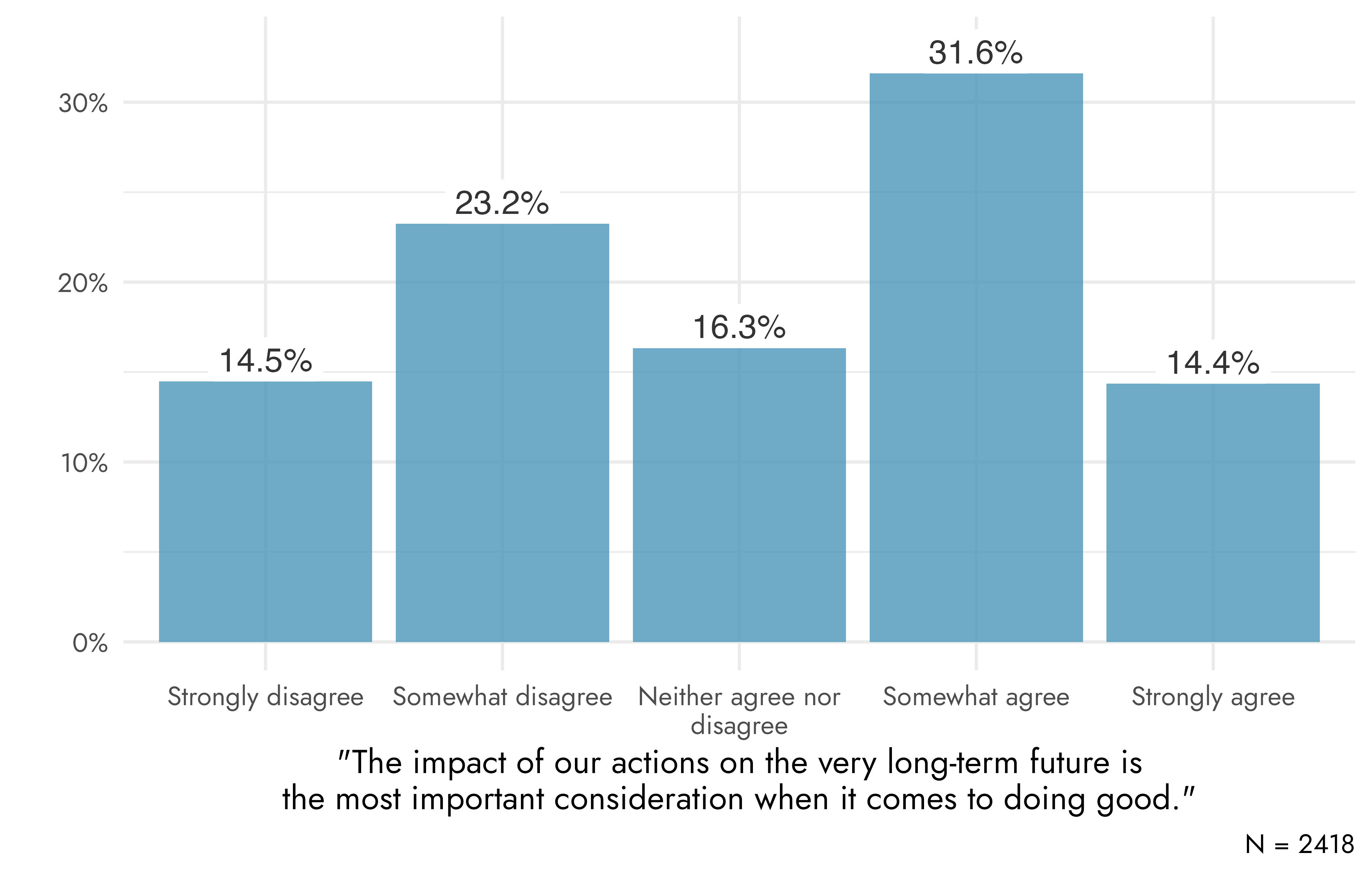

It's worth noting that we have our own, similar, measure concerning agreement with an explicit statement of longtermism: "The impact of our actions on the very long-term future is the most important consideration when it comes to doing good."

As such, I would distinguish 3 things:

- What do people think of 'longtermism'? [captured by our explicit statement]

- What do people think about allocations to / prioritisation of longtermist causes? [captured by people's actual cause prioritization]

- What do people think of EA's shift more towards longtermist causes? [captured by your 'shift' question]

Abstract support for (quite strong) longtermism

Looking at people's responses to the above (rather strong) statement of abstract longtermism we see that responses lean more towards agreement than disagreement. Given the bimodal distribution, I would also say that this reflects less a community that is collectively lukewarm on longtermism, and more a community containing one group that tends to agree with it and a group which tends to disagree with it.

Moreover, when we examine these results split by low/high engagement we see clear divergence, as in the results above.

Concrete cause prioritization

Moreover, as noted, the claim that it is "the most important consideration" is quite strong. People may be clearly longtermist despite not endorsing this statement. Looking at people's concrete cause prioritization, as I do above, we see that two longtermist causes (AI Risk and Biosecurity) are among the most highly prioritized causes across the community as a whole and they are even more strongly prioritised when examining more highly engaged EAs. I think this clearly conflicts with a view that "EAs have lukewarm views about longtermism... EAs (actively involved across 10+ cause areas) generally seem to think that AI risk and x-risk are less promising cause areas than ones like global health and development and animal welfare" and rules out an explanation based on your sample being more highly engaged.

Shift towards longtermism

Lastly, we can consider attitudes towards the "shift" towards longtermism, where your results show no strong leaning one way or the other, with a plurality being Neutral/Agnostic. It's not clear to me that this represents the community being lukewarm on longtermism, rather than, whatever their own views about cause prioritization, people expressing agnosticism about the community's shift (people might think "I support longtermist causes, but whether the community should is up to the community" or some such). One other datapoint I would point to regarding the community's attitudes towards the shift, however, is our own recent data showing that objection to the community's cause prioritization and perception of an excessive focus on AI / x-risk causes are among the most commonly cited reasons for dissatisfaction with the EA community. Thus, I think this reflects a cause for dissatisfaction for a significant portion of the community, even though large portions of the community clearly support strong prioritization of EA causes. I think more data about the community as a whole's views about whether longtermist causes should be prioritized more or less strongly by community infrastructure would be useful and is something we'll consider adding to future surveys.

- ^

Animal Welfare does not perform as a clear 'neartermist' cause in our data, when we examine the relationships between causes. It's about as strongly associated with Biosecurity as Global Poverty, for example.

Many thanks Devon!

I agree that if you adjusted most of these results by a factor of 3/4x (for the LTF vs GHD/NT ratios above), you'd see GHD/NT ahead pretty much across the board. The biggest ratios in favour of longtermism in the results above are ~2x (though closer to 4x among the highly engaged specifically).

That said, I think the relationship between funding and intra-EA influence is unclear. I would expect large diminishing returns, and for a lot of Meta resources to not be spent on intra-EA influence. My guess is also that a lot of the influence driving people from neartermist to longtermist causes comes from the support of EA elites in a way that is partially separable from funding.[1] So adjusting by the funding ratio would not be straightforward.

Obviously funding levels and influence are causally related in both directions. That said, I imagine OP/80K/CEA advocating for cause areas would have significant influence independent of their funding levels.